Why Machine Learning Systems Misbehave

And what makes them so difficult to work with

I frequently hear people complain that “X company hasn’t done X with X product”. This has been especially true for AI products. They’re brand new and show potential, but many (maybe even most?) fall short. Complaining about AI products as a consumer is understandable: AI has introduced a new way of computing that changes how consumer devices work. What concerns me more is the number of software engineers, the people building much of this technology, who make similar claims.

AI is a paradigm shift for software engineers too, but it’s our job to understand what makes technology work. Pretty soon most complex problems will be solved with machine learning. If you’re a software engineer who is going to keep building the technology of the future, you need a baseline familiarity with machine learning systems and how they work.

Let’s walk through what makes working with machine learning systems difficult and some concrete examples of how they compare to the traditional software systems we all know and love.

What makes these systems difficult to work with?

There are three fundamental characteristics of machine learning systems that make them difficult compared to traditional software systems:

Non-determinism

A lack of interpretability

Fluidity

If you’re an engineer, you’ve probably dealt with each of these in traditional systems, but machine learning systems deal with all three constantly, and always in combination.

Non-determinism

Simply put, a non-deterministic system is one where the same input can lead to different outputs. Non-determinism in machine learning systems stems from the models themselves. Both training and serving introduce elements of stochasticity (randomness) into models. In training, this randomness helps models generalize instead of overfitting to their training data.

In training, stochasticity is introduced primarily by randomly sampling mini-batches of training data, so the order in which a model sees the data varies. In serving, models sample each output from a probability distribution, and temperature controls how random that sampling is, which keeps outputs from being too similar. Consumers know temperature as a measure of “model creativity”. I’ve written more in-depth about temperature here if you’d like to learn more.
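To make both sources of randomness concrete, here is a minimal Python sketch. It assumes a toy three-word vocabulary and made-up model scores (logits). Real models sample over far larger vocabularies, and frameworks implement this in their own ways. `shuffled_minibatches` shows training-time shuffling. `sample_token` shows serving-time sampling: temperature divides the scores before a softmax, so values below 1 concentrate probability on the top choice and values above 1 spread it out.

```python
import math
import random


def shuffled_minibatches(data, batch_size, rng):
    """Yield mini-batches in a random order (training-time randomness).

    Calling this once per epoch gives each epoch a different order.
    """
    indices = list(range(len(data)))
    rng.shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [data[i] for i in indices[start:start + batch_size]]


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(vocab, logits, temperature, rng):
    """Pick the next token at random, weighted by the tempered probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Training: same data, different batch order on each run.
for batch in shuffled_minibatches(list(range(10)), batch_size=4, rng=random.Random()):
    print(batch)

# Serving: hypothetical scores for the next word after "Tomorrow will be".
vocab = ["sunny", "rainy", "cloudy"]
logits = [2.0, 1.0, 0.5]
rng = random.Random()  # unseeded, so reruns can print different words
print([sample_token(vocab, logits, 1.0, rng) for _ in range(5)])
print(softmax_with_temperature(logits, 0.1))   # almost all mass on "sunny"
print(softmax_with_temperature(logits, 10.0))  # close to uniform
```

Seeding the generator (for example `random.Random(42)`) makes the sampling repeatable, which helps in tests. Production serving stacks can still vary for other reasons, such as the order of floating-point operations on GPUs.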

Non-deterministic systems aren’t new to software engineers. However, inherent non-determinism becomes very difficult to work with at the scale of current generative AI models, especially when it's combined with their lack of interpretability.

A lack of interpretability

Machine learning models are made up of layers of numbers called weights. Each layer combines its inputs with its weights to produce an output. During training, the weights are used to produce an output in the forward pass. They are then adjusted on the backward pass to reduce the error in that output. Adjusting the weights on the backward pass is the learning process.
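Here is a minimal sketch of that loop. It assumes the simplest possible model: one weight `w` and one bias `b` fitting a straight line. Real models have billions of weights and rely on frameworks that compute gradients automatically. The forward pass, backward pass, and weight update still follow the same pattern.

```python
def train_linear(xs, ys, lr=0.05, epochs=500):
    """Fit y ≈ w*x + b with gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # the model's weights, starting from zero
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: use the current weights to produce outputs.
        preds = [w * x + b for x in xs]
        # How far each output is from its target.
        errors = [p - y for p, y in zip(preds, ys)]
        # Backward pass: gradients of the mean squared error w.r.t. w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Learning: nudge each weight against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # data generated by y = 2x + 1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))
```

Nothing in the code says "the slope is 2". The model only arrives there because the data pushed the weights that way.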

The combination of many of these weights allows models to be super efficient at learning information. We can see this in the state-of-the-art LLMs such as ChatGPT, Gemini, or Claude. The difficulty is understanding how specific information is stored. For example, you can talk to ChatGPT about gardening, but it’s very difficult to look at that model’s weights and identify exactly where the information about gardening is stored.
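Here is a toy illustration of why reading weights is hard. It rests on a deliberately simplified assumption: a linear model whose two input features are always equal, so either one carries the full signal. This is not how LLMs store knowledge, but it shows one reason the mapping is murky. Very different weights can produce exactly the same behavior, so inspecting any single weight doesn’t tell you where the information “lives”.

```python
def predict(weights, features):
    """A linear model: the output is a weighted sum of the input features."""
    return sum(w * f for w, f in zip(weights, features))


# Hypothetical setup: two input features that are always equal,
# so either one on its own carries the full signal.
inputs = [[x, x] for x in [0.5, 1.0, 2.0, 3.5]]

# Three very different sets of weights...
candidates = {
    "all on feature 1": [2.0, 0.0],
    "all on feature 2": [0.0, 2.0],
    "split evenly": [1.0, 1.0],
}

# ...that behave identically on every input.
for name, weights in candidates.items():
    print(name, [predict(weights, x) for x in inputs])
```

In a real network, redundancy like this is spread across a huge number of weights and nonlinear layers. That is part of what makes interpretability research so challenging.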

One of the things I love about traditional software systems is that the code doesn’t lie. If I want to understand how something works and I have access to the code, I can figure it out. There’s ongoing research into doing the same thing with machine learning models (my research was in understanding model feature extraction). Currently, though, we can learn more about how a model works from its data than from its actual weights and architecture. This is why there’s such a push from the AI community for LLM developers to openly release their weights.

Fluidity

While we don’t understand exactly how information is stored in machine learning models, we do know their behavior is governed by the data they’re trained on. This is a beautiful thing because we can change how systems work by changing data instead of code; however, this can also make iteration difficult.

Many machine learning systems iterate by updating model architecture, but a huge part of iteration is also updating the data a model is trained on. Each new state-of-the-art model has been trained on more current data when it's released. Some are even kept up-to-date continuously; see my article on online machine learning systems for more on that. Every time the training data is updated, the model’s behavior can change. Because we can’t easily interpret models, these changes can be hard to identify. Even a modest data update can lead to large changes in model output.
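Here is a minimal sketch of fluidity. It assumes a toy bigram “model” that only counts which word follows which. The training code is identical for both versions; only the data changes. `behavior_diff` is a hypothetical helper that compares two versions on a fixed set of probe words. That is one simple way to surface behavior changes you can’t read off the model itself.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count which word follows which; the counts are the whole 'model'."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent word after `word`, or None if it was never seen."""
    followers = model.get(word.lower())  # .get avoids adding new keys
    return followers.most_common(1)[0][0] if followers else None


def behavior_diff(old_model, new_model, probes):
    """Map each probe word whose prediction changed to (old, new)."""
    changes = {}
    for word in probes:
        before, after = predict_next(old_model, word), predict_next(new_model, word)
        if before != after:
            changes[word] = (before, after)
    return changes


old_data = ["the phone has buttons", "the phone has buttons and a cord"]
new_data = old_data + ["the phone has apps"] * 3  # fresher data, same code

v1, v2 = train_bigram(old_data), train_bigram(new_data)
print(behavior_diff(v1, v2, ["the", "phone", "has"]))  # {'has': ('buttons', 'apps')}
```

Only the prediction for “has” changes here. In a real model, the probes would need to cover far more inputs, because a data update can shift behavior in places you didn’t think to check.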

A good example of this is bias. If more recent training data is biased, a model will be biased and its output will reflect this. This can be hard to identify, but potentially harmful to users. This is why iterating on machine learning systems requires a great deal of experimentation to ensure model changes are working as intended. If ML experimentation interests you, make sure to subscribe because I’ll be writing about that soon.
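One simple check an experiment might include is comparing how often a model gives a positive prediction for each group. This is sometimes called the demographic parity difference. The sketch below assumes hypothetical predictions from a baseline and a retrained model on the same held-out examples. It also assumes two placeholder groups (`A` and `B`) and an arbitrary 0.05 tolerance. Real fairness evaluations use several metrics and far larger samples.

```python
from collections import Counter


def positive_rate_by_group(records):
    """records: (group, predicted_positive) pairs -> positive rate for each group."""
    totals, positives = Counter(), Counter()
    for group, predicted in records:
        totals[group] += 1
        positives[group] += int(predicted)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(records):
    """Largest gap in positive rate between any two groups."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())


def candidate_ok(baseline, candidate, max_increase=0.05):
    """Accept a retrained model only if its group gap grew by at most max_increase."""
    return parity_gap(candidate) - parity_gap(baseline) <= max_increase


# Hypothetical predictions from two model versions on the same held-out examples.
baseline = [("A", True), ("A", False), ("B", True), ("B", False)]
candidate = [("A", True), ("A", True), ("B", True), ("B", False)]
print(parity_gap(baseline), parity_gap(candidate), candidate_ok(baseline, candidate))  # 0.0 0.5 False
```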

Let’s get into a few concrete examples I think software engineers will appreciate, to better understand these characteristics of machine learning systems. If you aren’t a software engineer, there’s no need to worry. I’ll make sure to explain everything.

Testing

If you’re a software engineer, testing is something you’re familiar with. In traditional software systems, you test by spinning up a copy of the system under test. You mock the inputs it receives from the systems it depends on and give it a test input. An assertion then checks that, given those mocks and that input, the system produces the expected output.
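Here is what that looks like in practice. The sketch is a pytest-style test (a plain function using `assert`) with the dependency replaced by the standard library’s `unittest.mock.Mock`. `PriceService` and its `get_rate` dependency are hypothetical examples.

```python
from unittest import mock


class PriceService:
    """Hypothetical system under test: converts USD prices via a rates client."""

    def __init__(self, rates_client):
        self.rates_client = rates_client  # the dependency we will mock

    def price_in(self, amount_usd, currency):
        rate = self.rates_client.get_rate("USD", currency)
        return round(amount_usd * rate, 2)


def test_converts_using_rate():
    # Mock the dependency so the test never calls a real exchange-rate API.
    client = mock.Mock()
    client.get_rate.return_value = 0.5
    service = PriceService(client)

    # Deterministic system: same input, same output, so an exact assertion works.
    assert service.price_in(10.0, "EUR") == 5.0
    client.get_rate.assert_called_once_with("USD", "EUR")
```

Run it with pytest, or call `test_converts_using_rate()` directly. The exact `==` assertion only works because the system is deterministic.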

Here’s a critical question: does this method of testing work for an LLM? We run into a few issues:

LLMs are expensive. Spinning up a test system isn’t always feasible.

LLMs are non-deterministic. The same input can produce different outputs, so how can we assert the correct output when there isn’t a single expected one?

LLMs aren’t easily interpretable. We can’t inspect the model itself to verify that it’s functioning properly.
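One common workaround for the non-determinism problem is to assert properties that every acceptable output must have. You then check them across several samples rather than one. This sketch assumes a hypothetical `fake_llm` function standing in for a real model call and a made-up JSON output format. It addresses non-determinism only, not cost or interpretability.

```python
import json
import random


def fake_llm(prompt, rng):
    """Hypothetical stand-in for a non-deterministic model call."""
    answer = rng.choice(["Paris", "paris", "Paris, France"])
    return json.dumps({"answer": answer, "confidence": rng.uniform(0.7, 1.0)})


def check_output(raw):
    """Assert properties every acceptable output must have, not one exact string."""
    data = json.loads(raw)  # must be valid JSON
    assert set(data) == {"answer", "confidence"}, data
    assert "paris" in data["answer"].lower(), data
    assert 0.0 <= data["confidence"] <= 1.0, data


def run_property_checks(samples=20, seed=None):
    """Sample repeatedly, because any single run might differ from the last."""
    rng = random.Random(seed)
    for _ in range(samples):
        check_output(fake_llm("What is the capital of France?", rng))


run_property_checks()
```

An exact check like `assert output == "Paris"` would pass on some runs and fail on others. The property checks accept any of the valid variants.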

This is my favorite concrete example of why machine learning systems misbehave. In traditional software engineering, testing is the bread and butter of ensuring a system is doing what we want it to do. In machine learning systems, this becomes a lot more difficult.

If you’re interested in learning the current best practices for testing LLMs, do some research into LLM-as-a-judge. This approach uses one LLM to evaluate whether another LLM’s output is what we expect. It’s a super interesting, complex problem space.
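Here is a minimal sketch of the idea. It assumes a hypothetical `call_judge` function that sends a prompt to some judge model and returns its text reply. The prompt wording and the 1 to 5 scale are illustrative, not a standard. Because the judge is non-deterministic as well, `passes` takes the median of several votes.

```python
import re
from typing import Callable

JUDGE_PROMPT = """You are grading an answer.
Question: {question}
Reference notes: {reference}
Answer to grade: {answer}
Reply with a score from 1 (wrong) to 5 (fully correct) formatted as SCORE: <n>."""


def judge(question: str, answer: str, reference: str, call_judge: Callable[[str], str]) -> int:
    """Ask the judge model to grade `answer` and parse its 1-5 score."""
    reply = call_judge(JUDGE_PROMPT.format(question=question, reference=reference, answer=answer))
    match = re.search(r"SCORE:\s*([1-5])", reply)
    if match is None:
        raise ValueError(f"judge reply had no score: {reply!r}")
    return int(match.group(1))


def passes(question, answer, reference, call_judge, threshold=4, votes=3):
    """The judge is non-deterministic too, so use the median of several votes."""
    scores = sorted(judge(question, answer, reference, call_judge) for _ in range(votes))
    return scores[len(scores) // 2] >= threshold


def stub_judge(prompt):
    # Stand-in for a real model call: gives 5 only if the graded answer mentions Paris.
    graded = prompt.split("Answer to grade:")[1]
    return "SCORE: 5" if "Paris" in graded else "SCORE: 1"


print(passes("What is the capital of France?", "Paris", "The capital is Paris.", stub_judge))
```

In practice, `stub_judge` would be replaced by a real model call. The judge itself then needs evaluating, which is part of what makes this problem space so complex.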

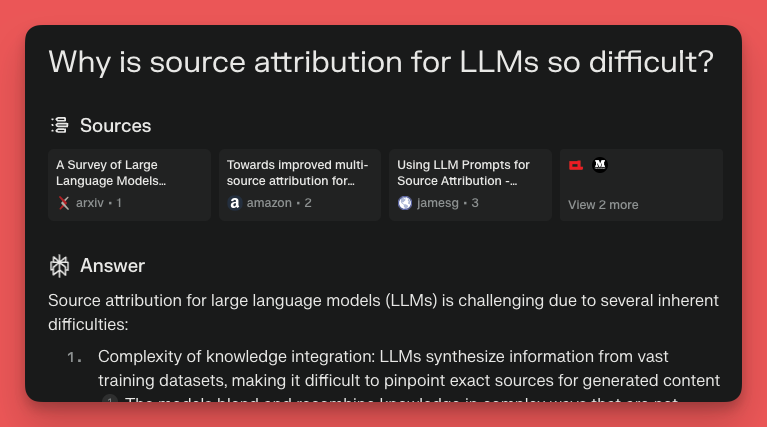

Source attribution

Intellectual property rights regarding AI have been a hot topic recently. One suggestion I see a lot is to “simply” have LLMs attribute their sources when they give information. This is an example of a topic people complain about as if companies just choose not to do it. In reality, it’s a very hard technical problem.

Remember, we can’t interpret how models store information. So how do we attribute sources? What about the millions of topics LLMs have learned from multiple sources? If we’re attributing sources, how do we test to ensure source attribution is correct?

The first major application where source attribution drew complaints was LLM products that search the web, like Gemini or Perplexity. This application has made progress by combining LLMs with traditional search systems. The LLM uses search to find information on the internet and shares that information alongside its own knowledge. This works because attribution happens in the search step. The system knows which pages it retrieved, so it can cite them using existing attribution practices instead of tracing information through the model’s weights.
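The key point is that the citation comes from the search step, not from the model’s weights. Here is a minimal sketch of that step. It assumes a hypothetical three-document index (the `example.com` URLs are placeholders) and simple word-overlap ranking in place of a real search engine. The model call itself is left out; the function only builds a prompt with numbered sources and returns the URLs it can cite.

```python
import re

# Hypothetical search index: each document keeps the URL it came from.
DOCUMENTS = [
    {"url": "https://example.com/tomatoes", "text": "Tomatoes need six to eight hours of sun a day."},
    {"url": "https://example.com/basil", "text": "Basil grows well next to tomatoes and needs regular watering."},
    {"url": "https://example.com/taxes", "text": "File your taxes before the deadline."},
]


def tokenize(text):
    return set(re.findall(r"[a-z]+", text.lower()))


def search(query, docs, k=2):
    """Rank documents by shared query words (a stand-in for real search)."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d["text"])), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d["text"])]  # drop zero-overlap hits


def build_grounded_prompt(question, docs):
    """Number each retrieved source so the model's answer can cite [1], [2], ..."""
    hits = search(question, docs)
    sources = "\n".join(f"[{i}] {d['text']} ({d['url']})" for i, d in enumerate(hits, 1))
    prompt = f"Answer using only the sources below and cite them by number.\n{sources}\nQuestion: {question}"
    return prompt, [d["url"] for d in hits]


prompt, urls = build_grounded_prompt("How much sun do tomatoes need?", DOCUMENTS)
print(prompt)
print(urls)
```

The returned URL list is what lets an interface show sources. The model can still misattribute a claim to the wrong source, which is part of why testing attribution is hard.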

Where this gets a lot more difficult is generative AI art. Art is abstract, and AI-generated art is even more so. How can we trace an abstract feature of an image back to its origin? It’s incredibly tough.

Legacy Systems

I struggled to find a traditional software engineering example to explain fluidity because it’s pretty unique to machine learning systems. I think the best comparison to the difficulty of working with fluidity is something all software engineers are familiar with: working with legacy systems.

Legacy systems are old, out-of-date software systems. Their lack of upkeep makes them notoriously difficult to work with. Their software is outdated, years of duct-tape fixes have made their architecture complex, and their documentation is usually very limited. Most software teams have some sort of legacy system they need to keep running for clients, and I’ve never met an engineer who enjoys it.

The frustration of understanding a legacy system and keeping its lights on is similar to the difficulty of working with data fluidity. The upside of machine learning systems is that although data fluidity is difficult, it’s a much more fun problem to work on. The downside is that machine learning progresses so quickly that the traditional software parts of machine learning systems can rapidly become legacy code.

Hopefully this provides some insight into why machine learning systems misbehave and what makes them difficult to work with. I find there are two ways to think about machine learning to make these concepts easier to understand:

Don’t think of AI research as pushing the capabilities of AI; instead, think of it as getting AI to do what we want it to do. This framing still includes pushing AI’s capabilities. However, it much better captures the difficulties AI research faces and where its efforts are concentrated.

Think of LLMs as a knowledge store similar to a database. Instead of storing rows in a table, information is vectorized and stored as numbers that interact with one another to produce an output. Instead of being inserted by a user or system, information is learned from the semantics of the language the model is trained on.

I find these two viewpoints help me think about machine learning more accurately and change the way I approach problems. They make the ambiguities of working with machine learning systems much clearer.

If this article interested you, you’d probably like my overview of what makes machine learning infrastructure different from regular software systems. You can check that article out here. You can also support Society’s Backend for $1/mo to get extra articles and have input into what I write about. Thanks for reading!

Always be (machine) learning,

Logan
