What you need to understand about LLM creativity

A simple overview of temperature and its effect on LLM output

Thank you to everyone who has subscribed recently! I appreciate your support of my writing. My goal is for every member of Society’s Backend to gain an effective understanding of machine learning, and I’ll keep sharing the information I think you need to get there. Don’t forget to follow me on X, LinkedIn, and YouTube.

The thing I love most about machine learning is that the math makes sense. On the surface it seems really complex, but I’m a firm believer that anyone can understand machine learning if they want to. Machine learning uses math in beautiful and simple ways to make numbers behave the way we want them to. One of the best examples of this is something you may be familiar with if you’ve used an LLM before: temperature.

Many people think of temperature as a measure of creativity, and they’re right, but only indirectly. Temperature is actually a dial for randomness: a higher temperature makes an LLM’s output more random. It’s similar to a saying I’ve heard about art: a bit of mess is required to enable creativity (if you know the exact quote or who it can be attributed to, let me know). Allowing a bit of mess in our LLMs lets them be a bit more “creative”.

To understand temperature, we must first understand the softmax function. The best visual overview of softmax I’ve seen is in a recent 3Blue1Brown video. I often bring up the 3Blue1Brown YouTube channel as a great resource for visual explanations of math, and this is an excellent example of why. If you want a more visual explanation of temperature, I suggest watching the video at the link above (the link should take you to the right part of the video). I recommend watching the entire video if you’re interested in understanding what GPTs are. It even includes an excellent overview of deep learning.

Softmax is the piece of the LLM that turns the model’s raw scores into probabilities for the next word, and it’s where temperature is applied to spark creativity. For a very basic overview of what softmax accomplishes, here’s a quote from 3Blue1Brown:

Softmax is the standard way to turn an arbitrary list of numbers into a valid distribution in such a way that the largest values end up closest to 1, and the smaller values end up very close to 0.

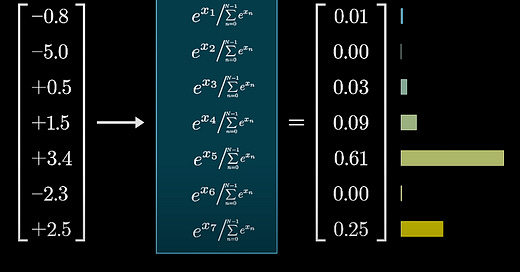

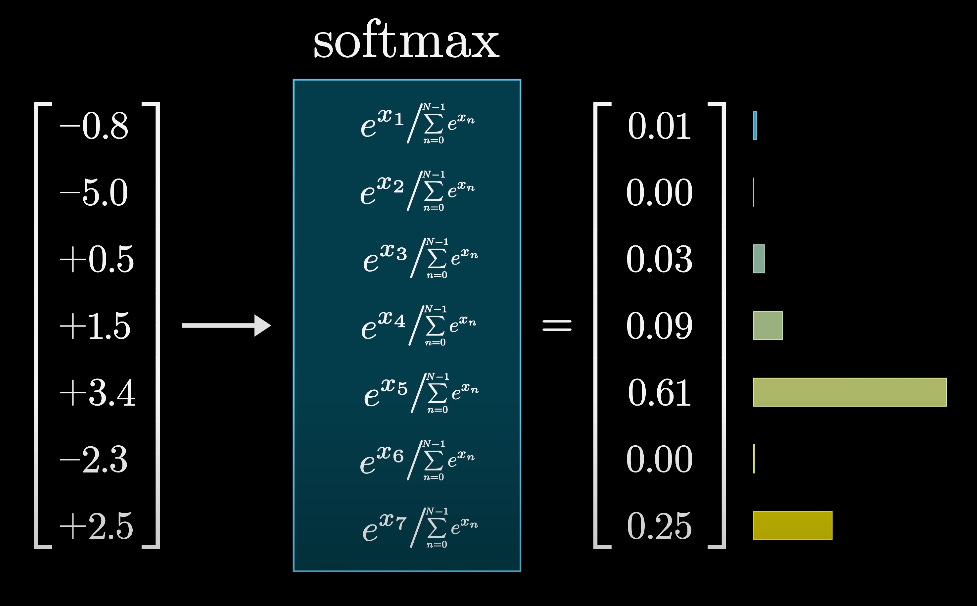

Essentially, when an LLM predicts the next word (or token) in its output, the final vector from its last layer is multiplied by a matrix that holds one vector for each word in the model’s vocabulary. This produces a single score for every word in the vocabulary. These scores (often called logits) reflect how likely each word is to come next, but they aren’t probabilities yet: they aren’t confined to any range, so some may be large negative numbers while others are large positive ones.
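To make this concrete, here is a minimal pure-Python sketch with made-up numbers. It assumes a toy model whose final hidden vector has 4 dimensions and a hypothetical 5-token vocabulary; real models use far larger hidden vectors and vocabularies. The names `vocab`, `hidden`, `unembedding`, and `compute_logits` are illustrative only and don’t come from any particular model or library.

```python
# Toy illustration with hypothetical numbers: turning the model's final hidden
# vector into one raw score (logit) per vocabulary token.

# Hypothetical 5-token vocabulary.
vocab = ["cat", "dog", "car", "tree", "the"]

# Hypothetical final hidden vector from the model's last layer (4 dimensions).
hidden = [0.5, -1.0, 2.0, 0.3]

# Hypothetical vocabulary matrix: one 4-dimensional row per token.
unembedding = [
    [1.0, 0.0, 2.0, 0.5],    # cat
    [0.8, -0.5, 1.5, 0.0],   # dog
    [-1.0, 2.0, -0.5, 1.0],  # car
    [0.0, 0.3, 0.2, -2.0],   # tree
    [0.1, 0.1, 0.1, 0.1],    # the
]

def compute_logits(hidden, unembedding):
    """Return one logit per token: the dot product of that token's row with the hidden vector."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in unembedding]

logits = compute_logits(hidden, unembedding)
for token, score in zip(vocab, logits):
    print(f"{token:>5}: {score:+.2f}")
```

Notice that the resulting scores are unbounded: some are negative, and together they don’t sum to 1, so they can’t be read as probabilities until they go through softmax.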

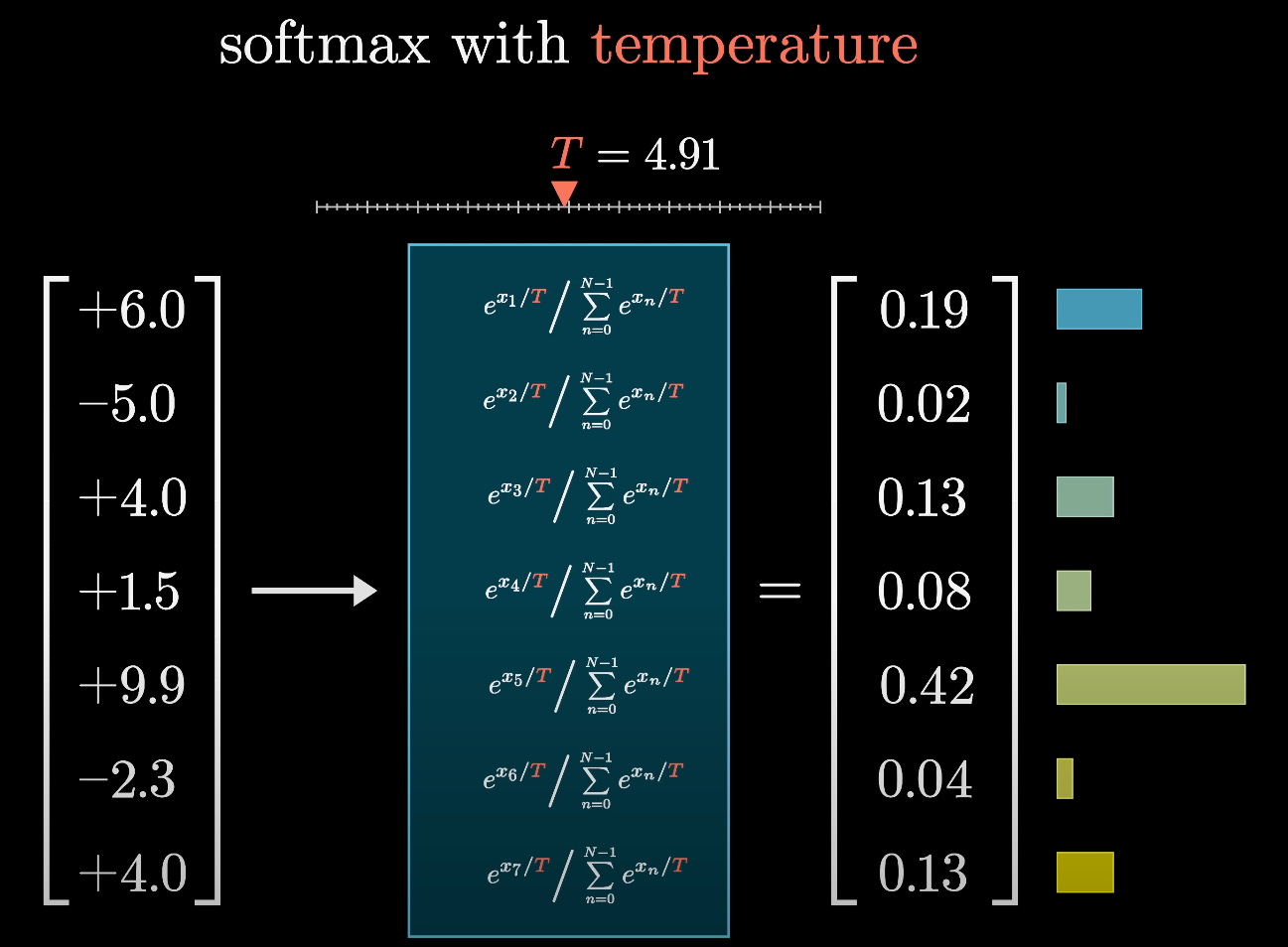

After those values are created, they are passed through a softmax function (see the image above) to produce a normalized probability distribution. Normalization squeezes the otherwise unruly, highly variable values into the range between 0 and 1 so that they all add up to 1. The closer a value is to 1, the more likely that word is to be the next output. Normalization makes it much easier for an LLM (or a human, for that matter) to compare probabilities. The simplest strategy is for the LLM to pick the highest value every time, but this tends to produce repetitive responses. To avoid this, we add temperature to the softmax function.
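The softmax step can be sketched in a few lines of pure Python. For each raw score z_i it computes exp(z_i) divided by the sum of exp(z_j) over every token j in the vocabulary. The vocabulary and logits below are hypothetical. Subtracting the largest logit before exponentiating is a common numerical-stability trick added here, not something the formula requires: it leaves the result unchanged but keeps `math.exp` from overflowing on very large scores. The helper `greedy_pick` is a hypothetical name for the “always take the highest value” strategy described above.

```python
import math

def softmax(logits):
    """Convert raw scores (logits) into non-negative probabilities that sum to 1."""
    # Subtracting the max doesn't change the result, but it prevents
    # math.exp from overflowing when some logits are very large.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(vocab, probs):
    """Always return the single most probable token (no randomness)."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return vocab[best]

# Hypothetical logits for a 5-token vocabulary, including very large and very negative values.
vocab = ["cat", "dog", "car", "tree", "the"]
logits = [1000.0, 998.0, -200.0, 5.0, 0.0]

probs = softmax(logits)
for token, p in zip(vocab, probs):
    print(f"{token:>5}: {p:.4f}")
print("greedy choice:", greedy_pick(vocab, probs))
```

Because greedy selection has no randomness, running it on the same logits always returns the same token, which is exactly the source of the repetitive responses mentioned above.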

Temperature is a parameter we add to the softmax function that gives more weight to lower values as it gets larger. It works by dividing each exponent in the softmax function by a value T: for a raw score z_i, e^(z_i) becomes e^(z_i / T). As T gets larger, the probability distribution flattens toward uniform; as T gets smaller, it sharpens around the highest value. T can be any positive number we choose. Higher temperatures mean more randomness, and as T approaches 0 the top-scoring word takes nearly all of the probability, so no randomness is introduced at all. (A temperature of exactly 0 would mean dividing by zero, so implementations typically treat it as always picking the top word.) Testing has shown that temperature values above 2 tend to meaningfully degrade model performance.
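Here is a minimal pure-Python sketch of temperature-scaled softmax and sampling: each logit z_i is divided by T before exponentiating, so the probability of token i is exp(z_i / T) divided by the sum of exp(z_j / T) over all tokens. The vocabulary and logits are hypothetical, and the function names are illustrative. Treating T = 0 as greedy selection is an assumption that mirrors common practice rather than part of the formula itself.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Softmax over logits / temperature. Requires temperature > 0."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use greedy decoding for T = 0")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature, rng=random):
    """Pick the next token. A temperature of exactly 0 is treated as greedy (always the top token)."""
    if temperature == 0:
        return vocab[max(range(len(logits)), key=lambda i: logits[i])]
    probs = softmax_with_temperature(logits, temperature)
    # Draw one token at random, weighted by its probability.
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical logits for a 4-token vocabulary.
vocab = ["blue", "clear", "cloudy", "purple"]
logits = [4.0, 3.0, 2.0, 0.5]

# Show how the distribution changes as temperature rises.
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: " + ", ".join(f"{w}={p:.3f}" for w, p in zip(vocab, probs)))

rng = random.Random(0)  # seeded so the samples are reproducible
print("T=0  :", [sample_next_token(vocab, logits, 0) for _ in range(5)])
print("T=1.0:", [sample_next_token(vocab, logits, 1.0, rng) for _ in range(5)])
```

Running this shows the “dial of randomness” directly: at low T the top token dominates, while at higher T the less likely tokens (like “purple”) get a noticeably larger share of the probability and are picked more often.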

And that’s a simple explanation of how LLMs become “creative”. As everyone uses LLMs more, understanding the basics of how they work becomes more important. Knowing how a machine learning system arrives at an output is important for using that system properly. Understanding temperature shows us that:

Machine learning math is beautiful and simple.

What we call creativity in AI is really randomness, but that randomness can lead to creative results.

A model’s randomness can be tweaked. Currently, it’s mainly LLM API users who can adjust temperature. As LLMs become more widely adopted, this feature will likely make its way into user interfaces as well.

That’s all for this week! Let me know if I’m missing any important details. You can support Society’s Backend for just $1/mo for your first year, and it’ll get you access to even more resources, my notes, and more benefits as I think of them.
