Machine Learning Infrastructure: The Bridge Between Software Engineering and AI

What makes machine learning infra so important and why I find it so interesting

Due to popular demand, here's an overview of machine learning infrastructure (ML infra). The aim of this article isn't to delve into the complexities of ML infra but to provide a straightforward overview, highlighting the differences between machine learning infrastructure and typical software systems. My goal is to make this understandable for all readers and explain why creating a robust machine learning infrastructure is a challenging task.

I've had the privilege of developing ML infra at two notable companies: Microsoft (previously) and Google (currently). I’ll draw insights from my experiences at both organizations, but this article will not focus on any specific company or application. Instead, it will concentrate on what makes machine learning infrastructure intriguing and why I find it so captivating.

I write short articles weekly about important software engineering and machine learning concepts to make them easily understandable. If this interests you, subscribe to get them in your inbox.

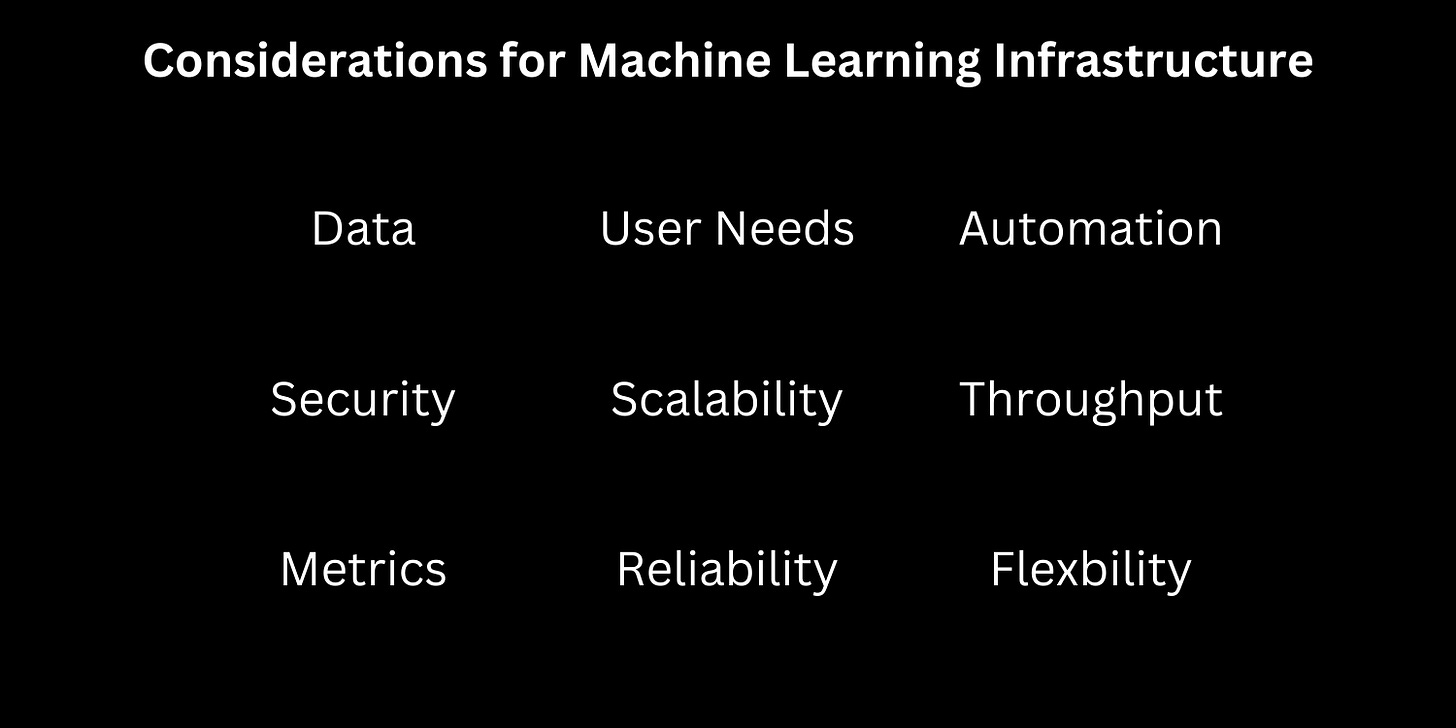

Below are the considerations for machine learning infrastructure that I deem most important. We’ll go through each individually and touch on what it means for ML infra.

Data

Machine learning infrastructure revolves around how data is managed, pipelined, and utilized. The infrastructure must process vast amounts of data through all training phases. Factors to consider for training data include:

Selecting appropriate data for training

Monitoring sensitive data and using it appropriately

Determining where training data is stored

Understanding how training data is retrieved

Deciding how training data should be pipelined for particular use cases
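To make the selection and pipelining questions above a little more concrete, here is a minimal sketch of a lazy training-data pipeline. The record shape (a dict with `text` and `sensitive` keys) and the function names are my own assumptions for illustration, not any particular platform's API.

```python
from typing import Dict, Iterable, Iterator, List

# Hypothetical record shape: {"text": str, "sensitive": bool}
Record = Dict[str, object]


def select_records(records: Iterable[Record]) -> Iterator[Record]:
    """Keep only records that are not flagged as sensitive."""
    for record in records:
        if not record.get("sensitive", False):
            yield record


def batch_records(records: Iterable[Record], batch_size: int) -> Iterator[List[Record]]:
    """Group a stream of records into batches; the last batch may be smaller."""
    batch: List[Record] = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


def training_batches(records: Iterable[Record], batch_size: int) -> Iterator[List[Record]]:
    """Compose selection and batching into one lazy pipeline."""
    return batch_records(select_records(records), batch_size)


# Ten toy records; every fourth one (0, 4, 8) is flagged as sensitive.
raw = [{"text": f"example {i}", "sensitive": i % 4 == 0} for i in range(10)]
print([len(b) for b in training_batches(raw, batch_size=3)])  # [3, 3, 1]
```

Because each stage is a generator, nothing is loaded until the trainer asks for the next batch, which is the property that matters once the data no longer fits in memory.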

We must also consider the data within the model:

Do we need to partition embeddings and weights?

If so, where do we store them for quick retrieval and updates?

This will become increasingly important as machine learning research advances and models grow larger.
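As a rough illustration of what partitioning embeddings can look like, the sketch below splits a toy embedding table across shards by row id. The modulo placement rule, the class name, and the plain dictionaries standing in for separate hosts are all assumptions made for the example; real systems use more sophisticated placement.

```python
from typing import Dict, List


class ShardedEmbeddingTable:
    """Toy embedding table split across shards by row id (row_id % num_shards)."""

    def __init__(self, num_shards: int, dim: int) -> None:
        self.num_shards = num_shards
        self.dim = dim
        # Each dict stands in for storage on a separate host (an assumption).
        self.shards: List[Dict[int, List[float]]] = [{} for _ in range(num_shards)]

    def shard_for(self, row_id: int) -> int:
        """Decide which shard owns a row."""
        return row_id % self.num_shards

    def lookup(self, row_id: int) -> List[float]:
        """Fetch a row; rows that were never written read as a zero vector."""
        shard = self.shards[self.shard_for(row_id)]
        return shard.get(row_id, [0.0] * self.dim)

    def update(self, row_id: int, gradient: List[float], lr: float) -> None:
        """Apply one plain SGD step to a single row on its owning shard."""
        current = self.lookup(row_id)
        updated = [w - lr * g for w, g in zip(current, gradient)]
        self.shards[self.shard_for(row_id)][row_id] = updated


table = ShardedEmbeddingTable(num_shards=4, dim=2)
table.update(10, gradient=[1.0, -2.0], lr=0.5)
print(table.shard_for(10), table.lookup(10))  # 2 [-0.5, 1.0]
```

Both lookups and updates touch exactly one shard, so the question of where each shard lives translates directly into retrieval and update latency.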

Data centers form the core of all these operations. Machine learning (particularly at the level required to warrant machine learning engineers) demands massive computational power. At the data center level, it's crucial to:

Understand the network of the data center:

How do server racks communicate, and how does this affect model training?

Where can data (training data, embeddings, weights) be stored within the training servers?

Maximize compute architecture:

What kind of compute are we training on?

How do we train across multiple processors?

Schedule jobs efficiently and reliably:

How do we optimize available compute resources?

How do we assign tasks appropriately within the data center?
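The scheduling questions above can be sketched with a simple greedy heuristic: place the largest jobs first, each on the machine with the tightest fit. This is only one possible policy, and the job names, machine names, and accelerator counts are hypothetical.

```python
from typing import Dict, List, Tuple


def schedule_jobs(
    jobs: Dict[str, int], machines: Dict[str, int]
) -> Tuple[Dict[str, str], List[str]]:
    """Assign each job to one machine.

    jobs maps job name -> accelerators needed.
    machines maps machine name -> accelerators available.
    Returns (placements, names of jobs that could not be placed).
    """
    free = dict(machines)  # remaining accelerators per machine
    placements: Dict[str, str] = {}
    unscheduled: List[str] = []
    # Largest jobs first, so small jobs do not fragment the big machines.
    for job, needed in sorted(jobs.items(), key=lambda kv: (-kv[1], kv[0])):
        candidates = [(cap, name) for name, cap in free.items() if cap >= needed]
        if not candidates:
            unscheduled.append(job)
            continue
        _, best = min(candidates)  # tightest fit: least leftover capacity
        placements[job] = best
        free[best] -= needed
    return placements, unscheduled


placements, unscheduled = schedule_jobs(
    jobs={"pretrain": 8, "finetune": 4, "eval": 2},
    machines={"rack-a": 8, "rack-b": 4},
)
print(placements)   # {'pretrain': 'rack-a', 'finetune': 'rack-b'}
print(unscheduled)  # ['eval']
```

A production scheduler also has to weigh priorities, preemption, and network topology, which this sketch deliberately ignores.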

Security

Standard security measures should be implemented at the data center level. However, unique security considerations arise when dealing with user data. This data must be tracked so that its usage is understood and it stays protected from unauthorized access. In practice, the infrastructure must let models access user data only when authorized, while the engineers developing the infrastructure must be prevented from accessing it themselves. This gets more complex as more laws regulating data usage are introduced.
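One way to picture the rule that authorized training jobs may read user data while engineers may not is a small guard that checks every read and records it. The principal kinds, dataset name, and class names below are hypothetical, and real access control involves far more than this sketch.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Principal:
    name: str
    kind: str  # "training_job" or "engineer" (hypothetical categories)


@dataclass
class UserDataGuard:
    # (job name, dataset) pairs that have been explicitly authorized
    grants: Set[Tuple[str, str]] = field(default_factory=set)
    # One (principal name, dataset, allowed) entry per read attempt
    audit_log: List[Tuple[str, str, bool]] = field(default_factory=list)

    def can_read(self, principal: Principal, dataset: str) -> bool:
        """Allow only authorized training jobs, and log every attempt."""
        allowed = (
            principal.kind == "training_job"
            and (principal.name, dataset) in self.grants
        )
        self.audit_log.append((principal.name, dataset, allowed))
        return allowed


guard = UserDataGuard(grants={("ranker-train", "user_clicks")})
print(guard.can_read(Principal("ranker-train", "training_job"), "user_clicks"))  # True
print(guard.can_read(Principal("alice", "engineer"), "user_clicks"))  # False
```

Logging denied attempts as well as granted ones is what makes it possible to track how user data is actually used.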

Metrics

Regular metrics such as request latency, request reliability, and traffic monitoring are necessary to prevent request drops when redeploying/updating models. Machine learning also requires advanced metrics for understanding models and making informed decisions. This includes:

Assessing a model's performance

Comparing different models to identify improvements

Monitoring performance for models serving live traffic - it's more than just examining loss

Understanding why an issue occurs by examining the training process and relevant metrics

The way these metrics are gathered and analyzed varies with the machine learning use case and continues to change as more use cases emerge. ML analytics is a rapidly growing field, and it will only continue to grow.
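As a small example of comparing models to identify improvements, the sketch below scores a baseline and a candidate on the same labeled evaluation set and only recommends the candidate if it wins by a margin. Accuracy and the `min_gain` threshold are assumptions chosen for simplicity; the right metric depends on the use case.

```python
from typing import Sequence


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of predictions that match the labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def should_promote(
    baseline_preds: Sequence[int],
    candidate_preds: Sequence[int],
    labels: Sequence[int],
    min_gain: float = 0.01,
) -> bool:
    """Recommend the candidate only if it beats the baseline by at least min_gain."""
    gain = accuracy(candidate_preds, labels) - accuracy(baseline_preds, labels)
    return gain >= min_gain


labels = [1, 0, 1, 1, 0, 1, 0, 0]
baseline = [1, 0, 0, 1, 0, 0, 0, 1]   # 5 of 8 correct
candidate = [1, 0, 1, 1, 0, 0, 0, 0]  # 7 of 8 correct
print(accuracy(baseline, labels), accuracy(candidate, labels))  # 0.625 0.875
print(should_promote(baseline, candidate, labels))  # True
```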

User Needs

Just like in any software engineering project, client needs take precedence. The complex and rapidly evolving nature of machine learning necessitates constant communication with ML clients. This interaction helps engineers understand how the infrastructure can be tailored to meet their needs.

Engineers rely on client feedback to improve infrastructure, and clients depend on their engineers to provide the infrastructure necessary to achieve their goals. This symbiotic relationship between infra and clients is critical for advancing machine learning. Research teams can't make progress without robust support from the infrastructure team, and the infra team can't support them without their input.

Scalability and Reliability

While most software scales with user requests, ML infra scales with user requests, the number of models, training data volume, and even parameter count. In just the past year, we've seen models grow from 7 billion parameters to 70 billion. As models become larger, more experimentation is required, which in turn demands more robust infrastructure.
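A quick back-of-the-envelope calculation shows why parameter count matters to infrastructure. The sketch assumes 2 bytes per parameter (a 16-bit format) and a hypothetical accelerator with 80 GB of memory, and it counts only the weights; optimizer state, gradients, and activations add considerably more during training.

```python
def weight_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Memory needed just to hold the weights."""
    return num_params * bytes_per_param


def min_devices_for_weights(
    num_params: int, device_bytes: int, bytes_per_param: int = 2
) -> int:
    """Smallest number of devices whose combined memory fits the weights."""
    total = weight_bytes(num_params, bytes_per_param)
    return -(-total // device_bytes)  # integer ceiling division


GB = 10**9
DEVICE_BYTES = 80 * GB  # hypothetical accelerator memory

for params in (7 * 10**9, 70 * 10**9):
    size_gb = weight_bytes(params) // GB
    devices = min_devices_for_weights(params, DEVICE_BYTES)
    print(f"{params // 10**9}B params: {size_gb} GB of weights, at least {devices} device(s)")
# 7B params: 14 GB of weights, at least 1 device(s)
# 70B params: 140 GB of weights, at least 2 device(s)
```

Under these assumptions the larger model no longer fits on a single device, so the infrastructure has to handle splitting it across several.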

Every aspect of the system must be designed for scalability, even if it doesn't immediately require it. Future demands may require that scalability, and designing without it in mind could create bottlenecks that hinder the machine learning platform's operations later. This can result in considerable time and financial costs for researchers and developers.

Machine learning training is costly and time-consuming; any reliability issues that delay training can cost significant time and money (potentially millions in revenue). While reliability should be a priority in all software development, this principle is particularly important in ML infra where even minor latency can add up significantly across thousands of models during the training process.

Although revenue loss is a significant concern, time loss could be even more detrimental. In a competitive field like machine learning, there's a stark difference between research teams that experiment quickly and those that don't. Capable infrastructure is paramount for effective research efforts.

Automation

Automation plays a critical role because time equates to money. Manual intervention at every step is not only costly but also slows the infrastructure down while it waits for a human. Unlike humans, automation never stops, and unlike automation, humans are very expensive.

Any process that can be automated during training should be, but it must be done carefully to avoid harming model quality or training throughput. Automation itself is another element of the infrastructure that needs monitoring and maintenance. If implemented poorly, it can adversely affect the rest of the system. Thus, principles like reliability and scalability are doubly important for automation.
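One common piece of careful automation is retrying a failed step automatically instead of waiting for a person, while still giving up after a bounded number of attempts so a real problem reaches a human. The sketch below is a generic retry helper with exponential backoff; the function and parameter names are my own, and the `sleep` argument exists only so the waiting can be swapped out in tests.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    step: Callable[[], T],
    max_attempts: int = 3,
    base_delay_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run step, retrying on failure with exponential backoff between attempts."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to a human
            # Wait 1x, 2x, 4x, ... the base delay before trying again.
            sleep(base_delay_s * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

The bounded attempt count is the careful part: unbounded retries would hide a persistent failure and quietly burn compute.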

Executed correctly, automation yields significant benefits, but it requires careful planning and ongoing maintenance.

Throughput

The key objective of machine learning infrastructure is throughput. Machine learning involves training a model on vast amounts of data, which is time-consuming and resource-intensive. Idle compute resources (CPUs, GPUs, or TPUs) represent wasted time and money. However, constantly forcing processor uptime could lead to failures and retraining needs—both detrimental to throughput. Therefore, while increasing throughput is essential, it requires meticulous attention to detail and a comprehensive understanding of how the system operates to prevent creating more problems than solutions.

The most effective way to boost training throughput is to identify system bottlenecks and speed them up. This is a never-ending optimization task, because once one bottleneck is optimized, another piece of the system becomes the bottleneck. Knowledge of software systems, data center networks, and compute architecture is vital for finding bottlenecks and improving them.
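The moving-bottleneck effect is easy to see in a toy model. Assuming the stages of a training pipeline run concurrently in steady state, end-to-end throughput is capped by the slowest stage. The stage names and rates (in examples per second) below are made up for illustration.

```python
from typing import Dict, Tuple


def find_bottleneck(stage_throughput: Dict[str, float]) -> Tuple[str, float]:
    """Return the slowest stage and its rate, which caps end-to-end throughput."""
    name = min(stage_throughput, key=lambda stage: stage_throughput[stage])
    return name, stage_throughput[name]


# Hypothetical per-stage rates in examples per second.
stages = {"read_data": 1200.0, "preprocess": 800.0, "train_step": 1000.0}
print(find_bottleneck(stages))  # ('preprocess', 800.0)

# Doubling preprocessing speed does not double throughput:
# the training step becomes the new limit.
stages["preprocess"] = 1600.0
print(find_bottleneck(stages))  # ('train_step', 1000.0)
```

Doubling the slowest stage raised overall throughput only from 800 to 1000 examples per second, which is why the optimization work never really ends.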

Flexibility

Flexibility in machine learning infrastructure is intriguing because so many different solutions use ML. There are many ways infra can be optimized to improve model training, but the right optimizations differ depending on the problem clients need to solve. For a training platform, this introduces an interesting predicament:

Do we create a more general approach to model training that works for many different models but isn’t optimized for each?

Or do we optimize specifically for one primary use case?

The revenue from solving one primary use case can justify optimizing infrastructure for just that use case. It's an extremely interesting balancing act, and it explains why numerous ML infra platforms (each taking a different approach) exist across the tech industry.

What do I think?

Machine Learning Infrastructure is complex and it’s just as important as modeling and machine learning research for companies to achieve their ML breakthroughs. It’s possibly even more important for delivering quality machine learning algorithms to customers—the ultimate goal.

Machine learning infrastructure occupies a position between software engineering and machine learning. In this role, I've had the opportunity to learn something that neither general software engineers nor modelers get to focus on: the more practical side of machine learning.

During my college studies, I researched how machine learning can reduce medical imaging costs—a topic that is super important to me. My primary concern then was: How can I make the model perform better? I lacked an understanding of the practical side of using machine learning and how it's actually implemented in a production environment.

My perspective on machine learning research has evolved. Instead of solely focusing on improving a model, I consider making a model more accessible and anticipate the engineering challenges involved in making it widely available. I think beyond cutting-edge machine learning research toward how it can be applied to help people.

This is what makes machine learning infrastructure so fascinating. I get to stand with one foot in each of two super impactful realms of technology and share the things I learn with all of you.

If you have any questions, leave them in the comments below.


Awesome post, Logan! I was fascinated the whole way through. It's cool to see the way your discipline both differs and overlaps with my daily. Though the tech stacks are wildly different, we are both focused on enabling other developers to build amazing user-facing stuff on top of a set of primitives. I agree that staying nimble and using resources in an optimal manner really sets platform teams apart—I'm sure that's all the more true in ML infra where, as you say, resources are precious and experimentation is really at the fore.

Thanks for your insights—you're clearly an amazing technologist 👏

Thanks, Logan. I learned a lot. I know that because I don't know much about what you wrote about yet. Onto some learning!