Weekly Backend #6: 59 Resources and Updates, AI is Taking Off in Medicine

Google DeepMind releases AlphaFold 3, KANs, LLM Benchmarks are being looked at more critically, Apple is bringing their AI chips to data centers, StackOverflow partners with OpenAI, and more

Interestingly, there were a lot of AI updates this week and a lot of excellent AI in healthcare developments. I love seeing AI applications expanded, especially in an area that greatly needs it. I’m thinking the following weeks will have more resources as these updates are explained by the ML community in greater detail.

Welcome to the Weekly Backend! Here's a comprehensive list of the important ML resources and updates of the past week. Feel free to peruse them and read anything that's interesting to you. Leave a comment to let me know what you think. If you missed last week's updates and resources, you can check them out here.

Number of machine learning updates: 33

Number of machine learning resources: 26

You can get access to the complete list of resources, my notes, my feed, and even interesting job opportunities (coming soon!) by supporting Society's Backend for just $1/mo:

You can also get more updates and resources by following me on X and YouTube. Let's get started!

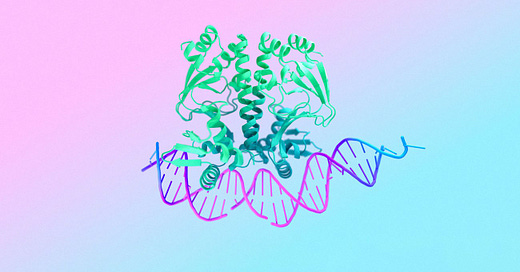

AlphaFold 3 predicts the structure and interactions of all of life’s molecules

AlphaFold 3 is a groundbreaking model that predicts the structure and interactions of all life's molecules with high accuracy. It helps scientists understand biological processes and accelerates drug discovery. AlphaFold Server, a free tool, allows researchers worldwide to access its capabilities easily. The model's predictions surpass existing systems and have the potential to revolutionize scientific research.

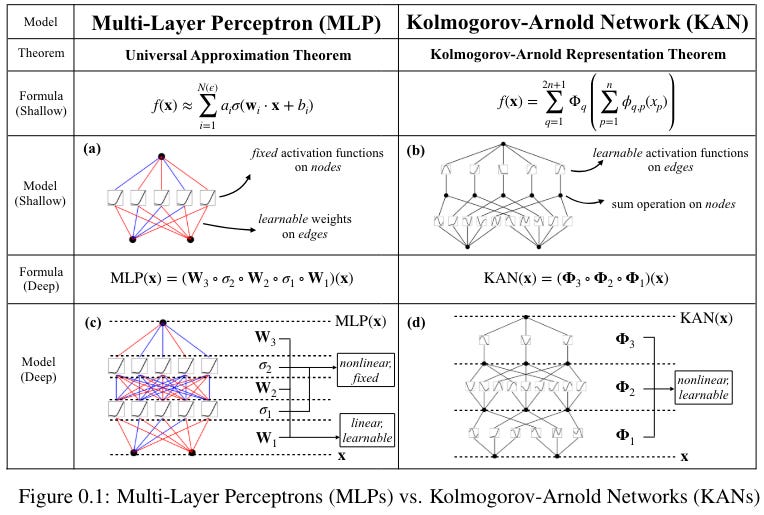

KAN: Kolmogorov-Arnold Networks

Ziming Liu, Yixuan Wang, Sachin Vaidya, Fabian Ruehle, James Halverson, Marin Soljačić, Thomas Y. Hou, Max Tegmark

KANs have learnable activation functions on edges, outperforming MLPs in accuracy and interpretability. They show faster neural scaling laws and can represent functions more effectively than MLPs. KANs leverage splines and MLPs' strengths while avoiding weaknesses. The training of KANs involves differentiable operations and backpropagation.
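To make "learnable activation functions on edges" concrete, here is a minimal sketch of a single KAN-style layer. It assumes each edge function is a piecewise-linear interpolant over a fixed grid, which is a simplification of the spline parameterization mentioned above. The names `EdgeFunction` and `kan_layer` are hypothetical and not taken from the paper's code, and training is omitted (in practice the knot values would be updated by backpropagation).

```python
from bisect import bisect_right


class EdgeFunction:
    """Learnable 1-D function on one edge (assumption: piecewise-linear)."""

    def __init__(self, grid, values):
        self.grid = list(grid)      # fixed, sorted knot positions
        self.values = list(values)  # learnable value at each knot

    def __call__(self, x):
        g, v = self.grid, self.values
        # Inputs outside the grid are clamped to the boundary values.
        if x <= g[0]:
            return v[0]
        if x >= g[-1]:
            return v[-1]
        k = bisect_right(g, x) - 1          # index of the knot left of x
        t = (x - g[k]) / (g[k + 1] - g[k])  # position between the two knots
        return (1 - t) * v[k] + t * v[k + 1]


def kan_layer(x, edges):
    """edges[j][i] is the function on the edge from input i to output j.

    Each output is a plain sum of edge functions applied to the inputs;
    there is no separate weight matrix or fixed node activation.
    """
    return [sum(phi(xi) for phi, xi in zip(row, x)) for row in edges]


grid = [-1.0, 0.0, 1.0]
identity = EdgeFunction(grid, [-1.0, 0.0, 1.0])  # behaves like f(x) = x
abs_like = EdgeFunction(grid, [1.0, 0.0, 1.0])   # behaves like f(x) = |x|
output = kan_layer([0.5, -0.5], [[identity, abs_like]])
```

An MLP would instead multiply by scalar weights on the edges and apply one fixed nonlinearity at each node; here the nonlinearity itself lives on the edge and is what gets learned.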

How overfit are popular LLMs on public benchmarks?

Alexandr Wang

New research from SEAL evaluates popular LLMs for overfitting on public benchmarks. Mistral and Phi show signs of overfitting, while GPT, Claude, Gemini, and Llama do not. The post praises the research team for their work. For more details, see the paper at arxiv.org/abs/2405.00332.

Apple is reportedly developing chips to run AI software in data centers

Dylan Butts

Apple is developing chips for AI software in data centers. The project has been ongoing for years with no clear timeline. Apple's chip may focus on AI inference, not training models. The company is investing significantly in AI technology.

We’re thrilled to announce we’re partnering with @OpenAI

Stack Overflow

Stack Overflow is partnering with OpenAI to combine technical knowledge and AI models. The partnership aims to empower technology development through collective knowledge. This collaboration will bring together the best in class resources for AI development. The announcement was made on x.com.

Ignore inductive biases at your own peril

Christoph Molnar

Inductive biases are essential in machine learning, guiding algorithms in selecting prediction functions from an infinite set of possibilities. Without these biases, machine learning would be unable to learn and make predictions, merely acting as a database. Choices in model features, architecture, and hyperparameters all introduce specific inductive biases, influencing the model's behavior and effectiveness. Ignoring inductive biases can lead to misunderstandings about a model's assumptions and its ability to handle new data.

Deep learning techniques for Hyperspectral image analysis in agriculture

Guest Contributor

Technological advancements like drones and deep learning are transforming agriculture rapidly. Hyperspectral imaging and deep learning are enhancing farming practices for increased efficiency and sustainability. These innovations enable early detection of diseases, precise pesticide application, and optimal water usage. Embracing these technologies can lead to reduced chemical use, conservation of water resources, and enhanced soil health in agriculture.

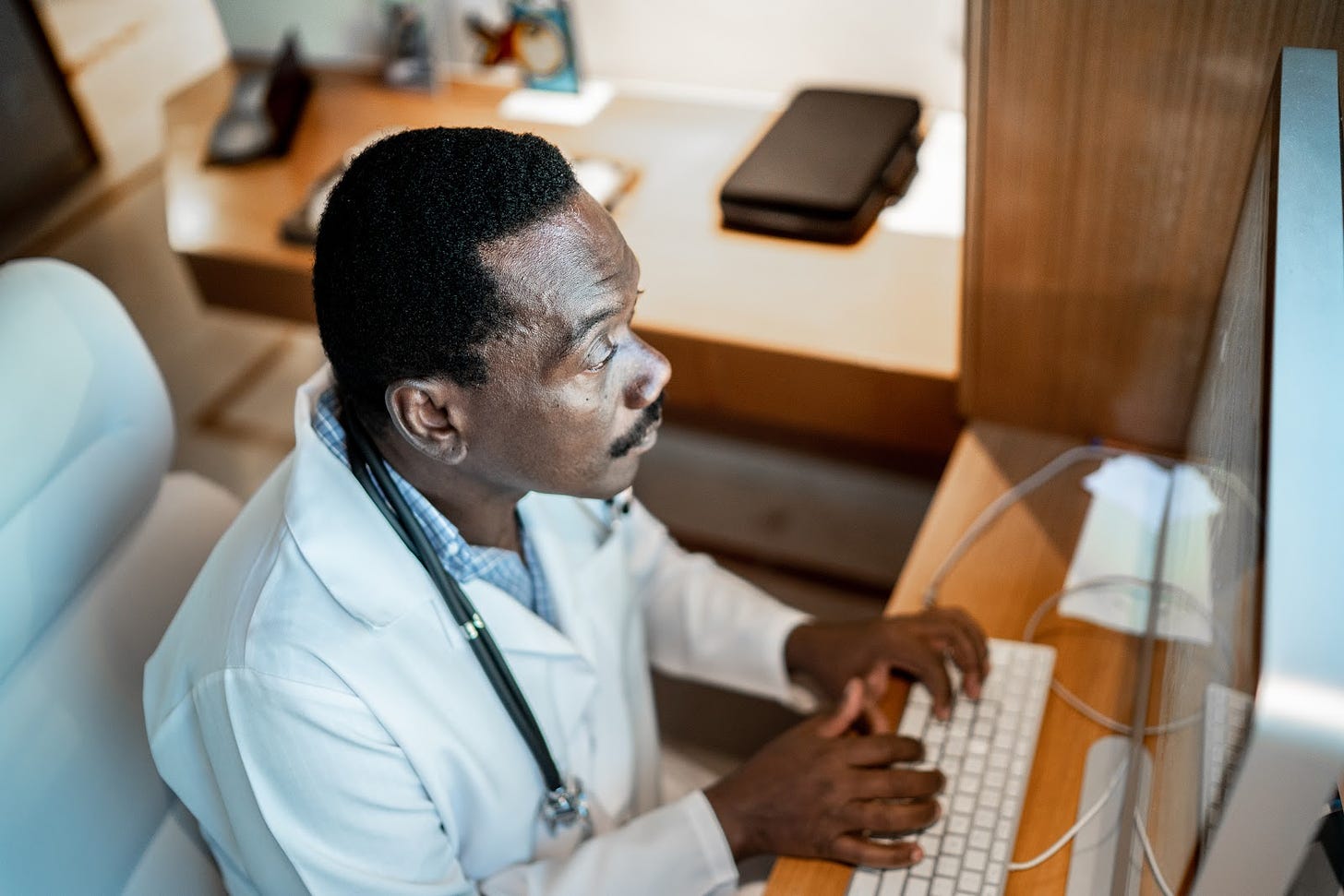

Large Language Models in Healthcare: Are We There Yet?

Stanford HAI

This article examines the potential of large language models (LLMs) in healthcare and the need for systematic evaluation. There is a gap between the promise of LLMs and their actual performance in healthcare settings. The authors highlight the importance of testing LLMs with real patient care data and evaluating them across a range of healthcare tasks. Efforts to standardize evaluation methods and address biases are crucial for maximizing the benefits of LLMs in healthcare.

📍Meet ChandlerAi, your all-in-one AI assistant

ChandlerAi is an all-in-one AI assistant for productivity and creativity. It offers features like chatting with AI models, AI-powered search, and creating presentations. You can access ChandlerAi on macOS, Windows, and the Chrome Web Store. Visit the website for more information.

xLSTM: Extended Long Short-Term Memory ~ Takeaway by Hand ✍️

Tom Yeh | AI by Hand ✍️

Sepp Hochreiter introduced xLSTM, a new version of LSTM, after 30 years. xLSTM includes innovations like sLSTM for Exponential Gating and mLSTM for Matrix Memory. The text explains these key improvements through a drawing. The goal is to help readers better understand the advancements in xLSTM compared to LSTM.

llmlingua - This really interesting lib from Microsoft can compress your prompt massively

Rohan Paul

LLMLingua is a tool from Microsoft that can significantly compress prompts, shrinking them by up to 20x. It speeds up LLM inference and reduces cost and latency while preserving key information. The tool removes unnecessary words while retaining essential information, and it improves support for longer contexts without requiring additional training of the LLM. LLMLingua also includes features for structured prompt compression and comprehensive information recovery.

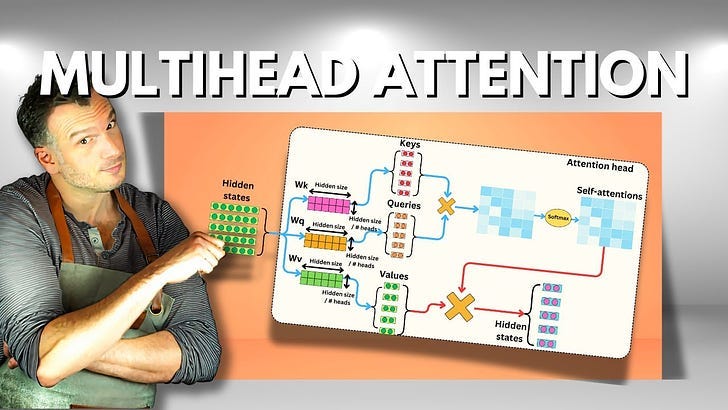

The Multi-head Attention Mechanism Explained!

Damien Benveniste

The multi-head attention mechanism runs multiple attention heads in parallel so that each head can capture different word interactions in a text. Each head's output is adjusted in size and then combined with the others for a comprehensive analysis. This method enhances the processing of input sequences by considering various aspects simultaneously. Adjusting the shapes of the internal matrices controls the dimensionality of the resulting data for efficiency.
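The parallel-heads idea can be sketched in a few lines of plain Python. This is an illustrative version of the standard formulation (scaled dot-product attention per head, concatenation, then an output projection), not code from the linked post. The function names, the random weights, and the sizes `d_model`, `n_heads`, and `d_head` are all assumptions chosen for the example.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_head(x, wq, wk, wv):
    """One head: project to queries/keys/values, then weight the values."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_head = len(q[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)  # token-to-token similarity
    weights = [softmax([s / math.sqrt(d_head) for s in row]) for row in scores]
    return matmul(weights, v)


def multi_head_attention(x, heads, wo):
    """Run every head on the same input, concatenate per token, project."""
    outputs = [attention_head(x, *h) for h in heads]
    concat = [sum((out[t] for out in outputs), []) for t in range(len(x))]
    return matmul(concat, wo)  # wo maps n_heads * d_head back to d_model


rng = random.Random(0)


def rand_matrix(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


d_model, n_heads, d_head = 4, 2, 2
heads = [tuple(rand_matrix(d_model, d_head) for _ in range(3)) for _ in range(n_heads)]
wo = rand_matrix(n_heads * d_head, d_model)
x = rand_matrix(3, d_model)             # 3 tokens, each a d_model vector
y = multi_head_attention(x, heads, wo)  # 3 output vectors of size d_model
```

Choosing `d_head = d_model / n_heads` keeps the concatenated size equal to `d_model`, which is one common way the matrix shapes are used to manage dimensionality.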

Active learning is one of the coolest techniques you'll use

Santiago

Active learning involves annotating a small portion of the data, training a model on it, and using that model to label more data, selecting the most informative samples to annotate next and improve the model. A model trained on selectively chosen data can perform as well as one trained on the entire dataset, though the iterative process takes time.
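Here is a toy sketch of that loop, assuming uncertainty sampling as the way to pick "the most informative" samples (the summary does not say which selection strategy the post uses). The 1-D threshold classifier, the `oracle` function standing in for a human annotator, and the 0.6 decision boundary are all hypothetical choices for illustration.

```python
def oracle(x):
    """Stand-in for a human annotator (hypothetical ground truth)."""
    return x > 0.6


def fit_threshold(labeled):
    """Train a 1-D classifier: midpoint between the two classes seen so far."""
    negatives = [x for x, y in labeled.items() if not y]
    positives = [x for x, y in labeled.items() if y]
    return (max(negatives) + min(positives)) / 2


def active_learning(pool, seed_points, budget):
    # Start from a small annotated seed set containing both classes.
    labeled = {x: oracle(x) for x in seed_points}
    for _ in range(budget):
        threshold = fit_threshold(labeled)
        unlabeled = [x for x in pool if x not in labeled]
        if not unlabeled:
            break
        # Most informative sample = the one the model is least sure about,
        # here the unlabeled point closest to the decision threshold.
        query = min(unlabeled, key=lambda x: abs(x - threshold))
        labeled[query] = oracle(query)  # ask the annotator for one label
    return fit_threshold(labeled), len(labeled)


pool = [i / 100 for i in range(101)]
threshold, n_labels = active_learning(pool, seed_points=[0.0, 1.0], budget=10)
```

In this toy setup the model ends up separating every point in the pool correctly after labeling only a small fraction of it, at the cost of retraining once per query.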

We’ve just passed 100 days since the first participant in our clinical trial

We’ve just passed 100 days since the first participant in our clinical trial received his Neuralink implant. Read Neuralink's latest progress update.

Russian Researchers Improve Neural Networks' Spatial Navigation Performance

Научно-образовательный портал IQ

Researchers improved neural networks' navigation performance using attention mechanisms, achieving a 15% increase in performance. They focused on reinforcement learning for navigating three-dimensional environments. The study used advanced reward shaping techniques and attention mechanisms to enhance neural network performance. The research aimed to optimize learning for graph neural networks by leveraging the attention mechanism.

Google just added their Google AI Essentials course below the search bar

AshutoshShrivastava

Google added their AI Essentials course below the search bar on their homepage. The course is for beginners and requires no experience. Google aims to educate users and make the course easily accessible.

Why are LLMs so versatile?

Alejandro Piad Morffis

Language models like GPT-4 are versatile because they're trained on massive amounts of data, making them capable of understanding and generating human-like text. Their effectiveness is further enhanced through additional fine-tuning and specific training techniques, which improve usability and interaction quality. Despite their complexity, at their core, they predict text continuations, acting like advanced autocomplete systems. However, making them practical involves overcoming challenges like bias and ensuring they're user-friendly.

The Top ML Papers of the Week

DAIR.AI

Top ML papers of the past week with summaries. These are the papers worth being familiar with. Links are included for further reading.

Efficient-Large-Model/VILA

VILA is a visual language model trained with image-text data, offering video and multi-image understanding capabilities. VILA-1.5 release includes different model sizes for efficient deployment on NVIDIA GPUs. The model's performance is evaluated on various benchmarks, showcasing its strengths in image and video tasks. VILA models can be quantized for edge deployment and come with pretraining and fine-tuning steps for training.

LLM Roadmap: A step-by-step project-based LLM roadmap to mastering large language models

Prashik Hingaspure

The text discusses a step-by-step roadmap for mastering Large Language Models (LLMs), emphasizing the importance of foundational skills like Python programming and data analytics. Learning LLMs can lead to diverse applications, career growth, and innovation across industries. The roadmap includes mastering basics, exploring pre-trained models, working on hands-on projects, and connecting with a community to enhance learning and retention. Implementing best practices like regular engagement, hands-on practice, and exploring interactive platforms can help develop a strong skill set for real-world applications in natural language processing.

We reversed the Rabbit R1 🐇

Rithwik Jayasimha

The Rabbit R1 app was successfully reverse-engineered to run on Android phones without root access. The process involved analyzing the firmware and patching tools for OTA updates. The project team collaborated to overcome challenges and created a script to handle future updates. The post closes with a shoutout to the team members involved.

Yellow.ai debuts industry's first Orchestrator LLM, delivering contextual, human-like customer conversations without training

Yellow.ai has introduced Orchestrator LLM, a cutting-edge AI model that improves customer conversations by understanding intent and context. This innovation leads to faster issue resolution and a significant boost in customer satisfaction. Orchestrator LLM excels in context switching and requires zero manual training, resulting in a 60% decrease in operational costs. Yellow.ai aims to revolutionize customer service by providing personalized and efficient AI-driven solutions.

Sora AI Breaks Ground with Washed Out Music Video

A music video for "The Hardest Part" by Washed Out was generated with OpenAI's Sora and is described as the first of its kind. Directed by Paul Trillo, it was assembled from 55 clips drawn from 700 Sora generations, sparking conversations about AI in music. Critics noted room for improvement in AI-generated content despite the milestone. The release marks a significant step in blending AI technology with the creative arts.

Finetuning Transformer Models

Codecademy offers a course on finetuning transformer models. It covers practical skills like preparing data, using tools like LoRA and QLoRA, and optimizing models efficiently. Learners can gain hands-on experience with techniques like parameter-efficient finetuning and 4-bit quantization. The course is designed for intermediate learners and takes about 2 hours to complete.

ChatBotArena: The peoples’ LLM evaluation, the future of evaluation, the incentives of evaluation, and gpt2chatbot

Nathan Lambert

ChatBotArena is a platform where different chat models are compared head-to-head, aiming to identify the best language model by public evaluation. It is appreciated for filling a niche in the community by offering a simple and intuitive way for evaluating chatbots' performance. However, ChatBotArena faces criticism for providing limited feedback for model improvement and being more of an entertainment or market analysis tool than a rigorous evaluation method. Despite its limitations, it offers valuable insights into language model performance, attracting attention from model providers and the AI community.
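One way to turn head-to-head votes into a leaderboard is an Elo-style rating update, sketched below. This is an assumption for illustration only: the summary above does not say which rating method the platform uses, and the model names, the starting rating of 1000, and the `k` factor are hypothetical.

```python
def expected_score(rating_a, rating_b):
    """Probability that A beats B under the Elo model."""
    return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))


def record_vote(ratings, winner, loser, k=32):
    """Shift ratings after one pairwise vote; surprising wins move them more."""
    gain = k * (1 - expected_score(ratings[winner], ratings[loser]))
    ratings[winner] += gain
    ratings[loser] -= gain


# Each vote is (preferred model, other model) from one user comparison.
votes = [
    ("model_a", "model_b"),
    ("model_a", "model_c"),
    ("model_b", "model_c"),
    ("model_a", "model_b"),
]

ratings = {name: 1000.0 for name in ("model_a", "model_b", "model_c")}
for winner, loser in votes:
    record_vote(ratings, winner, loser)

leaderboard = sorted(ratings, key=ratings.get, reverse=True)
```

A single scalar rating like this is easy to read, which fits the platform's appeal, but it also illustrates the criticism above: a win or loss says little about why one answer was preferred.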

A notebook to help classify SPAM messages

Sebastian Raschka

Sebastian Raschka shared a notebook to help classify SPAM messages with 96% accuracy. The notebook is small enough to train on a laptop in about 5 minutes. You can find the notebook on github.com/rasbt/LLMs-fro. It's a great coding and reading project for the weekend.

Why MLX is Important for the ML Community

Logan Thorneloe

MLX is a machine learning framework designed for Apple Silicon, making it easier for researchers and hobbyists to run models on Macs. It simplifies machine learning by using syntax similar to NumPy and focuses on research rather than production deployment. The framework increases accessibility to machine learning, especially for those previously hindered by the need for specific hardware like Nvidia graphics cards. The article provides a guide to setting up a simple neural network with MLX, highlighting its potential to democratize machine learning further.

Elastic Security Labs Releases Guidance to Avoid LLM Risks and Abuses

Elastic Security Labs shared a guide to help avoid risks with large language models (LLMs). The guide offers best practices and countermeasures to prevent LLM abuses. It aims to help organizations safely adopt LLM technology and protect against potential attacks. Elastic encourages industry-wide use of their research and detection rules to enhance security practices.

ScrapeGraphAI: You Only Scrape Once

ScrapeGraphAI is a web scraping tool that uses LLMs for data extraction. It has gained popularity in part because it works with LLM providers such as Ollama. The tool is a Python library and can be found on GitHub. It emphasizes scraping data efficiently in one go.
