Weekly Backend #5: 55 Resources

The inner workings of transformers, Machine Learning Q and AI, machine learning classifies harmful viruses, and more

Welcome to the Weekly Backend! Here's a comprehensive list of the important ML resources and updates of the past week. Feel free to peruse them and read anything that's interesting to you. If you missed last week's updates and resources, you can check them out here.

This week I’ve added the author to each post where applicable to make sure I’m giving credit where due. I also think it’s important for you, the reader, to know the source of the information.

Number of machine learning updates: 26

Number of machine learning resources: 29

You can get access to the complete list of resources, my notes, my feed, and even interesting job opportunities (coming soon!) by supporting Society's Backend for just $1/mo.

You can also get more updates and resources by following me on X and YouTube.

All images are pulled from the author's work. If you are an author and would like your images or work removed, let me know. Let's get started!

Inner Workings of Transformer Language Models

Author: elvis

This paper explains how Transformer language models work. It discusses techniques for understanding their inner workings. Elvis provides insights into the internal mechanisms of these models.

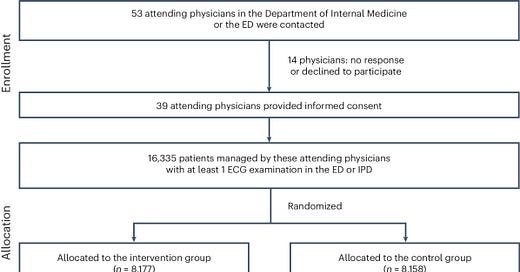

AI-enabled electrocardiography alert intervention and all-cause mortality: a pragmatic randomized clinical trial

Author: Division of Cardiology, Department of Internal Medicine, Tri-Service General Hospital, National Defense Medical Center, Taipei, Taiwan, Republic of China

The study tested AI-enabled ECG alerts to identify high-risk patients in hospitals. Implementation of AI-ECG alerts led to reduced all-cause mortality within 90 days. Patients with high-risk ECGs benefited the most from the AI alerts. The results suggest that AI assistance can help detect high-risk patients promptly and improve clinical care outcomes.

Deep Learning Approaches for Medical Image Analysis and Diagnosis

Author: Gopal Kumar Thakur

Deep learning techniques improve medical image analysis accuracy and patient outcomes. Collaboration between clinicians, data scientists, and industry is essential for maximizing deep learning potential. Deep learning models like CNNs revolutionize medical imaging by detecting abnormalities and improving diagnosis efficiency. Future research aims to address challenges and enhance deep learning impact in healthcare.

The Disinformation Machine: How Susceptible Are We to AI Propaganda?

Author: Dylan Walsh

AI-generated propaganda has been found to be more effective than human-created propaganda, especially when humans tweak the AI's output. Research by Stanford's Michael Tomz and colleagues showed that AI propaganda can significantly increase agreement with certain statements. However, its impact might be less on issues where people already have strong opinions, like in elections. The study also warns that AI's ability to produce convincing fake audio and visuals presents a growing challenge in distinguishing real from fake content.

Quickly start your chat with Gemini using the new shortcut...

You can chat with Gemini quickly using a new shortcut in Chrome. Just type "@" in the address bar and select Chat with Gemini. Write your message, and then get your response on gemini.google.com. It's a simple and quick way to start chatting with Gemini.

Building a RAG application from scratch using Python, LangChain, and the OpenAI API

Author: Underfitted

This tutorial explains how to build a retrieval-augmented generation (RAG) application using Python, LangChain, and the OpenAI API. It involves setting up a model, creating prompts, and generating answers. The process includes tokenization, context passing, and using embeddings for text analysis.
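To make the retrieve-then-prompt idea concrete, here is a minimal sketch in plain Python. It is not the tutorial's code and does not call LangChain or the OpenAI API. The word-count `embed` function is a toy stand-in for a real embedding model, and every function name and sample document here is hypothetical.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, context_chunks):
    """Pass the retrieved context to the model alongside the question."""
    context = "\n".join(context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical mini knowledge base.
documents = [
    "Temperature controls randomness in language model sampling.",
    "Vector databases index embeddings for similarity search.",
    "GPT-2 can be trained with plain C code.",
]
question = "What controls randomness in sampling?"
top = retrieve(question, documents, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

The last step, sending `prompt` to a language model, is left out on purpose, since it depends on the provider you use.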

gkamradt/LLMTest_NeedleInAHaystack

Author: gkamradt

This repository provides a testing method called 'Needle In A Haystack' for evaluating long context language models. It includes instructions on setting up the testing environment, running tests on different model providers, and visualizing results. The project allows for testing multiple needles in the context and using LangSmith for evaluation.

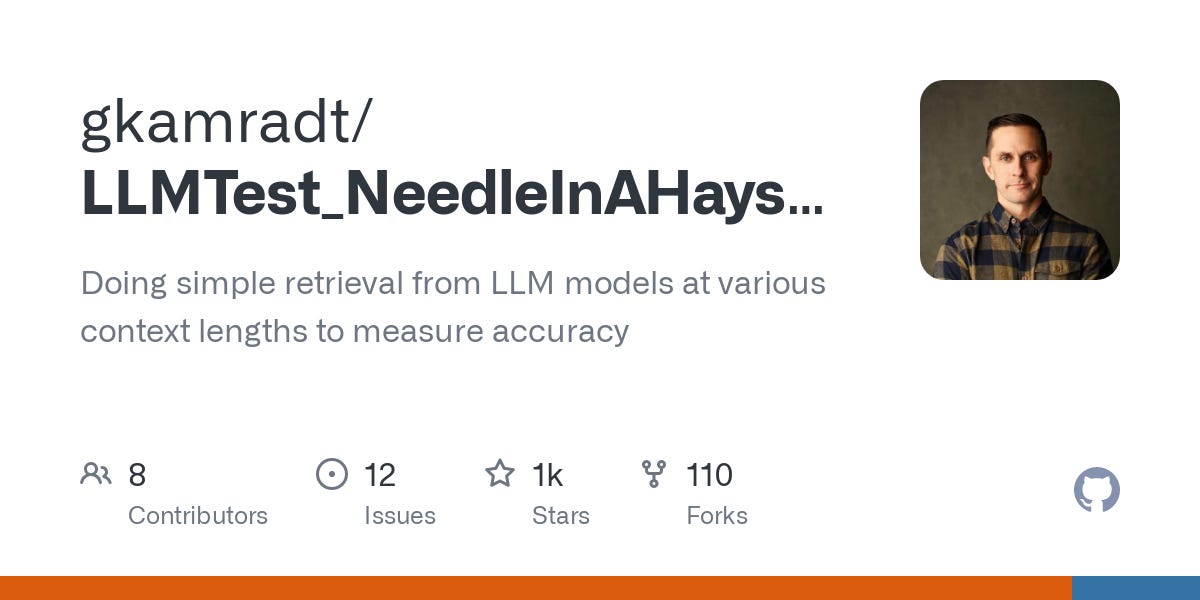

An introduction to vectorization

Author: Tivadar Danka

The simple mathematical expression "ax + b" is foundational in machine learning, evolving into complex neural networks through generalization and abstraction. The post discusses vectorization, a key process that allows handling multiple variables efficiently in machine learning models, improving computational speed and enabling the analysis of high-dimensional data. By extending from a single predictor and target variable to multiple ones, the model becomes more versatile, applying to real-world scenarios like predicting real estate prices or microbial culture processes. This progression from basic linear regression to sophisticated models illustrates the power of mathematics in developing advanced machine learning algorithms.
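Here is a rough sketch of that progression from one predictor to many. It uses plain Python lists so the shape of the generalization stays visible. In practice an array library would do the same arithmetic much faster. The function names and the house-price numbers are made up for illustration and do not come from the post.

```python
def predict_scalar(a, x, b):
    """One predictor, one target: y = a*x + b."""
    return a * x + b

def predict_vector(w, x, b):
    """Several predictors, one target: y = w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def predict_matrix(W, x, b):
    """Several predictors, several targets: y = W x + b (one row of W per target)."""
    return [predict_vector(row, x, bi) for row, bi in zip(W, b)]

# Hypothetical house described by [area in square meters, number of rooms].
house = [120.0, 3.0]
price = predict_vector([2000.0, 10000.0], house, 50000.0)
print(price)  # 320000.0
```

Each step keeps the same "multiply, then add" structure as ax + b. Only the shapes of the inputs and weights change.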

Announcing Hermes 2 Pro on Llama-3 8B!

Author: Nous Research

Nous Research has released the Hermes 2 Pro model on Llama-3 8B. This model offers new capabilities like Function Calling and Structured Output. It outperforms other models on various evaluation benchmarks. The Hermes Pro models are a result of collaboration among several individuals.

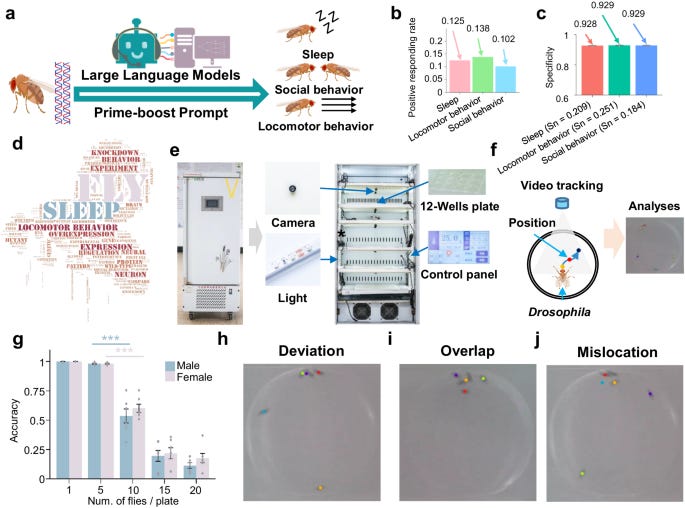

Large-language models facilitate discovery of the molecular signatures regulating sleep and activity

Author: Di Peng et al.

Researchers used large-language models to identify genes regulating sleep and activity in fruit flies, discovering specific genes like mre11 and NELF-B. Through genome-wide interpretation and RNA interference screening, they pinpointed genes involved in sleep, locomotor, and social activity regulation. The study revealed molecular signatures controlling these behaviors and highlighted the role of proteins like Dop1R1 in sleep regulation. This research provides insights into the genetic basis of sleep and activity in fruit flies.

China's recent major LLM developments

Author: Rowan Cheung

China has made significant advancements in AI development. Recent developments include a Sora competitor and a humanoid robot. SenseTime's SenseNova 5.0 outperforms GPT-4 Turbo on various benchmarks. China's progress in AI is noteworthy but often overlooked.

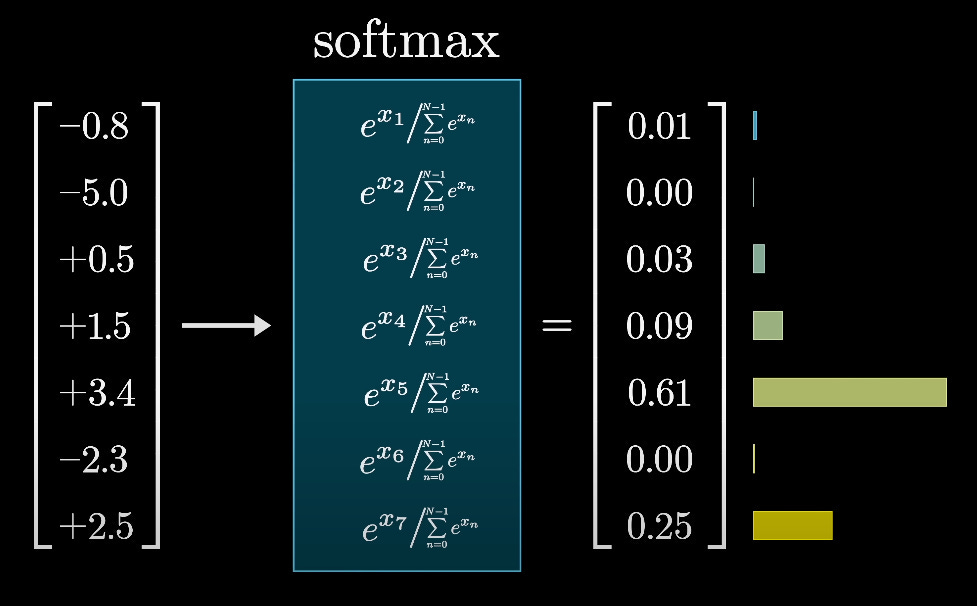

What you need to understand about LLM creativity

Author: Logan Thorneloe

Machine learning uses math in simple, beautiful ways to enhance creativity in large language models (LLMs) by adjusting "temperature," which controls randomness. The "softmax function" helps LLMs predict the next word by creating a probability distribution, where higher temperature settings increase randomness and can make outputs seem more creative. However, too high a temperature can negatively impact model performance. Understanding the role of temperature in LLMs is crucial for effectively using these models, as it allows users to tweak the level of creativity in responses.

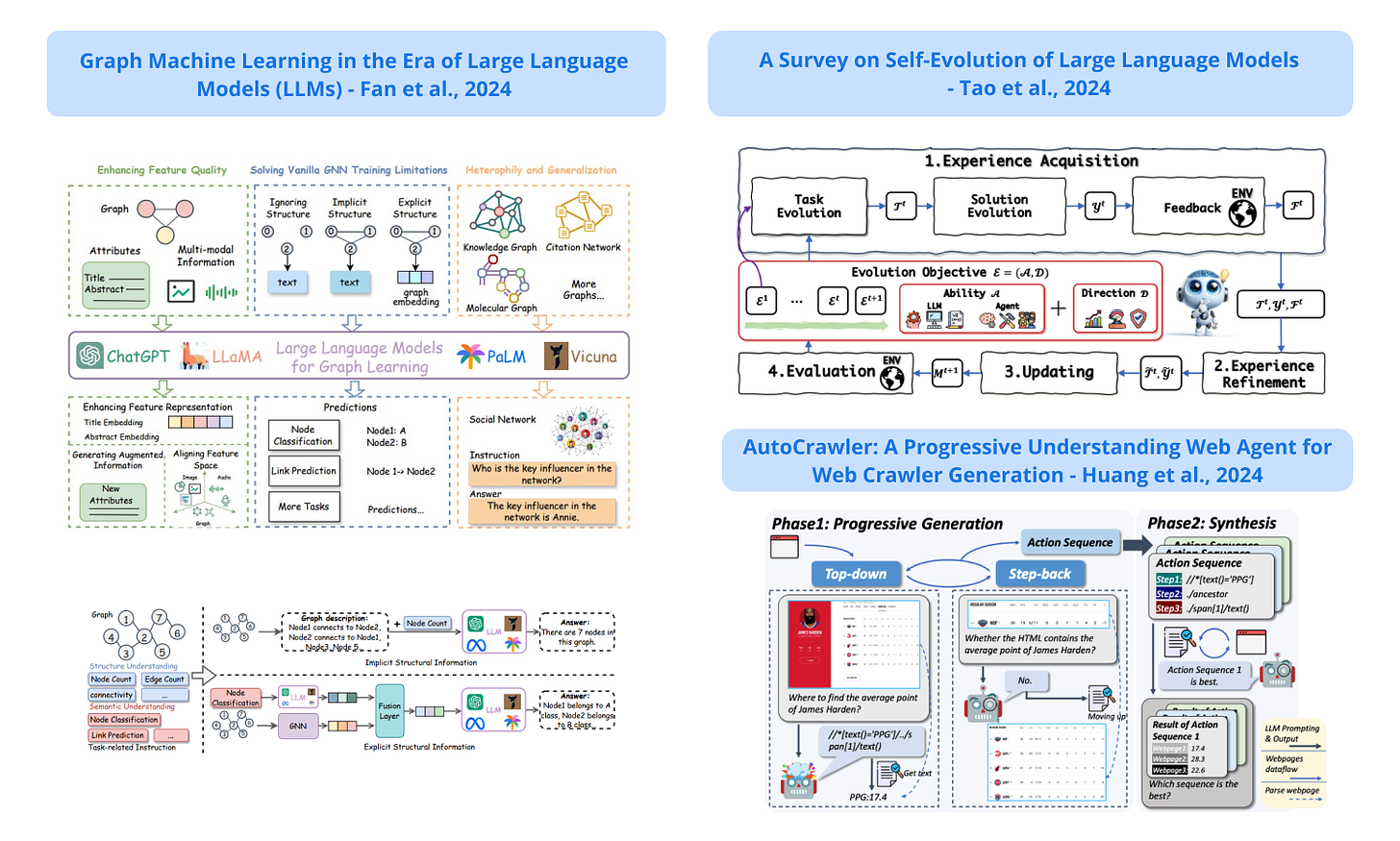
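The temperature-scaled softmax described in the summary of this post can be sketched in a few lines of Python. This is a minimal illustration rather than code from the article. The logits are hypothetical scores for three candidate next tokens.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability.
    largest = max(scaled)
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for three candidate next tokens.
logits = [2.0, 1.0, 0.1]
sharp = softmax_with_temperature(logits, temperature=0.5)
neutral = softmax_with_temperature(logits, temperature=1.0)
flat = softmax_with_temperature(logits, temperature=2.0)

for name, probs in [("T=0.5", sharp), ("T=1.0", neutral), ("T=2.0", flat)]:
    print(name, [round(p, 3) for p in probs])
```

A lower temperature concentrates probability on the top token, while a higher temperature spreads it across the alternatives, which is where the extra randomness comes from.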

🥇Top ML Papers of the Week

Author: elvis

This week's top ML papers introduced innovations like phi-3, a powerful language model with a context length up to 128K, and OpenELM, which improves accuracy with fewer parameters. Arctic, a cost-efficient, open-source model, competes with larger models using less compute. Techniques to enhance LLMs' context utilization and a large-scale web dataset, FineWeb, aimed at improving data quality for training, were also highlighted. Additionally, advancements in AI-powered gene editing and new methods for web crawling and graph machine learning showcase the expanding applications of LLMs.

Machine Learning Q and AI: 30 Essential Questions and Answers on Machine Learning and AI

Author: Sebastian Raschka

Learn advanced machine learning and AI concepts through a question-and-answer format. Author Sebastian Raschka simplifies complex topics for easy understanding. Explore various AI topics and practical applications to enhance model performance. Elevate your machine learning knowledge with clear explanations and hands-on exercises.

💾 LLM Datasets

Author: Maxime Labonne

Creating good datasets is central to LLM development, with Llama 3 given as an example. A collection of datasets and tools is available for fine-tuning and creating new datasets. More information can be found on GitHub.

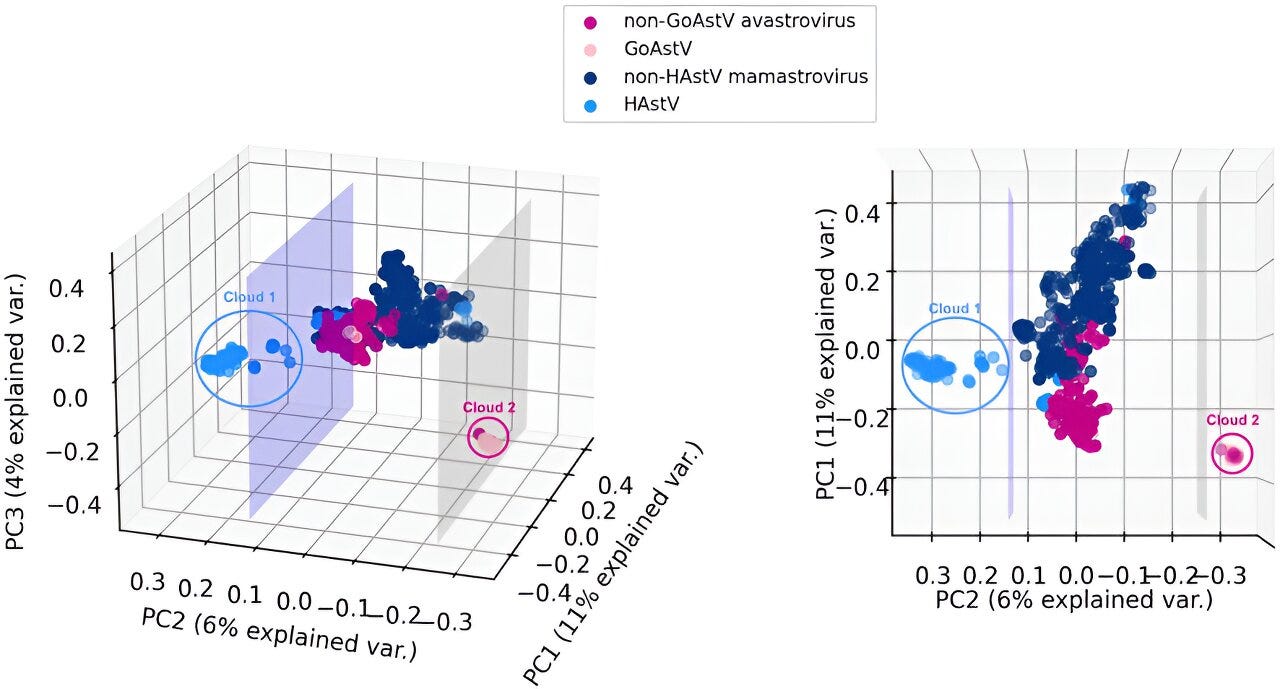

Machine learning classifies 191 of the world's most damaging viruses

Author: University of Waterloo

Researchers at the University of Waterloo used machine learning to identify 191 new astroviruses. Astroviruses are harmful viruses that cause diarrhea and impact both humans and animals. The new classification method can help develop vaccines and understand virus transmission. This research was published in Frontiers in Molecular Biosciences.

What do organizations need to be successful at Machine Learning? [Breakdowns]

Author: Devansh

Organizations aiming for success in Machine Learning (ML) need to focus on the comprehensive roles of ML Engineers, including data preparation, model building, deployment, and maintenance. Effective ML operations (MLOps) rely on rapid prototyping (Velocity), early error detection (Validation), and efficient model and data management (Versioning). A culture of experimentation, collaboration with experts, and data-driven iteration is essential for advancing ML projects. Continuous model evaluation, leveraging dynamic datasets and standardized validation systems, is crucial for sustaining model performance and aligning with business goals.

UChicago scientists use machine learning to turn cell snapshots dynamic

Author: University of Chicago News

UChicago scientists use machine learning to study cell changes over time. They developed a new method called TopicVelo to analyze snapshots from single-cell RNA sequencing. This method helps researchers understand how cells and genes evolve dynamically. By combining stochastic and topic modeling, they can reconstruct trajectories and enhance research in cancer and immunology.

Foundations of Vector Retrieval

Author: Sebastian Bruch

Vectors represent various types of data and are crucial for retrieval tasks. This text discusses fundamental concepts and advanced techniques for finding similar vectors. It aims to make the topic more accessible to a wider audience. Sebastian Bruch provides insights into vector retrieval in this monograph.

Introducing the Claude Team plan and iOS app

Anthropic introduces a new Claude Team plan for collaborative workspaces and an iOS app for convenient access. The Team plan offers increased usage, advanced AI models, and admin tools for $30 per user per month. The iOS app offers seamless syncing and vision capabilities, and is free for all users. Anthropic aims to enhance productivity and collaboration for individuals and teams across industries.

Train A GPT-2 LLM, Using Only Pure C Code

Author: Donald Papp

Andrej Karpathy released llm.c, a project for training LLMs in pure C that shows how simple these tools can be to work with. GPT-2 is an early large language model in the direct lineage of modern versions. llm.c simplifies training by implementing the neural network algorithm directly in C. The project offers a focused and concise approach that runs efficiently on CPUs or GPUs.

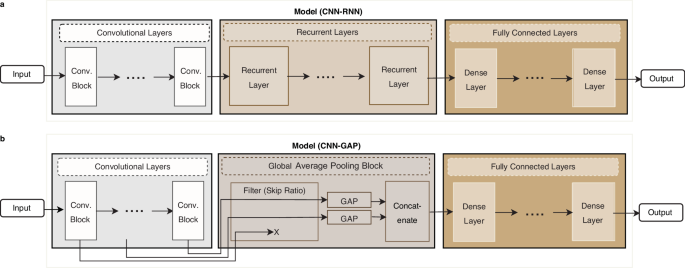

Optimized model architectures for deep learning on genomic data

Author: Hüseyin Anil Gündüz et al.

GenomeNet-Architect is a framework that optimizes deep learning models for genomic data. It reduces misclassification rates, speeds up inference, and requires fewer parameters compared to existing baselines. By automatically designing neural architectures for genomic sequences, it outperforms expert-designed models and enhances pathogenicity detection. Researchers can utilize GenomeNet-Architect to tailor model architectures for optimal performance on specific genomic datasets.

Understanding How Vector Databases Work!

Author: Damien Benveniste

Vector databases index data using vectors, enabling fast searches for similar items, crucial for AI applications like recommender systems. They use algorithms like Product Quantization and Locality-sensitive hashing to efficiently find nearest neighbors, reducing memory space and search time. Popular vector databases include Milvus, Qdrant, and Weaviate, each offering unique features for AI and search applications. These databases support scalability, precise queries, and integration with AI tools, making them essential for handling complex data searches.
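As a rough illustration of locality-sensitive hashing, here is a small random-hyperplane sketch in plain Python. It is one common LSH variant and not necessarily what any of the databases named above implement. All function names and the sample vectors are hypothetical.

```python
import random

def make_hyperplanes(num_planes, dim, seed=0):
    """Draw random hyperplanes (as normal vectors) with a fixed seed."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]

def lsh_signature(vector, hyperplanes):
    """One bit per hyperplane: which side of the plane the vector falls on."""
    return tuple(
        1 if sum(p * v for p, v in zip(plane, vector)) >= 0 else 0
        for plane in hyperplanes
    )

def build_index(vectors, hyperplanes):
    """Group vector ids into buckets keyed by their signature."""
    buckets = {}
    for vec_id, vec in vectors.items():
        buckets.setdefault(lsh_signature(vec, hyperplanes), []).append(vec_id)
    return buckets

def candidates(query, buckets, hyperplanes):
    """Return only the ids that share the query's bucket."""
    return buckets.get(lsh_signature(query, hyperplanes), [])

hyperplanes = make_hyperplanes(num_planes=8, dim=3, seed=42)
vectors = {"a": [1.0, 2.0, 3.0], "b": [-1.0, -2.0, -3.0]}
index = build_index(vectors, hyperplanes)

# A query pointing in the same direction as "a" lands in the same bucket.
print(candidates([2.0, 4.0, 6.0], index, hyperplanes))
```

Vectors that point in similar directions tend to share a signature, so a search only has to compare the query against one bucket instead of every stored vector. The trade-off is that some true neighbors can fall into other buckets and be missed.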

3 things we learned from professional creatives about their hopes for AI

Author: Ayça Çakmakli

Over the past 18 months, AI has helped and inspired creative professionals, but there are concerns about its impact on their work. Creative professionals appreciate AI for expanding their skills and aiding in expression but don't want it to replace their creative process. They see value in AI managing non-creative tasks, allowing them to focus more on creativity. Insights from these professionals are guiding the development of AI tools that better meet their needs.

How Good Are Low-bit Quantized LLaMA3 Models? An Empirical Study

Author: Wei Huang et al.

LLaMA3 models show impressive performance with super-large scale pre-training. Researchers explore how well LLaMA3 models perform when quantized to low bit-width. Results reveal significant performance degradation, especially in ultra-low bit-width scenarios. The study aims to improve future models for practical use.
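To get a feel for why very low bit-widths hurt, here is a toy sketch of symmetric round-to-nearest quantization in plain Python. It is a generic textbook scheme, not necessarily any of the methods evaluated in the paper. The weights are made-up numbers and the function names are hypothetical.

```python
def quantize(weights, bits):
    """Symmetric round-to-nearest quantization to signed integers of `bits` bits."""
    if bits < 2:
        raise ValueError("this sketch needs at least 2 bits")
    levels = 2 ** (bits - 1) - 1  # e.g. 127 for 8-bit, 7 for 4-bit, 1 for 2-bit
    largest = max(abs(w) for w in weights)
    scale = largest / levels if largest else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Map the integers back to approximate floating-point weights."""
    return [q * scale for q in quantized]

def mean_abs_error(weights, bits):
    """Average absolute difference between original and restored weights."""
    quantized, scale = quantize(weights, bits)
    restored = dequantize(quantized, scale)
    return sum(abs(w - r) for w, r in zip(weights, restored)) / len(weights)

# Made-up weights, only for illustration.
weights = [0.9, -0.35, 0.12, 0.05, -0.7]
for bits in (8, 4, 2):
    print(bits, "bits -> mean abs error", round(mean_abs_error(weights, bits), 4))
```

With fewer bits there are fewer representable values, so more weights get rounded far from where they started. Real quantization methods work hard to limit that damage.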

Deep Learning and Neural Networks Drive a Potential $7.9 Trillion AI Economy

Author: USA News Group

AI technology, particularly generative AI, is projected to contribute $7.9 trillion annually to the global economy. Companies like Tesla, Accenture, Palantir, and ServiceNow are advancing AI capabilities in various sectors. Scope AI Corp. has broadened its focus from agriculture to digital marketing and online gaming with its GEM technology. Leaders like Elon Musk are pushing boundaries by aiming for Artificial General Intelligence (AGI) and innovative applications like using Tesla's EV fleet for cloud computing services.

Biden administration taps tech CEOs for AI safety and security board

Author: David Ingram

The Biden administration formed an advisory board to study protecting critical infrastructure from AI threats. Tech CEOs like Sam Altman and Sundar Pichai are on the board. The board will develop recommendations to prevent disruptions to national security caused by AI. Homeland Security aims to mitigate AI risks by adopting best practices.

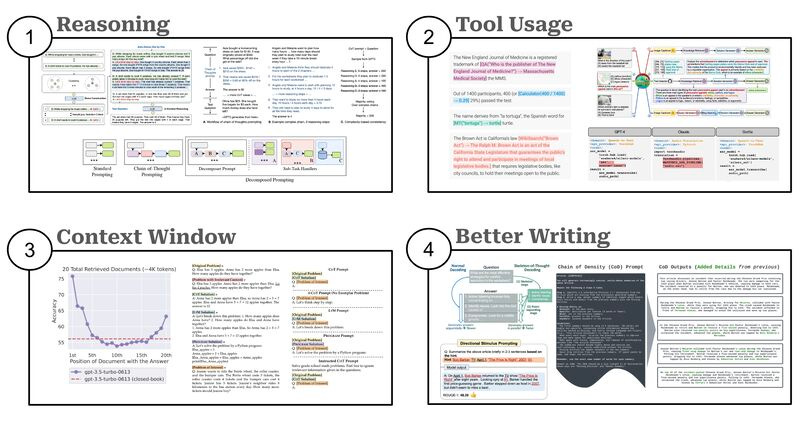

Cameron R. Wolfe, Ph.D.’s Post

Author: Cameron R. Wolfe, Ph.D.

Cameron R. Wolfe, Ph.D., discusses advancements in prompt engineering for AI in four key areas: reasoning, tool usage, context window, and better writing. Research focuses on improving AI's ability to solve multi-step reasoning problems and utilize external tools effectively. Studies show the importance of context windows and in-context learning for enhancing AI performance. AI can also be leveraged to improve human writing through prompt engineering techniques.
