3 Key Principles for AI at Scale [Part 2]

The key to how large AI companies outcompete

This is the second in a two-part series. This article assumes you have the knowledge shared in the first. If you haven’t read it yet, check it out at A Fundamental Overview of Machine Learning Experimentation [Part 1]. Subscribe so you don’t miss the next article in this series.

Don’t forget to follow me on X and LinkedIn for more frequent tidbits about machine learning engineering and all things AI.

This is an article I’ve wanted to write for a looooong time because this is exactly what I work on at Google. Everyone knows compute is a necessity for effective machine learning, but too few people realize it’s the bare minimum.

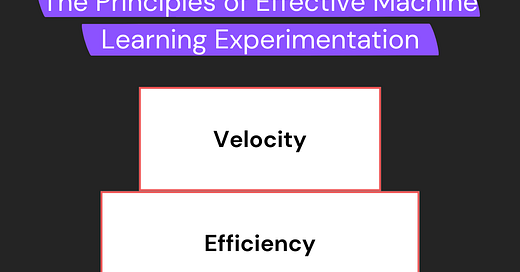

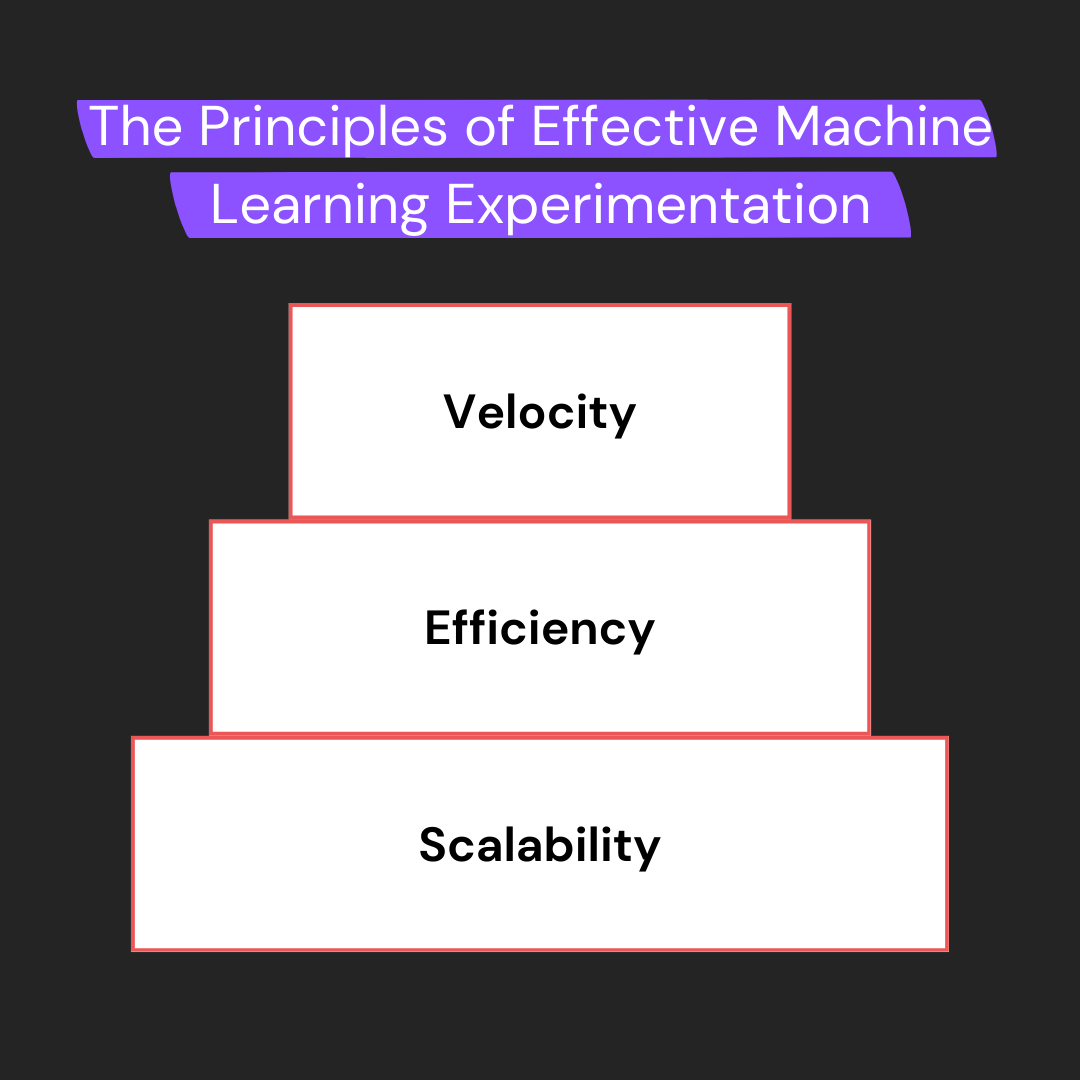

I’m going to get into the three fundamental building blocks for successful machine learning at scale:

Scalability

Efficiency

Velocity

These are all crucial aspects required for effective machine learning experimentation. Let’s jump in!

Scalability

This is the simplest principle and is easily understood after reading part 1. The first key principle for AI at scale is actually being able to complete the machine learning experimentation process.

This means having the compute and necessary infrastructure to train many models to test multiple hypotheses for model improvements. When most people discuss scaling up AI, they only mention having more compute. In reality, that is only the first step to achieve AI at scale.

The important question regarding scalability is: “Can I train enough models to experiment effectively?”

The first step in this process is getting enough compute, and the second step is ensuring you can scale up model experimentation with the compute you have. This includes scheduling training and having the infrastructure to run models through the data, training, and serving pipelines.

It’s important to note that scalability also includes reliability. Reliable model training is required to scale up AI; otherwise, failed experiments will reduce the number of experiments you can complete with a given amount of compute.
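To make the reliability point concrete, here is a rough back-of-the-envelope sketch. It assumes every run costs the same number of compute hours, that runs fail independently at a fixed rate, and that a failed run burns some fraction of its compute before failing. The function name and all parameters are hypothetical and only meant for illustration.

```python
def effective_experiments(budget_hours, hours_per_run, failure_rate, wasted_fraction=1.0):
    """Expected number of successful runs from a fixed compute budget.

    Assumptions (illustrative only):
      - every run needs `hours_per_run` compute hours to succeed
      - each run fails independently with probability `failure_rate`
      - a failed run consumes `wasted_fraction` of a full run's compute
    """
    if hours_per_run <= 0:
        raise ValueError("hours_per_run must be positive")
    if not 0.0 <= failure_rate < 1.0:
        raise ValueError("failure_rate must be in [0, 1)")
    if not 0.0 <= wasted_fraction <= 1.0:
        raise ValueError("wasted_fraction must be in [0, 1]")

    success_rate = 1.0 - failure_rate
    # Average compute spent per attempt, counting partial work lost to failures.
    expected_cost = hours_per_run * (success_rate + failure_rate * wasted_fraction)
    expected_attempts = budget_hours / expected_cost
    return expected_attempts * success_rate


if __name__ == "__main__":
    for rate in (0.0, 0.1, 0.2, 0.4):
        runs = effective_experiments(1000, 10, rate)
        print(f"failure rate {rate:.0%}: about {runs:.0f} successful runs")
```

Under these assumptions, the same compute budget yields fewer completed experiments as the failure rate climbs, which is why reliability belongs under scalability.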

Efficiency

Now that you’ve scaled your training up enough to accommodate the ML experimentation process, the next step is making that process as efficient as possible. The question you can ask yourself regarding efficiency is: “How well am I using my resources?” or “How can we get more output per dollar spent?”

The key to maximizing efficiency is understanding how inefficient your system is in a way that allows for identifying improvements. You might have read that and thought, “Well, duh,” but there’s a huge difference between knowing a system is inefficient because training constantly fails and knowing a system is inefficient because you’re meticulously tracking hardware usage and failure rates. The latter allows for improvement and the former doesn’t. Put more succinctly: Metrics that aren’t tracked won’t be improved.

This is especially true for machine learning because, at the scale required, an efficient experimentation system involves a large number of trackable metrics.

The first metric to track is resource utilization. This tracks hardware “uptime” or the amount of time compute is actually being utilized. Idle GPUs are wasted resources.
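As a minimal sketch of what tracking this could look like, the snippet below computes utilization as busy device-hours divided by available device-hours over a reporting window. The `BusyInterval` record and the `utilization` function are hypothetical names, and the sketch assumes busy intervals on the same device never overlap.

```python
from dataclasses import dataclass


@dataclass
class BusyInterval:
    """A span during which one device was doing useful work (hypothetical record)."""
    device_id: str
    start_hour: float  # hours since the start of the reporting window
    end_hour: float


def utilization(intervals, num_devices, window_hours):
    """Fraction of available device-hours that were actually used.

    Assumes intervals on the same device do not overlap.
    """
    if num_devices <= 0 or window_hours <= 0:
        raise ValueError("num_devices and window_hours must be positive")
    busy_hours = 0.0
    for interval in intervals:
        # Clip each interval to the reporting window before counting it.
        start = max(0.0, interval.start_hour)
        end = min(window_hours, interval.end_hour)
        busy_hours += max(0.0, end - start)
    return busy_hours / (num_devices * window_hours)


if __name__ == "__main__":
    log = [
        BusyInterval("gpu-0", 0.0, 12.0),
        BusyInterval("gpu-1", 6.0, 24.0),
    ]
    print(f"utilization: {utilization(log, num_devices=2, window_hours=24.0):.1%}")
```

In practice the busy intervals would come from whatever scheduler or monitoring system you already run; the point is only that the number is cheap to compute once the data is logged.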

Tracking resource utilization gives insight into where the system can be optimized. Methods for improving utilization include:

Optimizing resource allotment

Optimizing model design

Reducing duplicated work

An example of an efficiency improvement is tracking metrics to understand data efficiency and using methods such as knowledge distillation (training a smaller model to reproduce the outputs of a larger one) or transfer learning (further training a pre-trained model to perform a different task) to reduce resource usage while improving model performance.

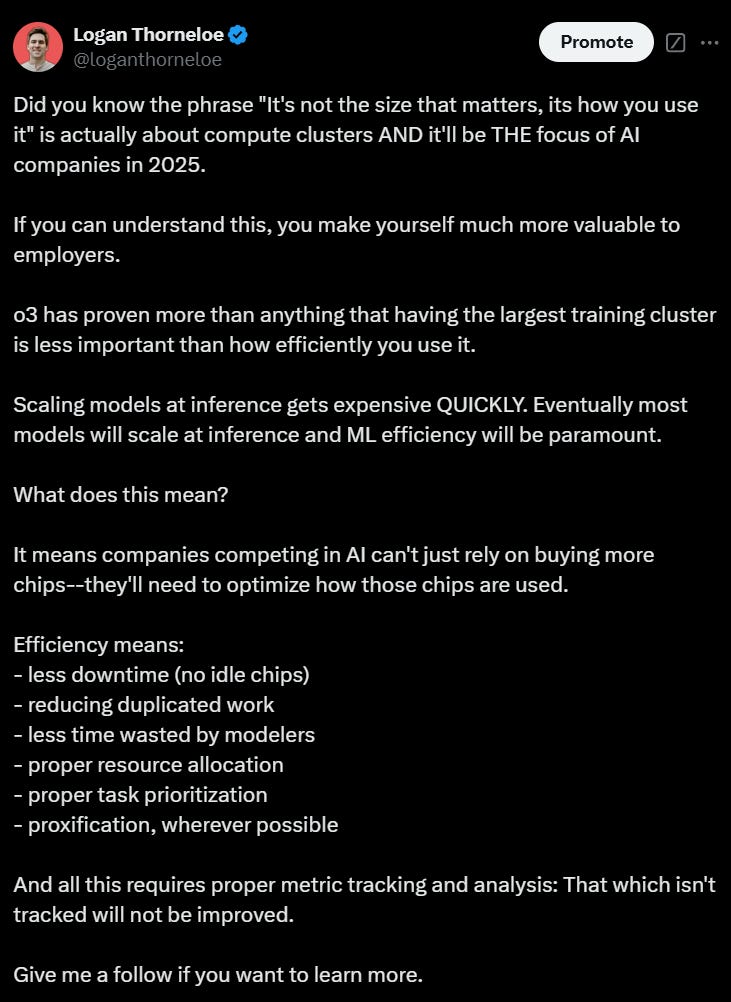

The important thing to note about efficiency is that it allows companies to compete. Companies won’t last if they aren’t able to scale and experiment efficiently.

I’ve said it many times: efficiency will be a huge talking point for AI in 2025. Doing more with less will be important not just for cost savings, but also for improving model performance.

Velocity

The third principle for machine learning at scale is velocity. It aims to maximize experimentation output from a system.

Examples of questions velocity metrics aim to answer are:

“How can we optimize the time modelers spend on their experiments?”

“How can we maximize the number of valuable experiments we can run in a certain period of time?”

“Can we make tradeoffs between experimentation latency and throughput to maximize resource efficiency?”

There are so many factors that play into these questions. Solving them is difficult and open-ended. For example, there can be different answers to these questions based on the day of the week or based on team or company culture. These questions are also only examples and many velocity-based questions are team- or company-dependent.

Thus, velocity metrics and improvements are less well-defined than the other two principles. Tracking and improving velocity can require cooperation between everyone involved in the experimentation process because many of the questions relate to the people involved and situations that arise when experimenting.

This means there are many metrics that can be tracked to understand ML experimentation velocity, but those metrics depend on the specifics of the experimentation process. However, there are three primary metrics that should be defined and tracked to understand velocity regardless of the situation:

Latency: End-to-end time for ML experimentation.

Throughput: Experiments completed over an allotment of time.

Bandwidth: Number of experiments capable of being in process at a given time.
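The three definitions above can be sketched from a simple log of experiment submit and finish times. This is only an illustration: `ExperimentRecord` and the function names are hypothetical, times are in hours, and observed peak concurrency is used as a stand-in for bandwidth, since true capacity may be higher than what was actually in flight.

```python
from dataclasses import dataclass


@dataclass
class ExperimentRecord:
    """Submit and finish times for one experiment, in hours (hypothetical record)."""
    submitted: float
    finished: float


def mean_latency(records):
    """Average end-to-end time per experiment."""
    return sum(r.finished - r.submitted for r in records) / len(records)


def throughput(records, window_start, window_end):
    """Experiments finished per hour within [window_start, window_end)."""
    done = sum(1 for r in records if window_start <= r.finished < window_end)
    return done / (window_end - window_start)


def peak_concurrency(records):
    """Most experiments in process at once; an observed proxy for bandwidth."""
    events = []
    for r in records:
        events.append((r.submitted, 1))
        events.append((r.finished, -1))
    # Sorting tuples puts a finish (-1) before a start (+1) at the same time.
    events.sort()
    current = peak = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak


if __name__ == "__main__":
    log = [
        ExperimentRecord(0.0, 4.0),
        ExperimentRecord(1.0, 3.0),
        ExperimentRecord(2.0, 6.0),
        ExperimentRecord(5.0, 7.0),
    ]
    print("mean latency (h):", mean_latency(log))
    print("throughput (per h):", throughput(log, 0.0, 8.0))
    print("peak concurrency:", peak_concurrency(log))
```

Computing all three from the same log makes it easier to see trade-offs, for example when raising concurrency improves throughput but lengthens each experiment's latency.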

These three metrics sliced by the same factors mentioned in the efficiency section give further insight into system inefficiencies that may not be directly apparent when investigating resource utilization. These are especially helpful when combined with scalability and efficiency metrics from the above sections. They also provide a basis for making trade-offs (sacrificing performance in certain metrics to improve others) to optimize overall system performance.

An important note is that velocity enables companies to outcompete. If a company can make the most of its human resources (such as modelers) and optimize the entire experimentation process, it’ll be able to develop AI faster than competitors.

That’s all for this article! I was going to tack on a part three about how the above principles can help you understand which companies will win the AI race, but I decided to post that on X instead. If you’d prefer a written post about it instead, let me know.

If you’re interested in machine learning engineering topics, don’t forget to subscribe to get these articles directly in your inbox.

See you next time!

Always be (machine) learning,

Logan