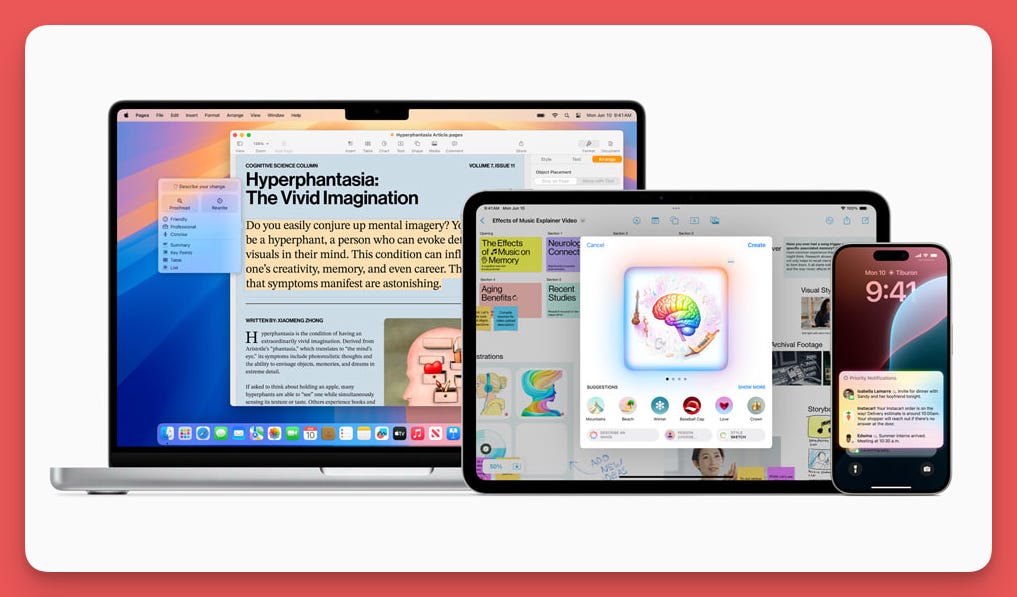

Apple Intelligence was announced yesterday at WWDC, and there are machine learning-related implications that a lot of people are missing, so I’m going to go over what Apple Intelligence means for you. Even if you use Android or don’t own any Apple devices, a lot of these updates will likely permeate the rest of the industry.

First and foremost, let’s get a few things out of the way:

Apple Intelligence is a really good thing. Making machine learning capabilities accessible to more people (for free!) will always be good.

Apple Intelligence is a terrible name. It’s a very Apple thing to rebrand AI but the branding just seems a bit cringeworthy to me. Let me know what you think.

There was a lot of important machine learning-related information left out of the keynote. This info can be found in Apple’s blog posts. I find this frustrating: the presentation was designed for people who aren’t invested in AI, but some of this information really needed to be included in it.

If you missed the WWDC keynote and want to watch it, start at the second half or read their press release. The first half was mostly about bringing features Android and Windows already have to Apple devices.

Here are the big Apple Intelligence updates:

Siri is good now. Siri is now backed by generative AI. It can generate images, better understand context and input, and use an LLM to generate responses. Since Siri is integrated with the Apple ecosystem, it is also personalized with information about you. This kind of LLM integration is what we need to make AI truly productive. Siri can also pull context from your screen when you’re making a request. All these new Siri features are free.

Apple introduces Private Cloud Compute. New Siri will run an LLM on-device to fulfill simple requests, but will also run a larger LLM in the cloud to service larger requests. More information on these models can be found here. To make this possible and maintain user privacy, Apple has introduced a new cloud that includes hardware security features and end-to-end encryption of your personal info, making it inaccessible even to Apple. The cloud also never stores your personal information. More info regarding this is in their blog post.

A few thoughts: I think we’ll need to see how this plays out before passing judgment, but it seems promising. I also wonder what the downsides of some of the security approaches are, or if Apple Silicon really is the secret sauce that allows this to work. Other cloud providers have had cutting-edge security for years without features like this.

One potential downside pointed out on X: will cloud-based Siri be capable of maintaining request context with the new security features? If no information is stored in the cloud, how will Apple make this work? I’m excited to learn more about this.

Private Cloud Compute means Apple has datacenters using their M-series chips. It makes me think about other possibilities for these datacenters. It’s possible Apple doesn’t intend to use them for anything else, but there are promising potential enterprise applications.

Siri integrates with ChatGPT, kind of. Siri can ask ChatGPT questions if you allow it to. If Siri detects ChatGPT might be good at answering a question, it’ll ask you if it’s okay to pass the question along. Your IP address is protected and your personal information is never shared with OpenAI. It seems like AI-based Siri has a three-tier system: an on-device Apple LLM, an Apple cloud LLM, and OpenAI’s ChatGPT. I’m wondering why the ChatGPT integration is necessary, but maybe it’s just a handy feature. Someone pointed out on X that Apple may have mentioned it to make clear that the cloud models Siri uses aren’t ChatGPT. I think that’s plausible too. I definitely don’t think the workflow of asking an assistant to ask another assistant something is useful at all. We’ve seen it before and it hasn’t worked out.

Apple Intelligence is coming to iPhone 15 Pro and M-series devices. Since there’s a cloud involved, you’d think any device incapable of on-device computing would fall back to using only the cloud offering, but this isn’t the case. Instead, those devices just don’t get Apple Intelligence. There are probably some technical limitations to address to make older devices work, but this could very well be an Apple move to sell more new devices. It’s a shame that people with two-year-old iPhones will feel like they’re no longer supported.

Images and Genmojis can be generated based on people in your Photos app. Apple introduced the ability to create emojis (called “Genmojis”) and other images based on people present in your Photos. They gave a few examples, one of which was creating a happy birthday image in which the person is turned into a cartoon. I’m wondering if this will have potential likeness issues. After the ScarJo/OpenAI issue, I wouldn’t want to encourage using generative AI with someone else’s likeness, especially since it was made to seem like you could do this with any random person in the background of a photograph you’ve taken.

There you have it: those are the most important machine learning considerations regarding Apple Intelligence. Let me know what you think and, as always, please correct me if I got anything wrong.

Always be (machine) learning,

Logan

Machine Learning Resources and Updates

Below are the machine learning resources and updates from the past few days you don't want to miss. If you want all the ML updates from X, follow me on there. You can get all the updates and resources each week by supporting Society's Backend for just $1/mo.

Private Cloud Compute: A new frontier for AI privacy in the cloud

Forget Sora — Kling is a killer new AI video model that just dropped and I’m impressed

Apple Intelligence: BEST update from Apple after Years (Supercut)

A case study in reproducibility of evaluation with RewardBench

NotebookLM goes global with Slides support and better ways to fact-check

Claude’s Character

Claude 3 is an AI with "character training" to make it more curious, thoughtful, and open-minded. It avoids adopting extreme views and aims to be honest and engaging. This improves its interactions and helps it navigate complex human values.

Tips for Deploying Machine Learning Models Efficiently

Deploying machine learning models involves optimizing them for performance, containerizing with tools like Docker, implementing CI/CD for reliable updates, monitoring performance, and ensuring security and compliance. These practices enhance efficiency and reliability.

Private Cloud Compute: A new frontier for AI privacy in the cloud

Apple's Private Cloud Compute (PCC) brings their device-level privacy and security to the cloud. It ensures that user data is accessible only to the user and not even to Apple. PCC uses advanced hardware and software to protect data during processing and prevent leaks. Security researchers can verify these protections, ensuring transparency and trust. This marks a significant advancement in cloud AI security.

google/mesop

Mesop is a Python UI framework for fast web app development, used at Google. It supports hot reloads, strong type safety, and requires no JavaScript/CSS/HTML. Build apps quickly with easy-to-understand Python code. Install via pip and start coding in less than 10 lines.

The AI PC Arrives, OpenAI Used For Disinformation, U.S. and China Seek AI Agreement, Training Models to Reason

Microsoft launched AI-powered Copilot+ PCs. OpenAI models were used in disinformation campaigns. The U.S. and China discussed preventing AI catastrophes. AI's potential for harm and benefits were highlighted, with a focus on responsible use and regulation.

Demystifying NPUs: Questions & Answers

The article explains Neural Processing Units (NPUs), highlighting their role in AI tasks like voice recognition and visual content creation. Major chip makers like Intel, AMD, Qualcomm, and Nvidia are integrating NPUs into their processors for better performance.

Introducing Apple’s On-Device and Server Foundation Models

Apple has developed advanced AI models to enhance user tasks on iPhones, iPads, and Macs. These models improve writing, notifications, and image creation. They prioritize privacy and responsible AI development. Key innovations include on-device and server-based models.

Forget Sora — Kling is a killer new AI video model that just dropped and I’m impressed

Kling is a new AI video model by Kuaishou, offering advanced features like 2-minute 1080p videos, accurate physics, and photorealism. It's a strong competitor to OpenAI's Sora, but its availability outside China is uncertain.

Extracting Concepts from GPT-4

OpenAI developed methods to break down GPT-4's inner workings into 16 million interpretable patterns. They used scalable autoencoders to achieve this. While promising, the methods still face challenges in fully understanding and validating these patterns.

Mathematics for Machine Learning

The book "Mathematics for Machine Learning" teaches essential math skills for understanding machine learning. It covers key concepts like linear algebra, calculus, and probability theory. Available as a free PDF.

SITUATIONAL AWARENESS: The Decade Ahead

The text discusses the rapid advancement of AI technology in the next decade, focusing on the development of superintelligent machines and the challenges and implications that come with it.

Apple Intelligence: BEST update from Apple after Years (Supercut)

Mervin Praison's video on YouTube discusses a major update from Apple, highlighting its significance. The update is considered one of the best from Apple in years and is important as it showcases advancements in Apple's technology and user experience.

5 Useful Loss Functions

A loss function measures the difference between predicted and actual values. This blog covers five key loss functions: Binary Cross-Entropy and Hinge for classification, and Mean Square Error, Mean Absolute Error, and Huber for regression. Each helps improve model accuracy.
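To make those five losses concrete, here is a minimal sketch in plain Python. It is my own illustration and not code from the linked post. The function names, the `{0, 1}` label convention for cross-entropy, the `{-1, +1}` convention for hinge, and the default `delta=1.0` for Huber are all assumptions chosen for the example.

```python
import math


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean binary cross-entropy. Labels are 0 or 1, predictions are probabilities."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0 or 1
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


def hinge(y_true, scores):
    """Mean hinge loss. Labels are -1 or +1, scores are raw model outputs."""
    return sum(max(0.0, 1 - y * s) for y, s in zip(y_true, scores)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Mean of squared residuals; penalizes large errors heavily."""
    return sum((y - yh) ** 2 for y, yh in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    """Mean of absolute residuals; less sensitive to outliers than MSE."""
    return sum(abs(y - yh) for y, yh in zip(y_true, y_pred)) / len(y_true)


def huber(y_true, y_pred, delta=1.0):
    """Mean Huber loss: quadratic for small residuals, linear beyond delta."""
    total = 0.0
    for y, yh in zip(y_true, y_pred):
        r = abs(y - yh)
        total += 0.5 * r * r if r <= delta else delta * (r - 0.5 * delta)
    return total / len(y_true)
```

On a regression example with a single outlier, such as targets `[1, 2, 3]` and predictions `[1, 2, 5]`, the squared error grows fastest, while Huber sits between MSE and MAE in how it treats the large residual.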

Mathematics of Machine Learning

A new book is being created to help you master the mathematics of machine learning. It offers step-by-step explanations from basic to advanced concepts without complex tricks. Join early access to be part of the book's development and get personal assistance from the author. Check out a free preview to see if it's what you need.

Introducing Apple Intelligence, the personal intelligence system that puts powerful generative models at the core of iPhone, iPad, and Mac

Apple introduced Apple Intelligence for iPhone, iPad, and Mac, integrating powerful AI with personal context. It enhances writing, photo searches, and Siri's capabilities. Privacy is prioritized with Private Cloud Compute. ChatGPT is also integrated.

A case study in reproducibility of evaluation with RewardBench

The text discusses issues with evaluating reward models using RewardBench, highlighting variability in results due to factors like batch sizes, tokenizer truncation, and misimplementation of reward models. Solutions and observations for improving stability are provided.

Let's reproduce GPT-2 (124M)

In the video titled "Let's reproduce GPT-2 (124M)," Andrej Karpathy explains how to recreate the GPT-2 model with 124 million parameters. The video is available on YouTube.

OpenAI welcomes Sarah Friar (CFO) and Kevin Weil (CPO)

OpenAI has welcomed Sarah Friar as CFO and Kevin Weil as CPO to help scale operations and drive growth. Sarah will enhance financial strategy, while Kevin will focus on product innovation. Their expertise will support OpenAI's mission of advancing AI research and deployment.

On the Effects of Data Scale on Computer Control Agents

This study tests if fine-tuning alone can improve computer control agents using a new dataset, AndroidControl. Results show fine-tuning helps in-domain performance but is less effective out-of-domain, especially for complex tasks.

Developing an LLM: Building, Training, Finetuning

The text discusses developing large language models (LLMs) through pretraining, training, and finetuning for various tasks like text generation and classification.

🥇Top ML Papers of the Week

This week's top ML papers cover diverse topics: a multilingual model for 200 languages, a method to extract patterns from GPT-4, a new architecture for efficient state space models, matrix multiplication-free LLMs, and thought-augmented reasoning. Other topics include LLM confidence training, concept geometry, alignment methods, and a new AgentGym framework.

NotebookLM goes global with Slides support and better ways to fact-check

NotebookLM, an AI research and writing assistant, is now available in over 200 countries. It supports Google Slides, web URLs, and more. New features include inline citations and multimodal capabilities. Users can quickly create summaries, study guides, and more.

Developing an LLM: Building, Training, Finetuning

Sebastian Raschka's 1-hour presentation covers the development of Large Language Models (LLMs). It includes stages like dataset preparation, tokenization, pretraining, finetuning, and evaluation. The video has clickable chapter marks for easy navigation. Happy viewing!

Would You Build the Manhattan Project in the UAE?

The article compares the development of AI to the Manhattan Project, highlighting national security risks. It argues that AI, like the atomic bomb, can be weaponized, and stresses the importance of controlling AI infrastructure and data to mitigate these risks.

Thanks for reading!
