The Metrics Machine Learning Engineers Care About That Modelers Don't

And a brief overview of TPUs in Google data centers

In the realm of machine learning, everyone mentions training performance metrics like:

Accuracy: The ratio of correct predictions to total predictions for classification tasks.

Precision: The ratio of true positives (correctly predicted positives) to all predicted positives in classification tasks.

Recall: The ratio of true positives to all actual positives in classification tasks.

Error: A measure of how far predicted values are from actual values in regression tasks. This is generally computed with metrics such as Mean Squared Error (MSE) or Root Mean Squared Error (RMSE).
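As a quick reference, here is a minimal pure-Python sketch of these metrics. It assumes binary classification labels where 1 is the positive class. In practice you'd likely use a library such as scikit-learn rather than writing these yourself.

```python
import math
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    # Correct predictions / total predictions
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def precision(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    # True positives / everything the model predicted as positive
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    predicted_pos = sum(p == 1 for p in y_pred)
    return tp / predicted_pos if predicted_pos else 0.0


def recall(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    # True positives / everything that is actually positive
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    actual_pos = sum(t == 1 for t in y_true)
    return tp / actual_pos if actual_pos else 0.0


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    # Mean of squared differences between actual and predicted values
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    # Square root of MSE, back in the units of the target
    return math.sqrt(mse(y_true, y_pred))
```

For labels `[1, 0, 1, 1, 0]` and predictions `[1, 0, 0, 1, 1]`, accuracy is 0.6 and both precision and recall are 2/3.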

The other metrics required to make a machine learning model run in production and work for end users are barely mentioned. In reality, the metrics above capture maybe half of what goes into creating a quality model. They're the metrics modelers care about during training, but the large majority of people working in machine learning won't be modelers and will be concerned with other things.

It's understanding and optimizing metrics throughout the machine learning pipeline that creates an effective machine learning system. For example, if your model outperforms similar models on every benchmark but costs four times as much to run, it likely won't be the better model for the end user. Similarly, a great model that takes half a minute to run inference on each user request will lose out: users will likely prefer a slightly worse model they can prompt five or six times in the time it takes to prompt yours once.

The metrics machine learning engineers care more about include:

Serving reliability

Training and serving redundancy

Data errors

Occupancy rate

Training efficiency

Here's an overview of these less-discussed metrics that machine learning engineers need to think about constantly.

Reliability, redundancy, and scalability

Reliability, redundancy, and scalability are important in all areas of software engineering, but they take on new meaning in machine learning because of the costs involved. I wrote a general overview of this in my machine learning infrastructure article, but I'll get into a few specifics I find interesting here.

The first thing I want to mention is the importance of inference speed, touched on in one of the examples above. Inference speed matters to the end user, but it's complicated by the fact that serving often runs on the same AI accelerator chips required for training. This means balancing resources so training stays smooth and efficient while serving stays fast and reliable. Using too few chips for serving leads to a poor user experience and throttled requests, but using too many takes away important training resources. I'll talk about that a little more in the occupancy section below.
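The serving-versus-training trade-off can be framed as a back-of-the-envelope capacity calculation. The sketch below is illustrative only: it assumes each chip sustains a fixed number of requests per second and that you reserve a fixed fraction of headroom for traffic spikes. The function names and the headroom policy are hypothetical.

```python
import math


def serving_chips_needed(peak_qps: float, qps_per_chip: float, headroom: float = 0.2) -> int:
    """Chips to reserve so peak traffic fits with spare capacity.

    `headroom` is the fraction of extra capacity kept free for spikes
    (an assumed policy, not a standard value).
    """
    if qps_per_chip <= 0:
        raise ValueError("qps_per_chip must be positive")
    return math.ceil(peak_qps * (1 + headroom) / qps_per_chip)


def chips_left_for_training(total_chips: int, serving_chips: int) -> int:
    # Whatever serving doesn't reserve is available to training jobs
    return max(total_chips - serving_chips, 0)
```

With 800 QPS at peak, 50 QPS per chip, and 25% headroom, serving needs 20 chips, leaving 44 of a 64-chip allocation for training. Raising the headroom improves serving reliability but directly shrinks the training pool.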

Redundancy is interesting in machine learning because serving runs on AI-specialized chips, making it more expensive than serving other software applications. As a result, redundancy to ensure uptime is also much more expensive. One solution I've seen is using chips optimized specifically for serving.

What's even more interesting about redundancy in machine learning is that the training process may also need redundancy to maximize resource usage and output. Production models at large companies can take days to train on many chips. What happens if there's a failure partway through? We can't just throw out the entire training run, or we lose a lot of money and time. Instead, we need to be able to roll back to a point before the failure and restart training from there.
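One common way to restart from before a failure is periodic checkpointing. Here's a toy sketch that assumes a single process whose entire "model" is a small dict serialized as JSON. Real systems save sharded tensors across many hosts, and every name here is hypothetical.

```python
import json
import os
import tempfile
from typing import Optional, Tuple


def save_checkpoint(path: str, step: int, state: dict) -> None:
    # Write to a temp file, then rename over the old checkpoint, so a crash
    # mid-write never leaves a half-written checkpoint behind.
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp, path)


def load_checkpoint(path: str) -> Tuple[int, dict]:
    # Resume from the last saved step, or start from scratch if none exists.
    if not os.path.exists(path):
        return 0, {"weight": 0.0}
    with open(path) as f:
        ckpt = json.load(f)
    return ckpt["step"], ckpt["state"]


def train(path: str, total_steps: int, ckpt_every: int, fail_at: Optional[int] = None) -> dict:
    step, state = load_checkpoint(path)
    while step < total_steps:
        if step == fail_at:  # simulate a chip or host failure
            raise RuntimeError(f"simulated failure at step {step}")
        state["weight"] += 1.0  # stand-in for one optimizer update
        step += 1
        if step % ckpt_every == 0:
            save_checkpoint(path, step, state)
    return state


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        ckpt = os.path.join(d, "ckpt.json")
        try:
            train(ckpt, total_steps=10, ckpt_every=3, fail_at=7)
        except RuntimeError as err:
            print(err)  # simulated failure at step 7
        print(load_checkpoint(ckpt)[0])  # 6: only work after step 6 is redone
        print(train(ckpt, total_steps=10, ckpt_every=3))  # {'weight': 10.0}
```

The checkpoint interval is the trade-off: checkpointing more often loses less work on failure but spends more time writing state.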

This becomes even more complex in systems that use online training and continuously train on live data. If a training process goes down, another one needs to quickly pick up where it left off. For reliability, redundancy, and scalability, I would track the metrics used for other software services, such as request latency, and couple them with the metrics below to make sure reliability targets are actually met.
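Request latency is usually tracked as percentiles rather than a mean, since tail latency is what a slow user actually experiences. A minimal sketch using Python's standard `statistics` module, assuming you already collect per-request latencies in milliseconds:

```python
import statistics
from typing import Dict, Sequence


def latency_summary(latencies_ms: Sequence[float]) -> Dict[str, float]:
    """Summarize request latencies; tails (p95/p99) matter more to users than the mean."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two samples")
    # With n=100, quantiles() returns the 99 cut points for percentiles 1..99.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {
        "mean": statistics.fmean(latencies_ms),
        "p50": cuts[49],
        "p95": cuts[94],
        "p99": cuts[98],
    }
```

A service can have a healthy mean while its p99 drifts upward, which is why alerting is typically set on the tail percentiles.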

Data errors

Data handling within large machine learning pipelines has three primary steps: extraction, preprocessing, and feeding that data into models. Training data is the most important factor in achieving excellent model performance, so each of these three steps needs to be handled with great care to ensure high-quality data. This is such a time-intensive and important task that large companies have teams (or many teams) dedicated solely to extracting and preprocessing data and to building systems that reliably feed that data into models.
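The three steps can be sketched as a chain of Python generators, so records stream through extraction, preprocessing, and batching without the whole dataset being held in memory. This is a toy illustration that assumes raw records arrive as `text,label` lines. The stage names mirror the paragraph above and aren't any real framework's API.

```python
from typing import Iterable, Iterator, List


def extract(raw_lines: Iterable[str]) -> Iterator[dict]:
    """Stage 1: parse raw records (here, assumed 'text,label' lines)."""
    for line in raw_lines:
        text, _, label = line.rpartition(",")
        yield {"text": text, "label": label}


def preprocess(records: Iterable[dict]) -> Iterator[dict]:
    """Stage 2: normalize fields and drop records that can't be parsed."""
    for r in records:
        if not r["text"] or not r["label"].strip().isdigit():
            continue
        yield {"text": r["text"].strip().lower(), "label": int(r["label"])}


def feed(records: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Stage 3: group records into fixed-size batches for the model."""
    batch: List[dict] = []
    for r in records:
        batch.append(r)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # final partial batch
        yield batch
```

Usage: `for batch in feed(preprocess(extract(lines)), batch_size=32): ...`. Because each stage is lazy, a record is pulled through all three only when the training loop asks for the next batch.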

We've previously discussed the importance of proper data handling and usage, and studies have shown that it can be easy to extract training data from current LLMs. This means machine learning engineers have to make sure sensitive data and intellectual property are handled carefully during training, either by not collecting that data at all or by ensuring it is properly stored and managed. This is the first step of proper data handling.
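As a minimal illustration of filtering sensitive data before it's stored, the sketch below drops records carrying a hypothetical `restricted` flag (for example, set upstream for licensed IP) and redacts anything that looks like an email address. The regex is a deliberately crude stand-in: real PII and IP detection needs far more than one pattern.

```python
import re
from typing import Iterable, Iterator, Optional

# Crude email matcher, for illustration only
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def scrub(record: dict) -> Optional[dict]:
    """Return a safe-to-store copy of the record, or None to skip it entirely."""
    if record.get("restricted"):  # hypothetical flag: never collect this record
        return None
    return {**record, "text": EMAIL_RE.sub("[EMAIL]", record["text"])}


def scrub_all(records: Iterable[dict]) -> Iterator[dict]:
    for r in records:
        cleaned = scrub(r)
        if cleaned is not None:
            yield cleaned
```

Running this before storage, rather than before training, means sensitive values never land in the dataset at all.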

Next, machine learning engineers need to ensure high-quality data. This means preprocessing properly, but also having a benchmark for what high-quality data looks like and an easy way to run that evaluation as part of the data processing pipeline. Then, if data is deemed unfit for training, there needs to be a way to make sure it isn't used. This might seem like a simple task, but it becomes much more complex when an entire fleet is training on live data.
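One way to encode a quality benchmark is as a set of named checks, with failing records quarantined rather than silently dropped so they can be debugged later. The checks below are hypothetical examples for a binary-labeled text dataset, not a recommended standard.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List, Tuple

Check = Callable[[dict], bool]

# Hypothetical quality rules; a real benchmark would be defined per dataset.
CHECKS: Dict[str, Check] = {
    "non_empty_text": lambda r: bool(r.get("text", "").strip()),
    "label_in_range": lambda r: r.get("label") in (0, 1),
    "not_too_long": lambda r: len(r.get("text", "")) <= 1000,
}


@dataclass
class ValidationResult:
    accepted: List[dict] = field(default_factory=list)
    # (record, names of the checks it failed), kept out of training
    quarantined: List[Tuple[dict, List[str]]] = field(default_factory=list)


def validate(records: Iterable[dict], checks: Dict[str, Check] = CHECKS) -> ValidationResult:
    result = ValidationResult()
    for r in records:
        failed = [name for name, check in checks.items() if not check(r)]
        if failed:
            result.quarantined.append((r, failed))
        else:
            result.accepted.append(r)
    return result
```

Only `accepted` is passed on to training, and the failed-check names double as the raw material for the error-rate metrics discussed below.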

Feeding that data into multiple models also becomes difficult as a fleet grows. Machine learning engineers need to make sure the data works for each training purpose, which will likely differ depending on the team using it. The data also needs to be fed into models without blocking training. This requires loading data into memory ahead of time so it's available when needed, and keeping data handling and validation low-latency. This is harder than it seems at scale, especially when the models are very large and the chips don't have enough memory to hold all the data needed for training at once. I'll go into this further in the occupancy section below.
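A standard way to keep the training loop from waiting on data is to load upcoming batches on a background thread. The sketch below assumes batches come from any Python iterable. The bounded queue caps how many batches are held in memory at once, which matters when the data can't all fit at one time. Frameworks such as `tf.data` provide this as a built-in (`Dataset.prefetch`), so treat this as an illustration of the idea rather than something to deploy.

```python
import queue
import threading
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def prefetch(batches: Iterable[T], buffer_size: int = 4) -> Iterator[T]:
    """Yield batches while a background thread loads the next ones."""
    q: queue.Queue = queue.Queue(maxsize=buffer_size)

    def producer() -> None:
        try:
            for b in batches:
                q.put(("batch", b))  # blocks while the buffer is full (backpressure)
            q.put(("done", None))
        except Exception as exc:  # hand loader errors to the training loop
            q.put(("error", exc))

    threading.Thread(target=producer, daemon=True).start()
    while True:
        kind, payload = q.get()
        if kind == "done":
            return
        if kind == "error":
            raise payload
        yield payload
```

Usage: `for batch in prefetch(load_batches(), buffer_size=8): train_step(batch)`, where `load_batches` and `train_step` are hypothetical. If the consumer stops early, the daemon producer stays blocked on `put`, which is acceptable for a sketch but worth handling in real code.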

To make all of this work, you need metrics that show which data practices are and aren't working. The most important one is the outcome of validating training data, from which you can calculate an error rate. This should be broken down along multiple dimensions (such as team-wide, user-wide, and chip-wide failures) to understand what's going wrong in the data collection pipeline. Errors in the data pipeline stall model training and lead to a worse user experience.
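Here's a minimal sketch of a validation error rate broken down by one dimension at a time. It assumes each validation outcome is logged as a dict with `team`, `chip`, and `passed` fields; those field names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable


def error_rates(events: Iterable[dict], dimension: str) -> Dict[str, float]:
    """Fraction of failed validations for each value of `dimension` (e.g. 'team' or 'chip')."""
    totals: Dict[str, int] = defaultdict(int)
    failures: Dict[str, int] = defaultdict(int)
    for e in events:
        key = e[dimension]
        totals[key] += 1
        if not e["passed"]:
            failures[key] += 1
    return {k: failures[k] / totals[k] for k in totals}


if __name__ == "__main__":
    log = [
        {"team": "search", "chip": "c0", "passed": True},
        {"team": "search", "chip": "c0", "passed": True},
        {"team": "search", "chip": "c1", "passed": False},
        {"team": "ads", "chip": "c1", "passed": False},
    ]
    print(error_rates(log, "team"))  # search ~0.33, ads 1.0
    print(error_rates(log, "chip"))  # c0 0.0, c1 1.0
```

In this toy log, the team-level view makes `ads` look like the problem, while the chip-level view shows every failure came from chip `c1`. Comparing breakdowns like this is what points to the real cause.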

Occupancy

In my opinion, this is the most interesting and complex topic for machine learning engineers. Put simply, occupancy rate is the fraction of chips being used for training at a given time. In production machine learning (and even on your own personal workstation), AI accelerators are incredibly valuable, and idle chips waste both time and money.
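Occupancy is easiest to reason about in chip-time: busy chip-seconds divided by available chip-seconds over a window. A minimal sketch, assuming a log of allocations with a team, a chip count, and start/end times (a hypothetical schema):

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Allocation:
    team: str
    chips: int
    start: float  # seconds
    end: float


def _chip_seconds(a: Allocation, t0: float, t1: float) -> float:
    # Only count the part of the allocation that falls inside [t0, t1).
    overlap = min(a.end, t1) - max(a.start, t0)
    return a.chips * max(overlap, 0.0)


def occupancy(allocs: List[Allocation], total_chips: int, t0: float, t1: float) -> float:
    """Busy chip-seconds divided by available chip-seconds over [t0, t1)."""
    capacity = total_chips * (t1 - t0)
    return sum(_chip_seconds(a, t0, t1) for a in allocs) / capacity


def occupancy_by_team(allocs: List[Allocation], total_chips: int, t0: float, t1: float) -> Dict[str, float]:
    """Each team's share of total capacity; the shares sum to overall occupancy."""
    capacity = total_chips * (t1 - t0)
    shares: Dict[str, float] = defaultdict(float)
    for a in allocs:
        shares[a.team] += _chip_seconds(a, t0, t1) / capacity
    return dict(shares)
```

For example, on 8 chips over 10 seconds, a 4-chip job running the whole window plus a 2-chip job running for the last 5 seconds gives 50 of 80 chip-seconds, an occupancy of 0.625. Note that this counts allocated chips, not chips doing useful work.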

This problem has a solution that's simple to state but much harder to execute: always be training. One might think a high occupancy rate means good training, but high occupancy means nothing if training is inefficient. Occupancy rate becomes complex for a number of reasons:

Reliability and redundancy: I mentioned this above. What happens when a training cluster goes down? How do we restart that model elsewhere without losing training progress? How do we ensure clusters don't go down in the first place?

Preemption: With a fleet-wide training pipeline, you'll have multiple teams and users training, all of whom need resources. How do you decide who gets resources and whose training is prioritized? This becomes especially complex once you take data center architecture into account.

Resource allotments: How do we decide how many chips/cores each job should get? For example, a job can train on fewer chips and take longer, or we can allot more chips so it trains faster. How do we make this determination, and how do we optimize it for higher occupancy given the data center network?
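To make the preemption and allotment questions concrete, here is a deliberately simple greedy policy, one of many possible: admit jobs in priority order at their minimum size, then grow admitted jobs toward their maximum with whatever capacity is left. Job names, priorities, and chip bounds are hypothetical, and a job that receives zero chips is the one that would be preempted or queued. Real schedulers also weigh topology, fairness, and the cost of preempting a job mid-run.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Job:
    name: str
    priority: int   # higher is scheduled first (hypothetical policy)
    min_chips: int  # smallest slice the job can make progress on
    max_chips: int  # beyond this, extra chips don't speed it up


def allocate(jobs: List[Job], capacity: int) -> Dict[str, int]:
    order = sorted(jobs, key=lambda j: j.priority, reverse=True)
    alloc = {j.name: 0 for j in jobs}
    free = capacity
    # Pass 1: admit jobs at minimum size so as many high-priority jobs run as possible.
    for j in order:
        if j.min_chips <= free:
            alloc[j.name] = j.min_chips
            free -= j.min_chips
    # Pass 2: hand leftover chips to admitted jobs, by priority, to keep occupancy high.
    for j in order:
        if alloc[j.name] and free:
            extra = min(j.max_chips - alloc[j.name], free)
            alloc[j.name] += extra
            free -= extra
    return alloc  # jobs left at 0 chips are preempted or queued
```

With 18 chips and jobs `prod` (priority 10, 8 to 12 chips), `research` (priority 5, 8 to 16 chips), and `exp` (priority 1, exactly 4 chips), `prod` gets 10, `research` gets 8, and `exp` waits. Because pass 1 skips jobs that don't fit, a smaller low-priority job can still backfill gaps a larger one couldn't use.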

All of this complexity is compounded by data center architecture. Using Google's architecture as an example (since it's what I'm most familiar with), let me explain what I mean. I'll start with a bit of background.

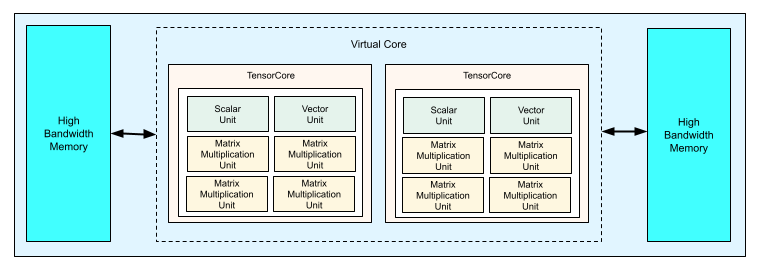

Google's AI chips are called TPUs (tensor processing units), and they're controlled by a TPU host. The TPU host is a separate computer that runs a virtual machine (VM), also known as a worker. This virtual machine has access to the TPUs on a server rack. Each TPU has one or more cores, and each core contains the computational units that train and serve machine learning models. The TPU host is responsible for training and serving on the set of TPUs it has access to. Multi-host training and serving is done by distributing the work across multiple TPU hosts.

TPUs are grouped together in data centers into a TPU pod. A pod is a specialized network that uses high-bandwidth connections so TPUs can communicate with one another. This matters for running training and serving calculations in parallel across TPUs, since those high-bandwidth links make communication between chips much faster. Pod size, number of cores, and the number of TPUs a host has access to can all differ depending on TPU version.
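The hierarchy described above (a pod contains hosts, a host drives TPUs, a TPU contains cores) can be modeled as nested data structures. The counts in this sketch are made up for illustration and don't correspond to any specific TPU version, since the real numbers vary by version.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TPU:
    cores: int


@dataclass
class Host:
    """A TPU host (worker VM) and the TPUs it can drive."""
    tpus: List[TPU]

    @property
    def cores(self) -> int:
        return sum(t.cores for t in self.tpus)


@dataclass
class Pod:
    """Hosts whose TPUs share the pod's high-bandwidth network."""
    hosts: List[Host]

    @property
    def cores(self) -> int:
        return sum(h.cores for h in self.hosts)


def make_pod(num_hosts: int, tpus_per_host: int, cores_per_tpu: int) -> Pod:
    # Build a uniform pod; real pods are fixed by hardware generation.
    return Pod([Host([TPU(cores_per_tpu) for _ in range(tpus_per_host)])
                for _ in range(num_hosts)])
```

For instance, a hypothetical pod of 4 hosts, each driving 4 TPUs with 2 cores apiece, has 8 cores per host and 32 per pod. Those two numbers are what a scheduler reasons about when placing jobs.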

So why does all this matter? When setting up a training job with a certain number of TPUs/cores, the job needs to be placed in a pod that can provide those resources. If a job needs more TPUs than a single pod offers, it has to be split across multiple pods. That's a problem because the job no longer has high-bandwidth communication across all of its cores, which can slow down parallel training. At the other extreme, a really small job can be scheduled on a single worker, but it may not need all the cores that worker is responsible for. So even when the job is scheduled optimally, occupancy can take a hit, because those extra cores aren't usable by other jobs.
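The two placement problems in this paragraph come down to simple arithmetic. This sketch assumes jobs are allocated whole hosts (workers) and that every pod holds the same whole number of hosts. The core counts in the example are hypothetical.

```python
from typing import NamedTuple


class Placement(NamedTuple):
    hosts: int           # workers the job occupies
    pods: int            # pods the job spans
    stranded_cores: int  # allocated to the job but unused, and unusable by others

    @property
    def spans_pods(self) -> bool:
        # Crossing a pod boundary means losing the pod's high-bandwidth links.
        return self.pods > 1


def place(job_cores: int, host_cores: int, pod_cores: int) -> Placement:
    if job_cores <= 0 or host_cores <= 0 or pod_cores < host_cores:
        raise ValueError("need job_cores > 0 and 0 < host_cores <= pod_cores")
    hosts = -(-job_cores // host_cores)  # integer ceiling: hosts come whole
    hosts_per_pod = pod_cores // host_cores
    pods = -(-hosts // hosts_per_pod)
    stranded = hosts * host_cores - job_cores
    return Placement(hosts, pods, stranded)
```

With 8-core hosts and 32-core pods, a 3-core job fits on one host but strands 5 cores, while a 40-core job needs 5 hosts and therefore spans 2 pods.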

These memory and scheduling constraints need to be taken into account when scheduling training and serving and when deciding how resources are best used. This is one of the most important jobs of a machine learning engineer. To clarify, data center networking and architecture are handled by a separate team; optimizing resource usage through machine learning infrastructure is the machine learning engineer's job. To understand resource usage, it's important to track occupancy rate in detail (broken down by model, team, etc.), but it's also important to understand training efficiency to confirm that high occupancy reflects effective training.

Training efficiency: Throughput, latency, and velocity

From an efficiency standpoint, there are two primary types of efficiency a machine learning engineer should understand. The first is more commonly discussed: model throughput and latency. Throughput and latency are the bread and butter of a great model training pipeline and are affected by everything covered in the sections above. Your data errors, training reliability, and occupancy rate all contribute to the bigger picture of model throughput and latency. I'd also track throughput and latency on a user-, team-, and chip-wide basis to catch drops in throughput and increases in latency and better understand their causes.
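Here's a minimal sketch of per-step throughput and latency grouped by team or chip, plus a check that flags groups whose throughput fell below a baseline. The `Step` record and the 10% tolerance are assumptions for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Step:
    team: str
    chip: str
    examples: int   # examples processed in the step
    seconds: float  # wall-clock duration of the step


def throughput_by(steps: Iterable[Step], key: str) -> Dict[str, float]:
    """Examples per second, grouped by a Step attribute such as 'team' or 'chip'."""
    examples: Dict[str, int] = defaultdict(int)
    seconds: Dict[str, float] = defaultdict(float)
    for s in steps:
        group = getattr(s, key)
        examples[group] += s.examples
        seconds[group] += s.seconds
    return {g: examples[g] / seconds[g] for g in examples}


def mean_step_latency_by(steps: Iterable[Step], key: str) -> Dict[str, float]:
    """Average seconds per step, grouped the same way."""
    total: Dict[str, float] = defaultdict(float)
    count: Dict[str, int] = defaultdict(int)
    for s in steps:
        group = getattr(s, key)
        total[group] += s.seconds
        count[group] += 1
    return {g: total[g] / count[g] for g in total}


def flag_dips(current: Dict[str, float], baseline: Dict[str, float], tolerance: float = 0.1) -> List[str]:
    """Groups whose throughput fell more than `tolerance` below their baseline."""
    return sorted(g for g, v in current.items()
                  if g in baseline and v < baseline[g] * (1 - tolerance))
```

If chip `c1` suddenly processes half as many examples per second as its baseline, `flag_dips` surfaces it even though the team-level throughput may only dip slightly.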

The second is less-discussed: modeler velocity. I'd want to know where modeler toil occurs and what pieces of infrastructure block progress on the modeler side. Modelers are an expensive resource and shouldn't be blocked on infrastructure. There's a reason large companies separate modeling teams from other machine learning-related initiatives so modelers can focus solely on improving models without having to deal with other issues that come up throughout the machine learning process.

Tracking latency and throughput gives a clearer picture of infrastructure inefficiencies. Pairing this with an understanding of modeler velocity shows which pieces of machine learning infrastructure are causing avoidable costs. For latency and throughput, I would track the time spent at each stage of the training process. For modeler velocity, I would track when a model isn't making progress and determine the cause of that blockage.
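Tracking time spent at each stage can be as simple as a context manager that accumulates wall-clock time per named stage. This is a standard-library-only sketch, and the stage names in the usage example are hypothetical.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import Dict, Iterator


class StageTimer:
    """Accumulates wall-clock time spent in each named pipeline stage."""

    def __init__(self) -> None:
        self.totals: Dict[str, float] = defaultdict(float)

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:  # record the time even if the stage raises
            self.totals[name] += time.perf_counter() - start

    def breakdown(self) -> Dict[str, float]:
        """Fraction of measured time per stage, largest first."""
        total = sum(self.totals.values()) or 1.0
        shares = ((k, v / total) for k, v in self.totals.items())
        return dict(sorted(shares, key=lambda kv: kv[1], reverse=True))
```

In a training loop you would wrap each step, e.g. `with timer.stage("load_batch"): ...` and `with timer.stage("train_step"): ...`. If `load_batch` dominates the breakdown, the bottleneck is the input pipeline rather than the accelerators.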

Fleet-wide Metrics

In case it wasn't clear from the metrics above, it's important as a machine learning engineer to track your metrics across multiple dimensions. You should understand your metrics across teams, users, chips, model types, training parameters, and any other significant training attributes. This is the easiest way to identify inefficiencies in a machine learning pipeline and quickly understand how to fix them.

Let me know if I missed anything or you have corrections. You can support Society's Backend for just $1/mo for your first year and get access to all my machine learning resources and biweekly updates on the most important developments in AI and what they mean for you.