MatMul-free Machine Learning Can Improve Efficiency, Excellent Foundational Learning Resources, SSMs Might Be Better Than Transformers, and More

The top 10 most important updates of last week: 7/8/24

Here are the most important machine learning resources and updates from the past week. I share more frequent ML updates on X if you want to follow me there. You can support Society's Backend for just $1/mo to get a full list of everything I’m reading in your inbox each week.

Beyond MatMul: The New Frontier of LLMs with 10x Efficiency [Breakdowns]

The article discusses MatMul-Free Large Language Models (MMF-LLMs), which eliminate matrix multiplications to improve efficiency in deep learning. These models are more computationally and memory efficient, using ternary weights and simpler operations. Innovations like the Fused BitLinear Layer and the MatMul-free Linear Gated Recurrent Unit (MLGRU) reduce memory usage and speed up training. Imagine if AI weren't compute intensive: that's the potential this research shows.
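To make the ternary-weight idea concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the paper's implementation: the function names are hypothetical, and the quantization step assumes an absmean-style rule (scale by the mean absolute weight, then round and clip to -1, 0, or +1). The point it shows is that once every weight is -1, 0, or +1, a matrix-vector product needs only additions and subtractions.

```python
def quantize_ternary(weights):
    """Map float weights to {-1, 0, +1} (assumed absmean-style rule).

    Returns the ternary matrix and the scale needed to approximately
    recover the original magnitudes.
    """
    flat = [abs(w) for row in weights for w in row]
    scale = sum(flat) / len(flat) or 1.0  # avoid dividing by zero
    ternary = [
        [max(-1, min(1, round(w / scale))) for w in row]
        for row in weights
    ]
    return ternary, scale


def ternary_matvec(ternary, x):
    """Compute W @ x for ternary W using only adds and subtracts."""
    out = []
    for row in ternary:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi      # +1 weight: add the input
            elif w == -1:
                acc -= xi      # -1 weight: subtract the input
            # 0 weight: skip the input entirely
        out.append(acc)
    return out


weights = [[0.9, -0.05, -1.2], [0.1, 0.8, -0.7]]
ternary, scale = quantize_ternary(weights)
y = [scale * v for v in ternary_matvec(ternary, [2.0, 3.0, 5.0])]
```

The inner loop never multiplies a weight by an input; the single multiply by `scale` happens once per output element, after accumulation.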

Foundations of Applied Mathematics

"Foundations of Applied Mathematics" is a series of textbooks for undergraduate and graduate students at Brigham Young University's Applied and Computational Mathematics program. The textbooks cover topics like Mathematical Analysis, Algorithms, Data, and Dynamics. The accompanying Python labs help students apply theoretical concepts practically. This is definitely a learning resource worth checking out.

Announcing New Dataset Search Features

Hugging Face has revamped their Dataset search features to make it easier to share and find the datasets needed for machine learning. Hugging Face has been an excellent resource for community-led dataset collection and sharing. Data is the most important factor for high-quality, performant machine learning models. Hugging Face’s commitment to making quality data easier to find is huge for the AI community.

🥇Top ML Papers of the Week

I’ve found it incredibly tough to go through ML papers myself each week. There are hundreds coming to arXiv each day, most of which aren’t super significant. Papers that go directly to arXiv aren’t peer reviewed and basically anything can be posted there. Instead, I let other people more closely involved in the research community go through them for me. Elvis does a great job of putting these top ML paper updates together each week and I don’t miss an issue.

5 Common Mistakes in Machine Learning and How to Avoid Them

This article highlights five common mistakes in machine learning and how to avoid them. It's a great overview of some of the complexities of machine learning systems and the ML system design process, and I think it's particularly helpful for people new to machine learning.

The Method Google Used to Reduce LLM Size by 66%

This is the article I wrote last week and it has three really important takeaways: 1) Gemma 2 from Google is out. It’s an open model that performs really well at small sizes. 2) Knowledge distillation is a huge part of the size reduction. Size reduction is (and will continue to be) paramount for making AI widely accessible. 3) The ability for a model to be trained either from scratch or via knowledge distillation is an excellent example of why software engineers should understand machine learning.

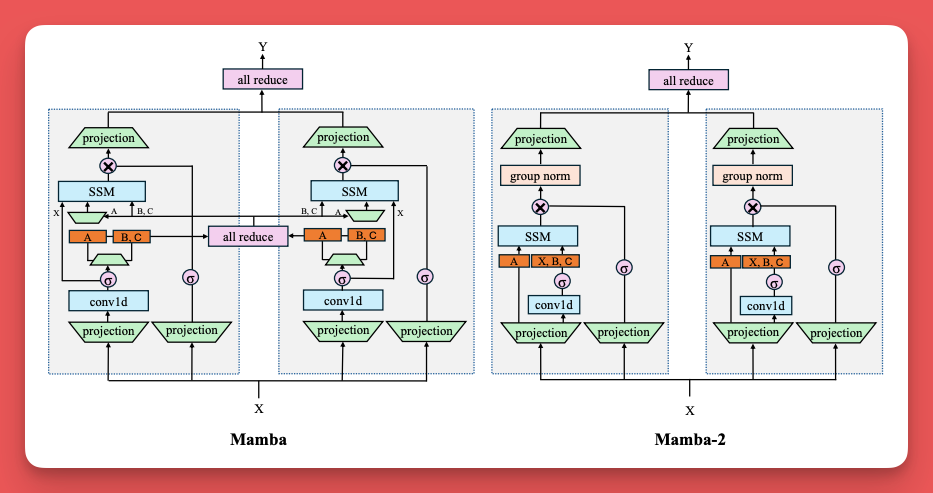
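For readers who haven't seen knowledge distillation before, here is a minimal sketch of the core idea: a small student model is trained to match the softened output distribution of a larger teacher. This follows the generic textbook formulation (temperature-scaled softmax plus KL divergence). It is not Gemma 2's exact training recipe, and the function names and the `temperature` default are assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) over softened distributions."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return sum(
        pt * (math.log(pt) - math.log(ps))
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0
    )


teacher = [4.0, 1.0, 0.5]
good_student = [3.9, 1.1, 0.4]   # close to the teacher
poor_student = [0.5, 4.0, 1.0]   # disagrees with the teacher
close_loss = distillation_loss(good_student, teacher)
far_loss = distillation_loss(poor_student, teacher)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which is the signal the student's training minimizes.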

An Empirical Study of Mamba-based Language Models

Selective state-space models (SSMs) like Mamba overcome some of the shortcomings of Transformers, such as quadratic computational complexity with sequence length and large inference-time memory requirements from the key-value cache. Moreover, recent studies have shown that SSMs can match or exceed the language modeling capabilities of Transformers, making them an attractive alternative. The potential for SSMs is huge if they’re able to make up for the downsides of Transformers.
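A toy sketch can show where the complexity difference comes from. The recurrence below is a deliberately simplified scalar state-space model with fixed parameters `a`, `b`, and `c` (all hypothetical values). Mamba's selective SSM makes these parameters depend on the input and uses a vector state, so treat this as an illustration of the scaling behavior only, not of Mamba itself.

```python
def ssm_scan(xs, a=0.5, b=1.0, c=1.0):
    """Linear recurrence: h_t = a * h_{t-1} + b * x_t, y_t = c * h_t.

    One pass over the sequence with a fixed-size state, so compute grows
    linearly with length and memory stays constant during inference.
    """
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys


def causal_attention_score_count(seq_len):
    """Number of query-key scores causal self-attention computes.

    Token t attends to tokens 1..t, giving seq_len * (seq_len + 1) / 2
    scores in total, which grows quadratically with length.
    """
    return seq_len * (seq_len + 1) // 2


outputs = ssm_scan([1.0, 0.0, 0.0])
scores_short = causal_attention_score_count(1_000)
scores_long = causal_attention_score_count(10_000)
```

Making the sequence 10x longer costs the recurrence 10x more steps, while the attention score count grows by roughly 100x. A key-value cache also has to keep an entry for every past token, whereas the recurrence carries only `h`.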

Deep Learning

The Deep Learning textbook by Ian Goodfellow, Yoshua Bengio, and Aaron Courville is a valuable resource for those interested in machine learning, especially deep learning. It is available online for free and can be ordered on Amazon. This book is the gold standard for a textbook approach to understanding deep learning and is worth checking out.

Can Long-Context Language Models Replace Specialized AI Systems?

This research explores whether advanced AI models can replace specialized systems for tasks like retrieval and question answering. Long-Context Language Models (LCLMs) show promise but face challenges with complex reasoning. They can match specialized systems in some tasks but struggle with others. The study highlights the potential for a shift in AI paradigms and the importance of well-designed prompts for optimal performance. The findings suggest LCLMs could simplify AI processes but require careful deployment considerations.

Interviewing Dean Ball on AI policy: CA SB 1047, upcoming AI disaster response, Llama 3 405B, Chinese open-source AI, and scaling laws

Nathan Lambert interviews Dean Ball about recent developments in AI policy, focusing on California's SB 1047 bill. They discuss the bill's origins, evolution, and potential impacts on AI fine-tuning and disaster response. I found this interesting and figured I would share it. AI regulation will be the most impactful external factor on AI advancement for the next decade by far and it's important to keep up with.

Thanks for reading!

Always be (machine) learning,

Logan