ML for SWEs 5: AI for Education is Bigger Than You Think

Machine learning for software engineers 4-4-25

The hardest part about working in AI and machine learning is keeping up with it all. I send a weekly email to summarize what's most important for software engineers to understand about the developments in AI. Subscribe to join 6400+ engineers getting these in their inbox each week.

If you want to learn ML, check out my roadmap to learn ML fundamentals from scratch.

Always be (machine) learning,

Logan

There isn’t much to discuss this week, but the ‘My picks’ section below has plenty of good updates, articles, and resources that you should definitely check out.

First, ChatGPT Plus is free for college students in Canada and the US through May. Similarly, Anthropic released Claude for Education, a specialized version of Claude designed for higher education. Instead of just giving students answers, it helps them ask better questions. The release also included a Claude Campus Ambassador program for students and a program through which students can apply for free API credits. If you’re a student, these are definitely worth checking out.

Education is a huge opportunity for AI. It’s a historically under-resourced sector in many countries, even though resourcing it adequately has great potential for impact. Expanding access to education and improving education systems can greatly improve a country’s quality of life (I could write a separate article about this if you’re interested; just let me know). Education is also a very personal endeavor because everyone learns differently. AI has an opportunity to make a great education more accessible and more personalized.

OpenAI also released its list of vendor dependencies for its services. The list details which third parties handle data for OpenAI and what they handle. Common names include Microsoft for infrastructure and Cloudflare for CDN services. This is worth understanding both as a user of ChatGPT and other OpenAI services and as an engineer who may build products on OpenAI’s APIs. It’s great that OpenAI publishes reports like this; it’s a crucial step toward transparency in AI and technology.

Also in the world of OpenAI, the company raised $40 billion in a new funding round that values it at around $300 billion. The round was led by SoftBank Group and tells us the market is still bullish on foundation models being incredibly valuable. That’s a massive valuation for a company hemorrhaging money the way OpenAI is. It puts OpenAI well above other tech companies, including Adobe (~$151B), Uber (~$138B), Intel (~$92B), Airbnb (~$68B), and its competitor Anthropic (~$61.5B).

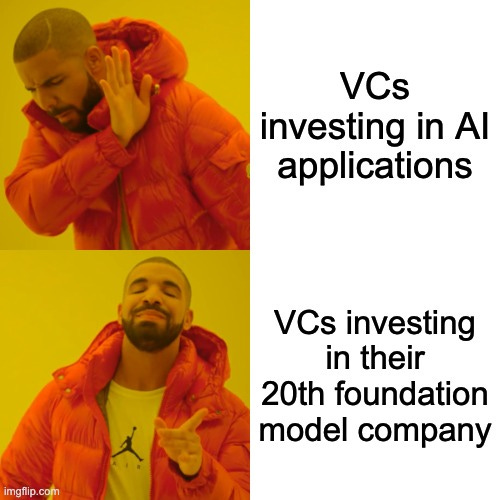

There has been a lot of discussion lately about AI’s value lying primarily in the application layer rather than in foundation models. The argument is essentially that foundation models will be a dime a dozen and that how they’re applied will drive the real value. My guess is that there will be money in both, but many of the companies focused on foundation models today won’t last.

In the world of Google, Gemini 2.5 Pro is still at the top of the LLM leaderboards by quite a margin. This is the first time I’ve used a single model for all my LLM use cases (primarily research, coding, and sometimes writing/outlining). Gemini 2.5 Pro coming to Cursor is huge for coding, which is one of its many strengths. If you don’t pay for Cursor, you can also use ‘Canvas’ mode in the Gemini app to have 2.5 Pro write code for you.

Lastly, I read a great article this week about how our technology products influence us instead of helping us, and I think it’s worth your time. I’ve been thinking about the rising popularity of the ‘dumb phone’ and how many people desperately need an escape from the constant distractions in their pocket. I watched a video about this that made me realize how much most people’s smartphones exist to influence their behavior.

Think about the typical person who buys an Apple Watch and an iPhone. Most people don’t filter their notifications or change any default settings. As soon as they log into an app, it starts pushing notifications straight to their wrist that are just ads. I believe ads have their place, but I don’t believe that place is on someone’s wrist. It’s like someone coming to your door to sell or ask for something, except this person is already inside your home and goes with you when you leave.

As AI continues to become more effective, being mindful about how we use technology will become more important, so that we use our technology instead of letting it use us.

That’s all for this week’s discussions! If you missed last week’s ML for SWEs, you can find it here:

Below are my picks for this week. The entire list is included for paid subs (just ~$3/mo for now!). Thank you all for your support!

My picks

What we learned from reading ~100 AI safety evaluations: A review of approximately 100 AI safety evaluations published in 2024 reveals a busy but largely static landscape. It highlights the challenges of developing comprehensive assessments of AI’s risks and benefits and calls for a structured approach to meta-evaluation to better understand AI’s societal impacts.

The Reality of Tech Interviews in 2025: The tech hiring market in 2025 is showing signs of recovery, particularly for experienced engineers, but remains challenging for new graduates due to heightened interview standards and competitive processes.

10. Auto-Encoder & Back-propagation | CSCI 5722: Computer Vision | Spring 25: An auto-encoder is a neural network architecture consisting of an encoder and a decoder, designed to compress input data into a lower-dimensional representation and then reconstruct the original input from that encoded form.

3 Ways You Can Sabotage Your Own Tech Career: Undervaluing yourself, assuming that a rejection from a company disqualifies you from future opportunities there, and submitting generic resumes are three common mistakes that can sabotage your tech career.

A repository providing an educational PyTorch implementation of the Llama 3.2 text model for learning and research. It has minimal dependencies and includes instructions for using the model weights and generating text.

Vision Large Language Models (vLLMs): Vision Large Language Models integrate visual information from images and videos into text-based models using techniques like cross-attention and separate training phases for stronger multimodal capabilities.

When machines learn to speak: A significant transformation in voice AI is underway, enabling fluid, human-like interactions and raising important questions about the future of communication and interpersonal relationships.

Subscribe to Society's Backend: Machine Learning for Software Engineers to keep reading this post and get 7 days of free access to the full post archives.