ML for SWEs 4: Waymo is the Perfect Example of ML Engineering, Gemini 2.5 Pro is #1, and GPT-4o Image Generation

Machine learning for software engineers 3-28-25

The hardest part about working in AI and machine learning is keeping up with it all. I send a weekly email to summarize what's most important for software engineers to understand about the developments in AI. Subscribe to join 6300+ engineers getting these in their inbox each week.

If you want to learn machine learning, check out my ML roadmap.

Always be (machine) learning,

Logan

21-year-old exposes Leetcode-style interviews

Every software engineer knows what Leetcode-style interviews are and the overwhelming disdain most engineers have for them. These interviews require candidates to spend months prepping skills they likely won’t use directly on the job.

This style of interview is hated so intensely because no one wants to spend months on difficult prep that may go to waste, and because it turns changing jobs into an even more complex process for employees while companies keep the ability to fire easily.

Naturally, since this process is so tedious, it’s become a prime target for AI assistance (remember, automating tedium with AI isn’t always a good thing). A 21-year-old from Columbia University created an application that automates the interview process by displaying an overlay with step-by-step answers to interview questions. He used it to get offers from large tech companies and then shared how he did it, which led to his offers being rescinded and even to his expulsion.

There’s a certain irony here: The companies trying to convince everyone to use AI are entirely against anyone using it during their interview process. Many are also claiming engineers will be replaced by AI by the end of this year while simultaneously hiring human engineers because that clearly isn’t the case.

I understand the motivations on both sides of this issue, but it’s such a silly situation. I don’t see a way for companies to get around AI-assisted interviewing in the future unless all interviews are done in person. I highly recommend watching The Code Report’s overview of this situation, as it goes further in-depth and it’s only 4 minutes long.

Leetcode-style interviews are a huge part of obtaining a job as an engineer and highly publicized cases like this have the potential to change what (in my opinion) is a fundamentally broken method of evaluating candidates.

I really hope this method gets fixed because, while it’s broken, it’s still the best way companies have to evaluate candidates. I don’t really see a better solution than what we currently have, even though it’s far from ideal.

If you have an idea for a better solution, I’d be very curious to know what it is.

You should still learn how to code

I won’t harp on this too much because it was part of ML for SWEs two weeks ago, but this is a friendly reminder that it’s still a fantastic time to learn how to code. I don’t quite understand how after the vibe coding messes from last week we can still come to the conclusion that it isn’t worth learning to code anymore.

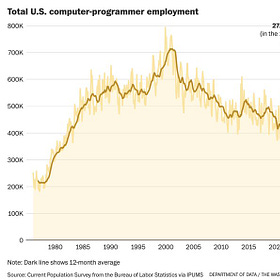

The most common arguments I hear against learning to code are that software engineers won’t exist in the near future and, more recently, that AI will take care of the coding and engineers will just need to review it.

I’ve written about the first argument many times, so I won’t go into detail. The best way to understand why that argument is wrong is simply to code with AI assistance for a while. You’ll see its pros and cons and realize that it isn’t anywhere near taking the jobs of engineers.

The second argument is absolutely baffling because it is a logical fallacy. How are you supposed to review AI-generated code if you can’t understand how the code works? I agree that a large part of software engineering will be reviewing AI-generated code, because this is already something I do, but if I weren’t capable of writing the code myself, I wouldn’t be able to identify the many, many bugs and mistakes the AI introduces.

Understanding the fundamentals of technical topics also comes with the benefit of knowing when and how best to use those fundamentals and the things built on top of them. This is why I so strongly advocate for learning ML fundamentals before building with it.

Dave Plummer’s tweet (shown above) provides perspective on this topic.

Gemini 2.5 Pro is #1 at everything and GPT-4o can generate images

The most interesting part of these two releases has been the discussion surrounding them. I swear there has been more discussion online about GPT-4o getting more attention than Gemini 2.5 Pro than there has been about the capabilities and benefits of either model.

But, of course, this is social media so the important information is often missing or skewed. So here’s what you should know!

Gemini 2.5 Pro was released and took the number 1 spot on most LLM leaderboards. It shows impressive performance in coding, math, and science. It has a 1 million token context window, which lets it work with very large inputs in a single prompt. This makes it an incredible tool for engineers building AI applications. Google has really been pushing the accessibility of its models, so I expect these capabilities to become even more accessible very soon.

GPT-4o can now generate images, and its results have been impressive enough to cause the “Studio Ghibli-fication” of the internet. It has improved text rendering, graphic creation, and context awareness. This makes image generation much more useful for content creation and opens up new avenues for building image generation into products.

What’s most interesting about GPT-4o’s release was the discussion between a Google DeepMind researcher and the founder of Midjourney about how exactly OpenAI was doing it. This post was a true gem of X, something that’s been difficult to find recently.

Waymo expands to more cities with more features

Waymo has expanded to Austin, TX, with plans to come to DC, Atlanta, and Miami. Waymo is also working on teen accounts that let teenagers aged 14-17 get around their city with their parents’ permission. There is also evidence showing that Waymos are significantly safer than human drivers.

Between the cars being available 24/7, giving teenagers autonomy and safe travel options, increasing the safety of the roads in a heavily car-dependent country, and more, Waymo is an excellent example of how machine learning and software engineering can combine to increase quality of life.

If you want to read more about Waymo’s safety, check out

’s article:

That’s all for this week’s discussion topics. If you missed last week’s edition of ML for SWEs, check it out here:

ML for SWEs 3: AI Can't Be Copyrighted, Don't Fall for Misinformation, and Stay Safe Online


Below are my picks for ML and software engineering learning resources from this past week you don’t want to miss. If you want the full list, or you want to support Society’s Backend (we’re nearing bestseller territory!!), you can do so for just $3/mo:

My picks

Training large language models more efficiently: A new training framework for large language models, called distribution-edited models (DEMs), significantly reduces computational costs by up to 91% while improving model quality through efficient use of mixed data distributions.

How not to build an AI Institute by

: The Alan Turing Institute is facing a crisis despite significant funding, as it struggles with governance issues, a lack of impactful research, and criticism for failing to meet its intended purpose in advancing AI in the UK.

Reduce AI Model Operational Costs With Quantization Techniques by

: Model quantization reduces the precision of neural network parameters to significantly lower memory usage and operational costs, while maintaining performance efficiency, especially in large-scale AI models.

AI Market Intelligence: March 2025 by

: Nvidia's acquisition of Gretel.AI to enhance its synthetic data capabilities highlights the growing importance of synthetic data in AI, while Google's internal dysfunction presents opportunities for agile competitors, and new open-source tools from DeepSeek aim to reshape AI infrastructure for scalable, proactive systems.

How LLMs and MultiModal AI are Reshaping Recommendation and Search by

: Large language models (LLMs) and multimodal AI are revolutionizing recommendation and search systems by improving data quality, enhancing efficiency through techniques like knowledge distillation, and integrating search and recommendation tasks into unified architectures.

Empowering disaster preparedness: AI’s role in navigating complex climate risks: Artificial intelligence can enhance disaster preparedness by creating integrated early-warning systems that provide localized, impact-focused predictions and empower communities to respond effectively to complex climate risks.
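The quantization pick above comes down to a simple idea: store weights with fewer bits plus a scale factor that maps them back to floats. Here is a minimal sketch, assuming symmetric per-tensor int8 quantization (one common scheme among several, not necessarily the one the linked article covers). The function names are hypothetical, and it uses plain Python lists for readability rather than a real tensor library.

```python
# Hypothetical helpers: symmetric per-tensor int8 quantization (a sketch, not a library API).

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map float weights to int8 values in [-127, 127] plus one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # The scale maps the largest-magnitude weight to 127; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to guard against floating-point overshoot.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_int8(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights by multiplying back by the scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.27, 0.333]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    print("int8 values:", q)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each int8 value takes 1 byte instead of the 4 bytes of a float32, roughly a 4x memory reduction, at the cost of a rounding error of at most half the scale per weight. Production schemes often use per-channel scales or calibration data to shrink that error further.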

Hardware-Aware Coding: CPU Architecture Concepts Every Developer Should Know by

: Understanding CPU architecture concepts like instruction pipelining, superscalar execution, and caching can significantly enhance software performance by aligning code with hardware optimization techniques.
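The caching point in the hardware-aware coding pick is easy to see with a toy model. The sketch below is a hypothetical simulation, not a profiler or a model of any real CPU: it assumes a 64-byte cache line, a tiny fully associative LRU cache holding 8 lines, and a 64 x 64 array of 8-byte values stored row by row, then counts cache misses for row-by-row versus column-by-column traversal. The function names are made up for illustration.

```python
from collections import OrderedDict


def count_cache_misses(addresses: list[int], line_bytes: int = 64, capacity_lines: int = 8) -> int:
    """Count misses in a toy fully associative cache with LRU eviction (an assumption)."""
    cache = OrderedDict()  # cache-line index -> None, ordered least to most recently used
    misses = 0
    for addr in addresses:
        line = addr // line_bytes  # which cache line this byte address falls in
        if line in cache:
            cache.move_to_end(line)  # hit: mark as most recently used
        else:
            misses += 1
            cache[line] = None
            if len(cache) > capacity_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return misses


def traversal_addresses(rows: int, cols: int, elem_bytes: int = 8, row_major: bool = True) -> list[int]:
    """Byte addresses visited when walking a rows x cols array stored row by row in memory."""
    if row_major:
        order = [(r, c) for r in range(rows) for c in range(cols)]
    else:
        order = [(r, c) for c in range(cols) for r in range(rows)]
    return [(r * cols + c) * elem_bytes for r, c in order]


if __name__ == "__main__":
    print(count_cache_misses(traversal_addresses(64, 64, row_major=True)))   # 512
    print(count_cache_misses(traversal_addresses(64, 64, row_major=False)))  # 4096
```

Row-by-row traversal uses all 8 values in each line it loads, so it misses once per line (512 misses). Column-by-column traversal jumps 512 bytes per step and evicts each line before coming back to it, so every one of the 4,096 accesses misses. Real CPUs have multi-level, set-associative caches and hardware prefetchers, so actual numbers will differ, but the lesson about access order carries over.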
