ML for SWEs 3: AI Can't Be Copyrighted, Don't Fall for Misinformation, and Stay Safe Online

Machine learning for software engineers 3-21-25

The hardest part about working in AI and machine learning is keeping up with it all. I send a weekly email to summarize what's most important for software engineers to understand about the developments in AI. Subscribe to join 6000+ engineers getting these in their inbox each week.

Always be (machine) learning,

Logan

Don’t fall for misinformation, especially when it impacts your career

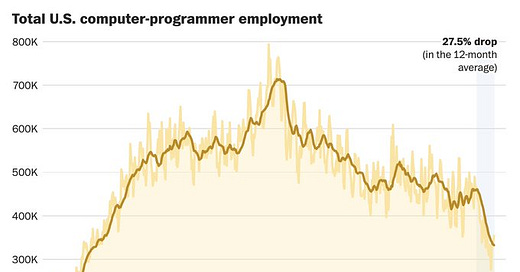

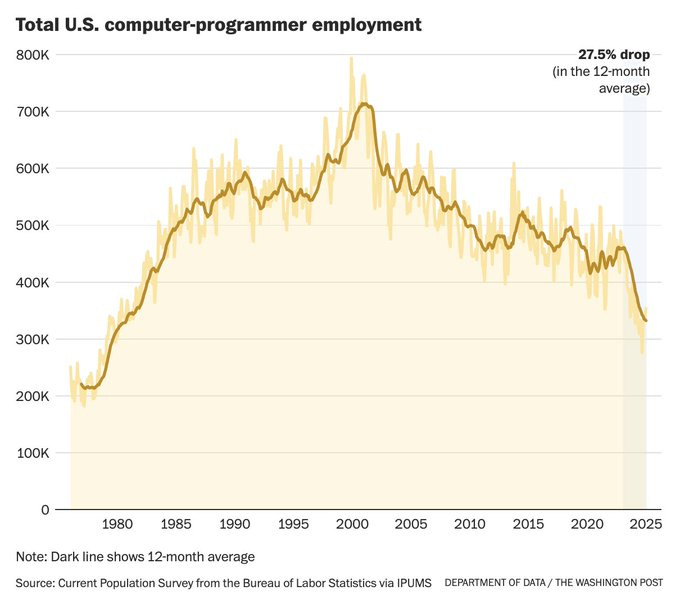

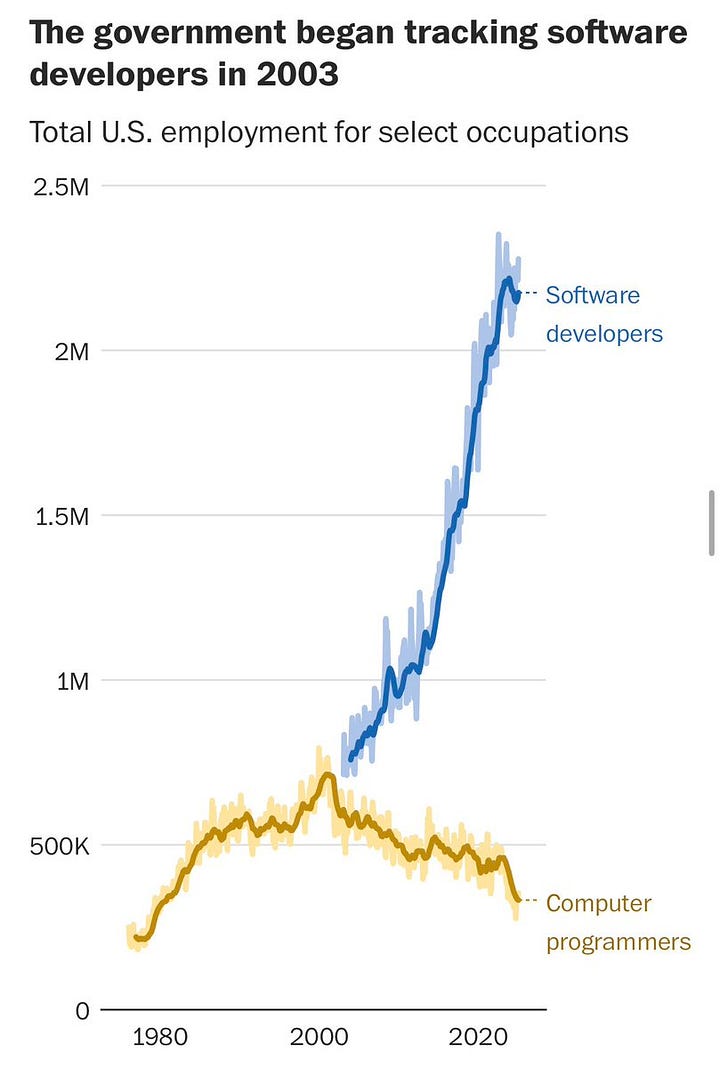

I want to start this edition of ML for SWEs off with a game. I’m going to show you two images. The first is from an article (and social media post) about computer programming jobs disappearing. The second is about the classification of programming jobs over the years. You tell me what’s wrong.

The first blatantly misrepresents programming jobs as being in steep decline when, in reality, the job title “computer programmer” was replaced by “software developer,” and jobs began to be tracked under the new title as the old one fell out of use.

I see this kind of fearmongering all the time on social media, and it’s my biggest pet peeve about being online. Misinformation runs rampant, and I want Society’s Backend followers to know the truth.

This is particularly bothersome to me because of the sheer number of engagement-farming posts and articles claiming that software engineering is “over” because job listings are down and companies are laying people off. Mix that with fears of AI taking jobs, and a lot of people get scared.

First, all the evidence I’ve seen suggests the layoffs and decreased job listings are primarily a correction from overhiring during COVID. That doesn’t make it good or make finding a job any easier, but software engineering is far from “over.” Second, all the research I’ve done shows that software engineering job listings in AI are actually on the rise.

Vibe coding might not be a vibe after all

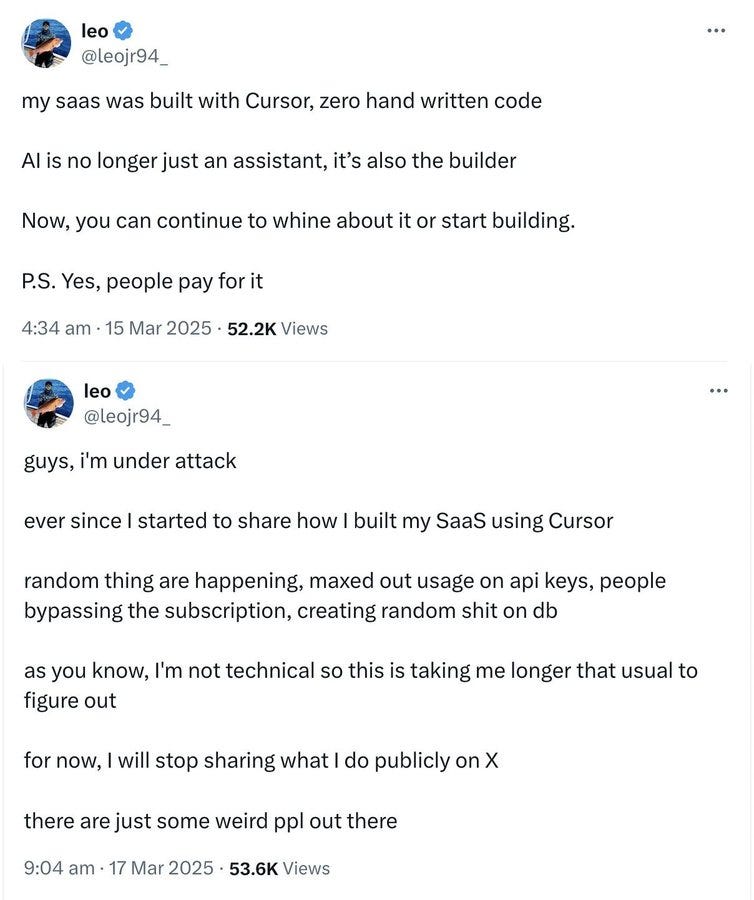

Another absolutely brilliant gem coming out of social media this week is a non-programmer bragging about creating a SaaS without knowing how to program, only to post about that same SaaS being under attack just two days later.

It turns out this person had exposed all their secrets while vibe coding and didn’t know that was a problem because they had no programming knowledge. On top of that, when things went wrong, debugging took them far longer because they didn’t understand what their code was doing.

This is exactly what so many actual software engineers have been warning about regarding vibe coding. I’ve even written an article about when to use AI to code and when not to. While non-technical influencers peddle it as the almighty software solution, they don’t understand the vulnerabilities and issues it can cause.
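The standard fix for this class of mistake is to keep secrets out of source code (and out of anything shipped to the browser) and load them on the server at runtime. Here’s a minimal Python sketch, assuming the secret is provided through an environment variable; the variable name `PAYMENTS_API_KEY` is hypothetical and not taken from the incident above.

```python
import os


def get_secret(name: str) -> str:
    """Return a secret from the process environment, failing loudly if it's absent.

    Reading secrets at runtime keeps them out of source control and out of any
    client-side bundle, as long as this code runs only on the server.
    """
    value = os.environ.get(name)
    if not value:
        # Fail fast instead of silently falling back to a hardcoded default.
        raise RuntimeError(f"Missing required secret: {name}")
    return value


# Bad: a hardcoded key lands in git history and in everything you deploy.
# API_KEY = "my-secret-key-123"  # hypothetical example of what NOT to do

# Better: the hosting environment or a secrets manager injects the value.
# PAYMENTS_API_KEY is a hypothetical variable name used for illustration:
# api_key = get_secret("PAYMENTS_API_KEY")
```

In a deployed app, you’d set the variable through your host’s environment settings or a secrets manager, and keep any local `.env` file out of version control (for example, by listing it in `.gitignore`).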

I’m starting to think Santiago’s vision might actually be the future of software engineering. Maybe software engineers will become freelancers coming in to fix what AI was incapable of, and maybe we’ll get paid a lot more to do it.

Stay safe online

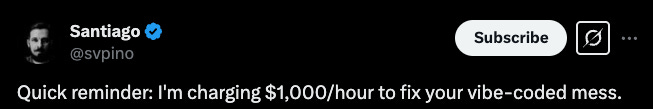

Since those were kind of negative, let’s look at a positive post from social media this week. As software engineers, we’re all technically savvy, but I’m surprised how many of us don’t take the necessary precautions to protect ourselves digitally.

Andrej Karpathy put together a comprehensive blog post about digital hygiene that I highly recommend reading. It explains digital security very clearly and covers how to stay safe online. It also explains the concept of virtual mail, which I didn’t even know existed, and it’s a game changer.

Reading that one article and implementing what it says could save you from identity theft, compromised finances, scams, and a ton more. It’s an absolute must-read in my book (and it’s very short!).

AI work cannot be copyrighted

This past week, a US appeals court reaffirmed that AI-generated content cannot be copyrighted, upholding the requirement that a work have a human author to receive copyright protection.

Here’s an important excerpt from the article:

A federal district court judge in Washington upheld the decision in 2023 and said human authorship is a "bedrock requirement of copyright" based on "centuries of settled understanding." Thaler told the D.C. Circuit that the ruling threatened to "discourage investment and labor in a critically new and important developing field."

I’m very curious to see how this develops, as I’m certain there will be further appeals. I’m most interested in how “human authorship” will be defined. For example, what if a person builds an AI system from scratch and trains it only on their own art? Is the output of that system then considered human-authored, since they authored both the art and the AI?

Or will we see copyright granted to content that has both AI and human involvement? If so, will only the human-created pieces be protected, or will the entire work be copyright-eligible?

Let me know what you think in the comments.

Nvidia GTC

Nvidia’s GTC 2025 highlighted significant advancements in AI inference and GPU technology. Of course, both of these are critical to AI development. Nvidia has been the frontrunner in this realm for a long time and it’s important to keep up with what they’re doing.

There were many highlights and this really could have its own article. Here’s a great overview from SemiAnalysis to get the scoop on what happened and what’s important.

Gemma is available via pip

Google released a Gemma PyPI package to make Gemma easier to use and fine-tune. Anything that makes AI more accessible to build with is good for the AI community, and especially for software engineers. Gemma is Google’s family of open models, which makes it especially useful to build with. Just make sure you understand its license so you know how it can be used.

To be clear: releasing tools like this doesn’t make it any less important to understand machine learning. Understanding ML is paramount to using these tools properly, but tools that make it easier to whip up working products are always helpful, especially for open models.

The full docs can be found here.

That’s it for discussion topics this week! Thanks for reading. If you missed last week’s ML for SWEs update, check it out here for more on Model Context Protocol (MCP) and why you should learn to code.

Below are my picks for articles and videos you don’t want to miss from this past week. My entire reading list is included for paid subs. Thank you for your support! 😊

If you want to support Society’s Backend, you can do so for just $3/mo.

Logan’s picks

The journey to becoming a self-taught AI engineer is accessible and valuable, requiring hands-on experience, a willingness to learn, and the ability to adapt to rapidly evolving technologies.

How to manage AI training organizations (and teams): Effective management of AI training teams hinges on maintaining small, focused groups that can quickly adapt and communicate while scaling up operations without losing direction or quality.

Understanding Large Language Models: Large language models (LLMs) are advanced statistical language models that generate text by predicting the next word based on vast amounts of training data, enabling them to perform tasks such as text generation, structured data interpretation, and code generation.

Fine Tune or Scale Up? OpenAI's Overlooked Experiments Say a Lot [Guest]: OpenAI's experiments reveal that while fine-tuned models can excel in specialized tasks, more powerful general models often outperform them, suggesting a potential shift in AI development priorities.

If you want to learn machine learning engineering, start here: To become a successful machine learning engineer, software engineers should learn both software engineering and machine learning concepts using the curated resources and roadmap provided in this guide.

Inside the launch of FireSat, a system to find wildfires earlier: FireSat is a new satellite system launched by Google and partners to detect wildfires early and accurately, enabling quicker emergency responses and contributing to climate change mitigation.

AI Reading List for Systems Engineering (Part 1): Building successful AI solutions requires understanding the broader systems and human factors at play, rather than just focusing on specific models or technical improvements.
