ML for SWEs 7: Eval-driven Model Development?

Machine learning for software engineers 4-18-25

Society’s Backend now has 6600+ members! If you want to stay updated with everything software engineers should know about machine learning and join our quickly growing community, make sure to subscribe!

And remember, if you want to learn ML check out the roadmap I put together here.

Always be (machine) learning,

Logan

This was another HUGE week in AI. First, let’s go over the big announcements and then we’ll get into some interesting discussions.

Google introduced Gemini 2.5 Flash just yesterday. I haven’t had the chance to try it myself, but there are a lot of great reviews. I’ll update y’all as I give it a go. The main takeaway with Flash: This is likely the best model for most of your use cases. It’s incredibly cheap, reliable, and capable. Unless you specifically need more advanced features, Flash should be the default.

Google also announced Cell2Sentence-Scale (C2S-Scale), a family of open-source large language models trained to interpret and understand biology at the cell level. There are many important applications of AI in biology and healthcare and I love seeing advancements in this area. I’m probably a bit biased because I have family working in healthcare and I’ve previously worked in medical imaging research. This announcement didn’t make the waves I would expect, but it does seem to be appreciated by the biomedical community.

OpenAI also had a killer week, releasing both GPT-4.1 (yes, after GPT-4.5) and o3 along with o4-mini. I highly recommend reading the post I just linked because it has a lot of interesting details about the models. OpenAI also released OpenAI Codex CLI, an open-source command-line tool for using OpenAI’s reasoning models in your terminal.

My understanding is that GPT-4.1 was released to offer a model more capable than GPT-4o at a more manageable cost than GPT-4.5, which seemed to be hemorrhaging money. The big upgrade here is that GPT-4.1 has a 1 million token context window, whereas GPT-4.5 and GPT-4o only had 128,000.

o3 and o4-mini have been touted as being genius-level AI (whatever that actually means) and have shown impressive benchmarks. I haven’t had the chance to try these yet, but they seem to be excellent competitors to Gemini 2.5 Pro, which was so far ahead of other models that there wasn’t even a question as to which model was best. I’m excited to give these a go, as the community has had a really positive reception to them. Some people are even speculating that tool use was part of training to make them more capable and agentic.

Definitely give OpenAI’s models a try and tell me what you think. The big takeaway here is that competition between AI companies is very good for consumers. We need companies pushing each other to make AI cheaper and better.

OpenAI Codex CLI can be thought of as OpenAI’s competitor to Claude Code. The AI community has been very happy with Anthropic’s terminal-based AI code editing assistant with some even saying it writes better code than Cursor but at a greater cost.

Now onto the interesting discussion points:

Speaking of AI code editors,

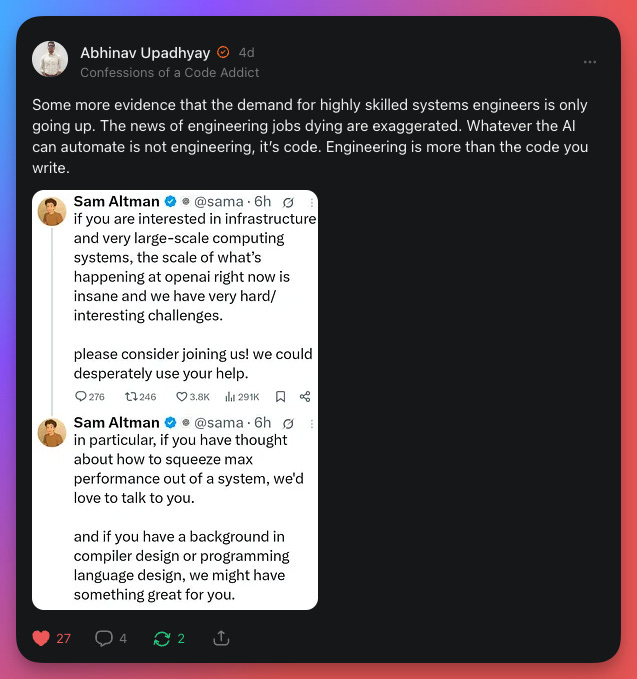

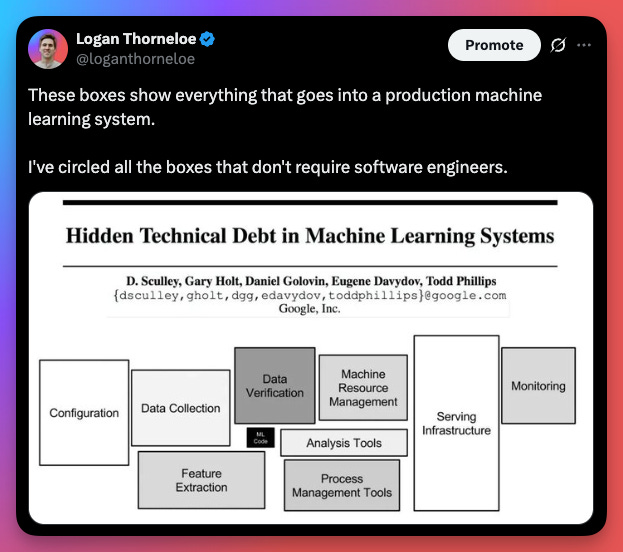

wrote an excellent piece about why it’s not worth using Cursor in an enterprise setting. When choosing AI editors, everyone seems to jump to cost without thinking about the many other important considerations. Devansh shows how reliable behavior, security and privacy, and performance are also important and why they show Cursor is likely not the best tool for your company in its current state. Worth the read! wrote an important Note that all software engineers should understand. At the same time companies are claiming software engineers will be replaced with AI or they’re offshoring SWE jobs to low-quality engineers overseas, companies at the peak of AI are practically begging for excellent machine learning talent.AI will increase the need for software engineers. The number of engineers needed to productionize AI systems is still vastly underestimated. Incredible engineering is very important to getting AI not just working, but working well. I posted a cheeky graphic on X this past week that I think describes this well.

Another interesting topic this week was Palantir offering their own “degree”. If you’re active in the Society’s Backend chat, we had a pretty interesting discussion about it from multiple viewpoints. Many people were excited for a better option than the currently overpriced higher education system, but others pointed out that this gives companies more power (and Palantir is a company many are especially wary of).

I will always applaud attempts to reform education because I think education systems are fundamentally broken and bloated, and improving education will have a ripple effect that improves many other aspects of society. The benefits of an educated society are overlooked (or they’re not and worsening education is being done on purpose, but that’s a conspiracy for another time), and better education is the first step toward fixing other problems.

I’m not sure if Palantir’s approach is a fix, but I don’t think anyone will know until we see how it plays out. I like the idea of teaching things on the job because, as all software engineers know, most of what you need to learn to do a technical job is learned on the job anyway.

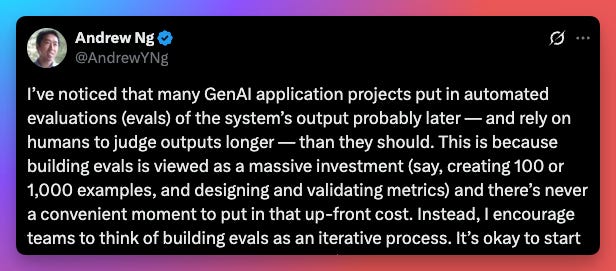

Lastly, Andrew Ng (an OG of deep learning, co-founder of Coursera, and professor at Stanford) posted on X about the importance of iterative evaluation when developing machine learning models. To be quite honest, evals are something I don’t know as much about as I should, so I’ll likely be writing about them in the future (subscribe to get that article!).

But this made me think of test-driven development, which has been widely successful for many teams. Is the future of developing machine learning models eval-driven model development? Or will that lead teams in the wrong direction?

It’s something I’ll be looking into, but in the meantime I highly suggest reading Andrew’s post.
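To make the test-driven analogy a bit more concrete, here is a minimal sketch of what an eval-first loop could look like. This is my own illustration, not something taken from Andrew’s post: the tiny eval set, the `accuracy` and `passes_eval` helpers, the 0.75 threshold, and both toy "models" are hypothetical stand-ins. The idea is simply that the eval is written first, a baseline fails it, and each new iteration is judged against the same bar.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical eval set, written before any model work (like writing tests first).
EVAL_SET: Sequence[Tuple[str, str]] = [
    ("great product, would buy again", "positive"),
    ("terrible, broke after a day", "negative"),
    ("love it", "positive"),
    ("awful experience", "negative"),
]


def accuracy(model: Callable[[str], str], examples: Sequence[Tuple[str, str]]) -> float:
    """Fraction of examples where the model's output matches the expected label."""
    correct = sum(1 for text, expected in examples if model(text) == expected)
    return correct / len(examples)


def passes_eval(
    model: Callable[[str], str],
    examples: Sequence[Tuple[str, str]],
    threshold: float = 0.75,  # assumed bar; a real team would pick its own
) -> bool:
    """Gate a model iteration on the eval score, like a test suite gating a commit."""
    return accuracy(model, examples) >= threshold


def baseline_model(text: str) -> str:
    """Iteration 0: always predicts 'positive'. Expected to fail the eval."""
    return "positive"


def keyword_model(text: str) -> str:
    """Iteration 1: a toy keyword rule standing in for a real model change."""
    negative_words = {"terrible", "awful", "broke"}
    words = text.replace(",", " ").split()
    return "negative" if any(word in negative_words for word in words) else "positive"


if __name__ == "__main__":
    for name, model in [("baseline", baseline_model), ("keyword", keyword_model)]:
        score = accuracy(model, EVAL_SET)
        print(f"{name}: accuracy={score:.2f}, passes={passes_eval(model, EVAL_SET)}")
```

The open question from above still applies: a gate like this is only as good as the eval set behind it, so optimizing hard against a small or unrepresentative eval could easily lead a team in the wrong direction.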

That’s it for this week’s discussions and important updates! I’ll be posting discussion topics more in the Society’s Backend chat throughout the week, so if you want these topics early make sure to join us there.

Last week we discussed the fact that RAG isn’t dead. If you want to learn more about that, check it out here:

Below are my picks for the week. I include these for paid subscribers as a thank you for supporting Society’s Backend, but this week I’m including them for everyone for free. I hope you enjoy them!

If you get value out of Society’s Backend, consider supporting my writing for just $3/mo. We’re very close to bestseller status!

My picks

How to Build an Agent: A simple command-line application allows users to chat with Claude, an AI, while enabling it to read local files and list directory contents through a few implemented tools.

How much does a 10 million token context window actually cost?: While Meta's Llama 4 Scout boasts a 10 million token context window, its practical use is limited by significant training and serving costs, as well as challenges in accurately recalling information from such a large context.

NVIDIA Doesn’t Have a Communication Problem—It Has a Timeline Problem [Guest]: NVIDIA's main challenge lies in effectively communicating the relevance of its AI innovations to the general public, rather than in the technology itself.

Automating hallucination detection with chain-of-thought reasoning: HalluMeasure is a new method for detecting and analyzing hallucinations in large language models by breaking down responses into claims, classifying them by error type, and using chain-of-thought reasoning to enhance accuracy and understanding of these errors.

Model Context Protocol has prompt injection security problems: The Model Context Protocol (MCP) has serious prompt injection security issues that can lead to malicious exploitation and data exfiltration when tools are misused or poorly implemented.

5 Lessons Learned Building RAG Systems: Building effective retrieval augmented generation (RAG) systems requires prioritizing high-quality information retrieval, careful context management, systematic verification to reduce hallucinations, optimizing computational costs, and continuous knowledge management to ensure accuracy and relevance.

Detecting & Handling Data Drift in Production: Data drift occurs when the data fed into machine learning models changes over time, negatively impacting their accuracy and requiring strategies for detection and correction to maintain performance.

The Pulse: why is every company launching its own coding agent?: Also: CVE program nearly axed, restored at the 11th hour, Rippling rescinds signed offer after the candidate-to-join already handed in their resignation, and more

The MCP Revolution: MCP, or Model Context Protocol, is emerging as a crucial standard for AI connectivity, enabling better interoperability among various tools and systems despite its current flaws.

AGI is Still 30 Years Away — Ege Erdil & Tamay Besiroglu: Full automation of work through AI is expected to take longer than many anticipate, potentially around 30 years, due to the need for significant advancements and scaling across various economic and technological components.

Coupling and Cohesion: The Two Principles for Effective Architecture: Coupling and cohesion are crucial principles that determine how easily software systems can evolve and be maintained, influencing everything from debugging to team collaboration.

AI Policy Primer (Issue ): This month's AI Policy Primer discusses new AI tools for error detection in scientific papers, the potential impact of AGI on the balance of power in liberal societies, and the economic effects of AI on employment in the US.

Developing Digital Disgust: The overwhelming consumption of information has led to a cultural imbalance that necessitates developing a sense of disgust towards excessive data, allowing for reflection and genuine understanding instead of mere accumulation.

Deep: AI Agents in Practice: The article explores the evolving concept of "AI agents," highlighting real-world applications, recent developments, and insights from tech leaders amidst ongoing debates about the term's definition.