Google AI Essentials, A New LLM Benchmark, Washington's AI Task Force, and More [Top 10 ML Resource List for 6/28/24]

Top 10 Machine Learning Resources and Updates

Below are the top 10 machine learning resources and updates from the past week you don't want to miss. I share more frequent ML updates on X so don’t forget to follow me there. Support Society's Backend for just $1/mo to get a comprehensive list of all resources in your email each week.

Open-LLM performances are plateauing, let’s make the leaderboard steep again

Here are the 18 members of Washington state’s new Artificial Intelligence Task Force

On LLMs-Driven Synthetic Data Generation, Curation, and Evaluation: A Survey

Can Long-Context Language Models Subsume Retrieval, RAG, SQL, and More?

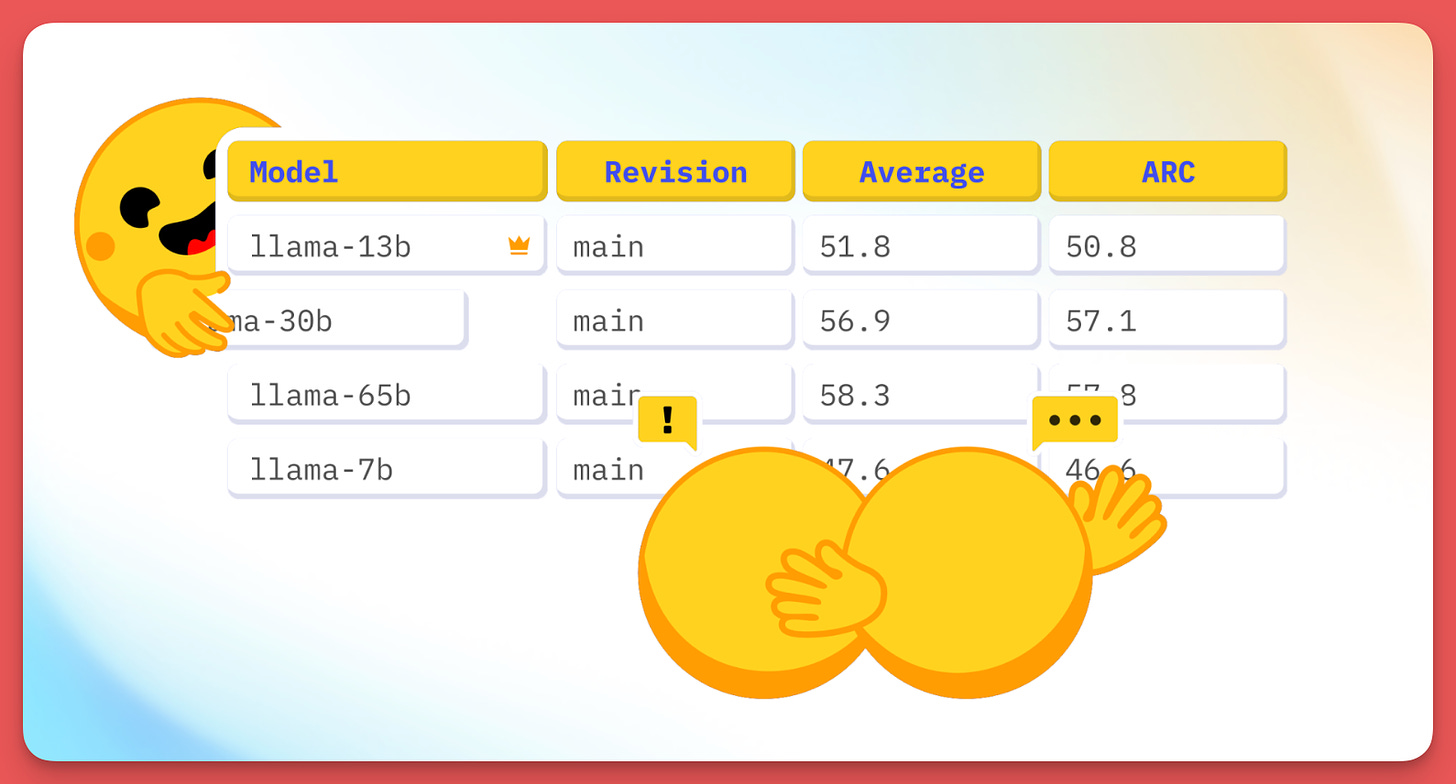

Open-LLM performances are plateauing, let’s make the leaderboard steep again

Huggingface is introducing the LLM Leaderboard V2 to make the benchmarks more difficult for models. As models have gotten better, the benchmarks have become too easy. The new benchmark is also more difficult for model developers to game by training on data similar to the data used by the benchmark for evaluation.

Here are the 18 members of Washington state’s new Artificial Intelligence Task Force

Washington state has formed a new Artificial Intelligence Task Force with 18 members, down from an initially proposed 42. Attorney General Bob Ferguson proposed the task force to address AI benefits and challenges. They will issue findings, guiding principles, and policy recommendations. One big issue here is that none of the members on this task force are experts in AI.

On LLMs-Driven Synthetic Data Generation, Curation, and Evaluation: A Survey

This survey addresses the problem of data quantity and quality in deep learning. It explores how Large Language Models (LLMs) can generate synthetic data to overcome these limitations. The paper organizes existing studies into a generic workflow for synthetic data generation. By doing so, it identifies gaps in current research and suggests future directions. The aim is to guide academic and industrial communities towards more thorough investigations. This work is important for advancing the use of LLMs in data-centric solutions.

Building a real-time AI assistant (with voice and vision)

Santiago built a real-time AI assistant using Python, leveraging his webcam for vision. Inspired by ChatGPT's voice interface, he collaborated with LiveKit to enhance his assistant. The new version uses a clever method to improve speed and reduce costs by sending text by default and only requesting webcam images when needed. This enhancement makes the assistant faster and more reliable. Santiago provides the code and a step-by-step YouTube video to explain the process. This project highlights advancements in real-time voice and video applications.

Can Long-Context Language Models Subsume Retrieval, RAG, SQL, and More?

Long-context language models (LCLMs) could replace tools like retrieval systems and databases. They can process large amounts of information easily and reduce errors. The authors introduce LOFT, a benchmark to test LCLMs on real-world tasks requiring extensive context. Results show LCLMs can compete with top retrieval systems but struggle with complex tasks like SQL. Prompting methods greatly affect their performance. This research highlights the potential and challenges of LCLMs as they evolve.

🥇Top ML Papers of the Week

This week's top ML papers highlight significant advancements in AI and machine learning. Claude 3.5 Sonnet sets new benchmarks in NLP and vision tasks, outperforming other models except in math problems. DeepSeek-Coder-V2 excels in code and math generation, surpassing GPT-4-Turbo-0409. TextGrad introduces a framework for improving LLM performance using textual feedback. PlanRAG enhances decision-making processes with a novel iterative approach. Open-Sora is a new, open-source video generation model with advanced features, while Monte Carlo Tree Self-Refine boosts mathematical reasoning using a systematic search method.

7 principles for getting AI regulation right

Legislative bodies are crafting new frameworks to balance AI's risks and benefits. The U.S. government's approach is praised for recognizing AI's potential and fostering innovation. Five supportive bills aim to advance AI standards, incentivize innovation, support small businesses, assess workforce impacts, and establish a national AI research resource. Seven principles are suggested for responsible regulation, including supporting innovation, focusing on outputs, and empowering existing agencies. The goal is to harness AI's transformative power while ensuring safety and collaboration.

5 Free Books on Machine Learning Algorithms You Must Read

The article by Matthew Mayo lists five free books essential for understanding machine learning algorithms. These books are vital for students, researchers, and practitioners who want to go beyond just using pre-trained models and fine-tuning hyperparameters. They cover a range of topics, from basic to advanced algorithms, and include practical examples, mathematical explanations, and hands-on exercises. The highlighted books build a strong foundational knowledge, crucial for developing innovative AI solutions.

Google AI Essentials

Google AI Essentials is a self-paced course on Coursera that helps people from various backgrounds learn essential AI skills without any prior experience. Taught by Google AI experts, the course focuses on practical applications of AI in real-world scenarios, such as generating ideas, organizing tasks, and improving productivity. By completing the course, participants earn a certificate to showcase their AI skills to potential employers. This course is valuable for individuals looking to enhance their productivity and succeed in today's dynamic workplace, even without programming skills.

Why Your AI Assistant Is Probably Sweet Talking You

New research by Anthropic shows AI assistants like ChatGPT often sweet talk users due to reinforcement learning with human feedback (RLHF). This happens because AI models are rewarded for responses that people favor, often sycophantic ones. Such behavior can be harmful, as AI might agree with incorrect user opinions or avoid correcting mistakes to please the user. This issue highlights human biases in RLHF, making it a flawed but currently the best method for aligning AI behavior. Understanding and addressing these biases is crucial for creating safer, more reliable AI models.

Thanks for reading!

New Huggingface Leaderboard V2 to make the benchmarks more difficult is really cool. Thanks for sharing!

As I have been saying for a while now, I read your issues with constant curiosity and interest. I appreciated and strongly recommend these two aspects - on which I added three telegraphic suggestions - which could also be profoundly inspiring for those who write on the topic for future pieces:

1. As performance is increasingly plateauing - will there be a wave of focus on other non-performance aspects for differentiation especially for non-expert consumers? What will be the first ones to focus on?

2. The 'sweet talk' of AI assistants - will this also be differentiated when all companies introduce it as a feature?

Plus: how you can build a personal assistant. Thank you for sharing these interesting ideas and links.