Backend Biweekly #3: 97 Updates and Resources

Huge Nvidia Updates, Gemini Hackathon for Money, and more

Welcome to the third edition of the Backend Biweekly! In about a month or so, this will change to weekly updates so each issue isn’t as long and the information gets to you faster. I’m also going to make video/podcast versions of these posts to make it easier for all of you to consume them however you’d like.

My approach to these updates is to include everything you should know about updates in the world of machine learning and AI. I also include the learning resources I think are worth taking a look at. Paid supporters also get:

Even more resources and updates.

A full list of all the feeds I use to navigate AI learning and updates, updated for each issue.

Direct access to my notes on each resource (coming soon, still figuring this out, but I’m really excited about this!)

You can support Society’s Backend for just $1/mo for your first year. This helps me help over 800 people understand the world of AI and machine learning engineering.

Thanks to all of you for your support!

If you want to learn machine learning from the ground up, I have a free road map to help anyone learn whatever they want about machine learning.

I’ve parsed 97 significant machine learning updates and resources for this issue and included ~40 total. Free subscribers have access to the first 20. Let’s get started!

Nvidia Announcements from GTC

Nvidia made many announcements during their GTC conference that will shape the future of AI. Rowan Cheung created an overview of all important announcements here. Here they are:

Blackwell: An AI superchip that Nvidia says reduces cost and energy consumption by up to 25x.

GR00T: A foundational model for humanoid robot learning.

Earth-2: A digital Earth twin for making weather predictions using AI.

Digital twins for warehouse operations and simulated 3D environments.

Inference Microservices (NIMs): a new approach to speed generative AI model deployment from weeks to minutes.

Gemini Hackathon with Monetary Prizes

Google has invited people to participate in a virtual hackathon where they can use generative AI to build something creative and potentially win cash prizes, including a chance to win $50,000. The hackathon is virtual and participants can work on their projects at their own pace, with the deadline being May 3rd. A Product Manager at Google has posted some suggestions for how to use Gemini in the contest here.

xAI announces Grok-1.5 coming soon

xAI recently released the weights of Grok-1 and is now set to release an improved version of Grok to X. Benchmarks show Grok-1.5 to be comparable to GPT-4 and similar models. Grok-1.5 also has a context window of 128,000 tokens, 16 times longer than Grok-1’s. This isn’t as large as other large context window models, but xAI and X are in a great position to develop an excellent LLM. They have a platform to distribute the LLM and, more importantly, training data to continually improve it that doesn’t risk lawsuits.

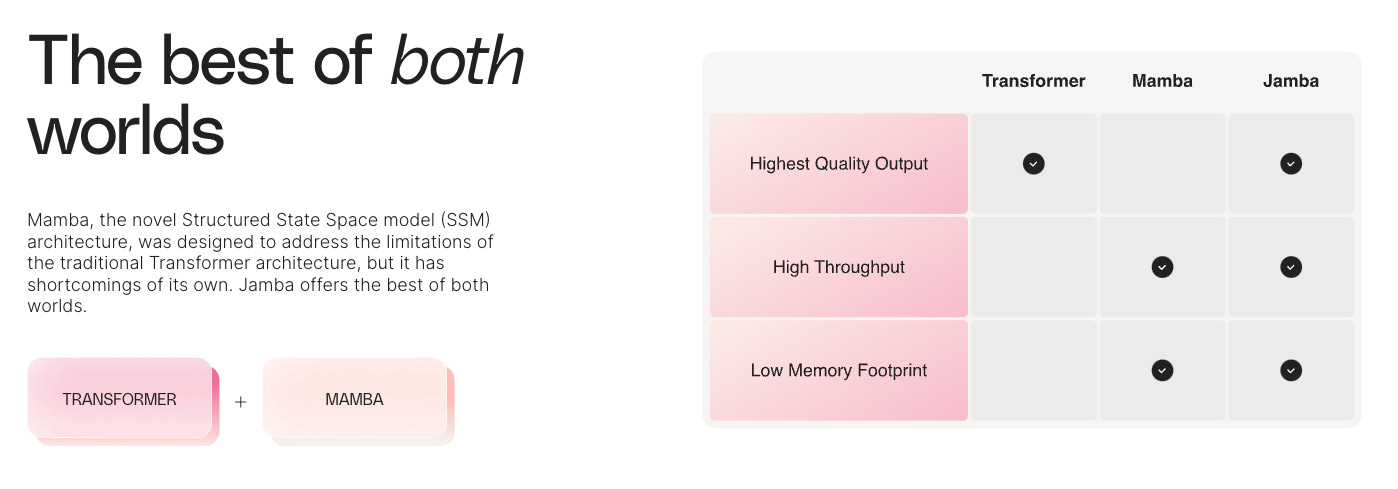

AI21 Labs Releases Jamba, an SSM-Transformer Open Model

This is the first production-grade Mamba-based model. It combines Transformer and Mamba (SSM) architectures to make up for the shortcomings of each, and it outperforms many of the other LLMs in its size class in both quality and efficiency. Mamba vs Transformer architecture was a debate for a while, but we haven’t seen Mamba architecture in a production-grade model until now. This model was released with open weights.

You can try it now on Hugging Face. Maxime Labonne tried out Jamba and shared a notebook you can use to play around with it.

01 Light: A Voice Interface for Your Home Computer

The 01 Light is a portable voice interface that controls your home computer. It can see your screen, use your apps, and learn new skills. Essentially, it’s a new way of controlling your computer and interfacing with your apps, but it can work even when you aren’t at home. It reminds me of the Rabbit R1, but implemented differently. If I understand correctly, an app will also be available. The accessibility benefits of a device like this are amazing. You can watch the video here.

Apple is Making Moves with MLX

Just like last week, here is a single update on progress made on MLX recently. It’s a huge deal to run ML models efficiently on Apple Silicon. It’s a win for all consumers when AI is made more widely available. I also read a post on X recently saying there are only a few people working on MLX, which makes the pace at which it's advancing even more incredible.

Space Force Not Doing Enough to Leverage AI

The Space Force needs to better utilize AI and machine learning tools for tasks and predictive analytics. These technologies can help with monitoring space objects and assessing Guardian readiness. The Space Force is exploring how AI can enhance training and decision-making in simulated environments.

I added this here because we don’t hear a lot about Space Force and it’s important to see government entities thinking about using AI, since they’re typically far behind in the technology department.

Introducing RewardBench: The First Benchmark for Reward Models

Nathan Lambert shares the introduction of RewardBench on X. The goal is to understand why reward models work in reinforcement learning rather than just how. The benchmark reveals insights about model behavior and the challenges in running reward models. Future work includes exploring generative models and improving training. The post links to the leaderboard, code, paper, and eval dataset.

Google presents Vid2Robot: End-to-end Video-conditioned Policy Learning with Cross-Attention Transformers

Vid2Robot is a novel end-to-end video-based learning framework that enables robots to infer and execute tasks directly from observing human demonstrations. The key innovation is the use of cross-attention mechanisms to fuse the prompt video features with the robot's current state, allowing the model to generate appropriate actions that mimic the observed task.

The framework consists of four main modules: (1) a prompt video encoder, (2) a robot state encoder, (3) a state-prompt encoder, and (4) a robot action decoder. It is trained on a large dataset of human video demonstrations and robot trajectories, with additional contrastive losses to enhance the alignment between human and robot video representations.

The paper can be found here. Example videos can be found here.
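To make the cross-attention idea above more concrete, here is a minimal sketch in plain Python. It is not Vid2Robot's implementation: the function names, the tiny two-dimensional token vectors, and the omission of learned query/key/value projections are all assumptions made for illustration. Robot state tokens act as queries, and prompt video tokens act as keys and values, so each state token ends up as a weighted mix of the video features it matches best.

```python
import math


def softmax(scores):
    """Turn raw attention scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attention(state_tokens, video_keys, video_values):
    """Scaled dot-product cross-attention (hypothetical helper).

    state_tokens: query vectors derived from the robot's current state
    video_keys / video_values: vectors derived from the prompt video
    Learned projection matrices are left out to keep the sketch short.
    """
    scale = 1.0 / math.sqrt(len(video_keys[0]))
    fused = []
    for query in state_tokens:
        weights = softmax([dot(query, key) * scale for key in video_keys])
        mixed = [
            sum(w * value[j] for w, value in zip(weights, video_values))
            for j in range(len(video_values[0]))
        ]
        fused.append(mixed)
    return fused


# Toy inputs: one robot state token attending over two prompt video tokens.
state = [[1.0, 0.0]]
video = [[1.0, 0.0], [0.0, 1.0]]
fused_state = cross_attention(state, video, video)
```

In this toy case the state token is more similar to the first video token, so the fused output leans toward that token's features. A real model would feed the fused representation into an action decoder.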

Google DeepMind Presents Long-form Factuality in LLMs

Google DeepMind proposes using large language models to evaluate long-form factuality automatically. According to their research, LLM-based raters can outperform human annotators at this task. The details are in a paper available on arXiv, with code on GitHub.

OpenAI is Testing Custom GPT Usage-Based Earnings

OpenAI is teaming up with a small group of US builders to test usage-based earnings for custom GPTs. The aim is to build a lively ecosystem that rewards builders for their creativity and impact. Collaboration with builders will help determine the most effective pathway to achieve this goal.

Databricks Launches DBRX

Databricks has launched DBRX, a new large language model (LLM) that outperforms existing open models like Llama 2, as well as GPT-3.5, on industry benchmarks for language understanding, programming, and math. DBRX sets a new standard for open LLMs, enabling customizable and transparent generative AI for enterprises. It was trained in just 2 months for only $10 million. DBRX is freely available on GitHub and Hugging Face for research and commercial use, and is also available on major cloud platforms like AWS, Google Cloud, and Microsoft Azure through Azure Databricks.

Nathan Lambert shared the DBRX system prompt here.

Blurring What is Real and What Is AI

A convincingly lifelike video of a person talking about a product spread on X, where it was initially claimed to be AI-generated. While impressive, the technology wasn’t the reason the video spread. It spread because nobody could figure out whether it was created by AI or not. It then turned out that this person is actually on Fiverr, where they’re paid to make videos like this. Originally this was seen as debunking the AI theory, but HeyGen claims the video was made with their AI technology using the likeness of the individual on Fiverr. This shows how difficult it is becoming to tell what is real from what is AI-generated, and how that’s further complicated when AI uses the likeness of a real person.

Google is Using AI to Reliably Forecast Flooding at a Global Scale

People rely on Google for crucial information during crises, and floods pose significant risks globally, affecting billions of people and causing substantial economic damage. Historically, accurate flood forecasting was challenging due to a lack of data and resources, particularly in developing countries. However, a recent Nature paper reveals how AI has revolutionized flood forecasting, providing more accurate information up to 7 days in advance for 80 countries, benefiting 460 million people. By leveraging AI and expanding global forecasting capabilities, Google has made significant strides in improving early warning systems and assisting vulnerable populations in taking preemptive action against floods.

Apple is Bringing AI to iPhones

It hasn’t been a secret that Apple wants to bring LLMs to the iPhone, but surprisingly it’s been revealed that they are talking with other companies about possibly licensing their LLMs. Apple usually takes full control over all their products and has the capability to do most things in-house. It’s possible these discussions are occurring because Apple doesn’t feel it’s ready to put their own LLM on the iPhone. It’s also possible Apple uses their own LLM on-device and lets another LLM handle external requests. This would adhere to Apple’s privacy model and relieve them of the difficulties of maintaining a large LLM. They’re reportedly in talks with Google, OpenAI, and Anthropic.

Devika: Open-Source Software Engineer AI Devin Alternative

I’ve written about Devin and its impact already if you’d like to read more about it. Devika is an Agentic AI Software Engineer that can understand high-level human instructions, break them down into steps, research relevant information, and write code to achieve the given objective. Devika aims to be a competitive open-source alternative to Devin by Cognition AI. For the use-case of creating an AI coworker, an open-source product will likely be more comfortable for many people. Check out Mervin’s video to learn more about Devika.

Hume AI releases an empathic AI voice

This is the first emotionally intelligent voice-to-voice AI. Not only does it chat with you, it also analyzes the way you’re saying things to understand how you’re feeling and better understand what you’re asking it. This is a must for any highly intelligent voice assistant in the future. You can demo it now.

Apple Presents MM1

Apple presents MM1, a family of multimodal LLMs. The paper discusses the benefits of large-scale pre-training for the MM1 model, focusing on properties such as enhanced in-context learning, multi-image reasoning, and few-shot chain-of-thought prompting. The MM1 model outperforms other models on captioning and visual question answering tasks in both small and large size regimes. The paper also details the model architecture, pre-training data, final model configurations, and training recipes for MM1. Additionally, it covers experiments with different data types, model ablations, and evaluation benchmarks. The paper emphasizes the importance of multimodal pre-training for improved performance across various benchmarks and tasks.

Free Generative AI Course: Nvidia Generative AI Foundations

Nvidia’s free course collection covers topics including generative AI, computer vision, and retrieval-augmented generation (RAG). The courses include explanations of generative AI, building neural networks, working with AI on Jetson Nano, video AI applications, RAG models, LLMs, and accelerating data science workflows with GPUs. Each course offers specific learning objectives and practical applications in the field of artificial intelligence.

Thanks to Akshay for sharing this on X!

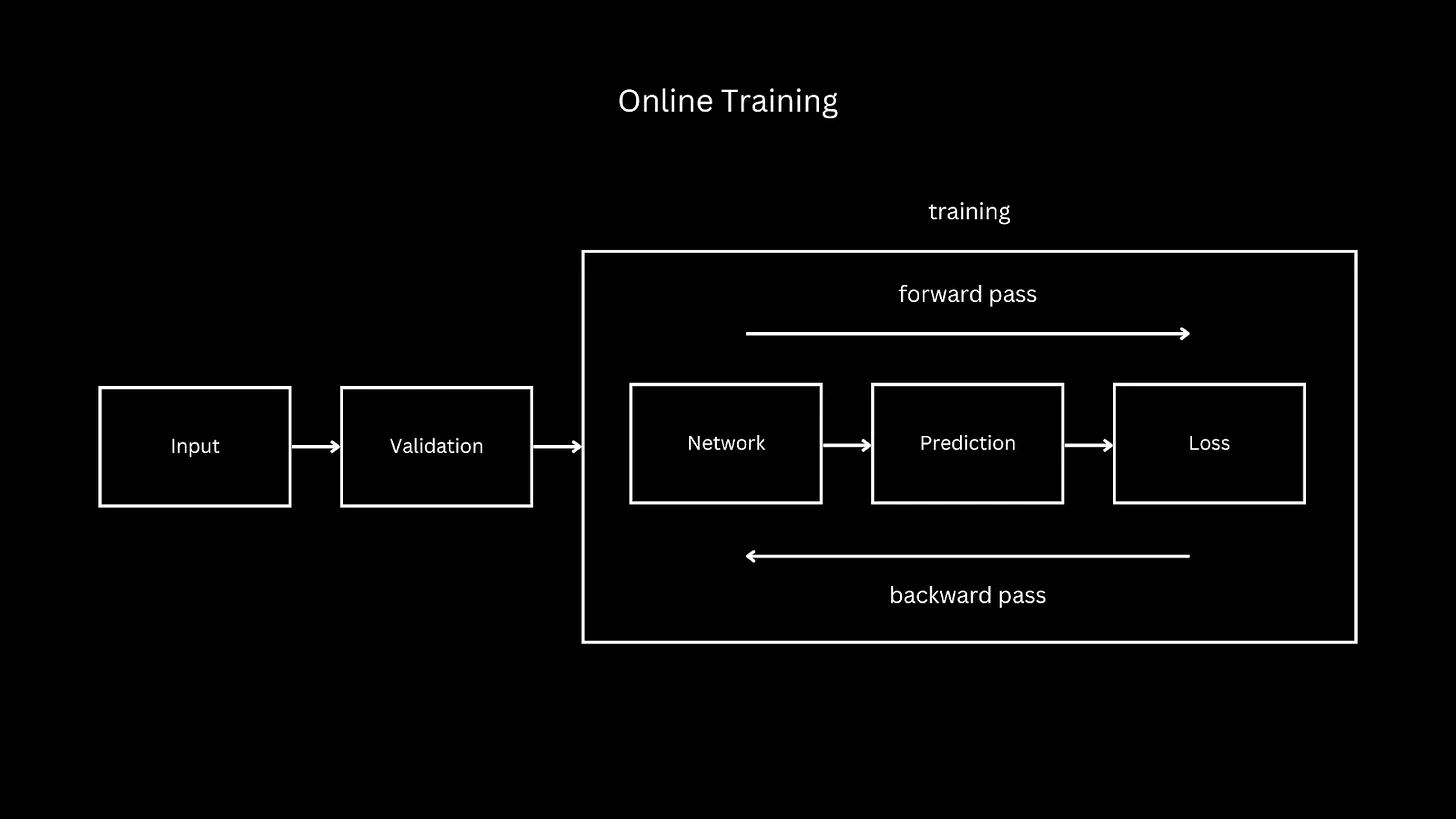

Online vs Offline Training for Machine Learning

This is a guest post I wrote for Devansh. Machine learning engineering is an underappreciated part of AI but is incredibly important for making AI advancements practical and usable. In this article, I discuss the considerations that go into online machine learning systems and how they differ from offline (batch) training systems.
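As a rough illustration of the difference, here is a toy sketch in plain Python. It is not taken from the guest post: the one-parameter model `y = w * x`, the helper names, and the learning rate are all assumptions chosen to keep the example small. The offline version sees the full dataset and fits once, while the online version updates its weight one example at a time as data streams in.

```python
def fit_offline(xs, ys):
    """Offline (batch) training: use the whole dataset to fit w in one shot.

    Closed-form least squares for the model y = w * x.
    """
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


class OnlineModel:
    """Online training: adjust w a little after every incoming example."""

    def __init__(self, learning_rate=0.05):
        self.w = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.w * x

    def update(self, x, y):
        # One stochastic gradient step on the squared error for this example.
        error = y - self.predict(x)
        self.w += self.learning_rate * error * x


# Toy data generated by y = 2 * x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

w_offline = fit_offline(xs, ys)

model = OnlineModel()
for _ in range(50):  # simulate a stream by replaying the examples
    for x, y in zip(xs, ys):
        model.update(x, y)
```

Both approaches land on roughly the same weight here. The practical differences show up in a real system: the online model can adapt as new data arrives but needs careful handling of learning rates, data ordering, and monitoring, while the offline model is simpler to reproduce but goes stale between retrains.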
