Backend Biweekly #2: 112 Total Updates and Resources

Details on Meta's training clusters, analysis of ML competitions in 2023, AI's impact on water resources, and more

Welcome to the second edition of the Backend Biweekly! This is where I share the most important machine learning updates and learning resources of the past two weeks. Thank you to everyone supporting Society’s Backend. If you’d like to support Society’s Backend, you can do so for $1/mo for your first year:

I went through 112 saved ML resources and updates for this edition. By combining related items and filtering out the less important ones, I got the article down to ~36 total resources and updates. In the future, I’m going to publish these weekly so I can go through fewer resources at a time and get them to you sooner.

A few changes to this update since last time:

Resources are now linked from their titles.

Fewer images and some AI-generated summaries to help me get through everything. Please give me feedback regarding the format.

I’ve tried to make things more concise and easily skimmable. I recognize there are a lot of resources and I want to make it easy for you to find those most useful for you.

12 of the resources and updates in this edition are free, and the rest are available only to Society’s Backend supporters. Let’s get started!

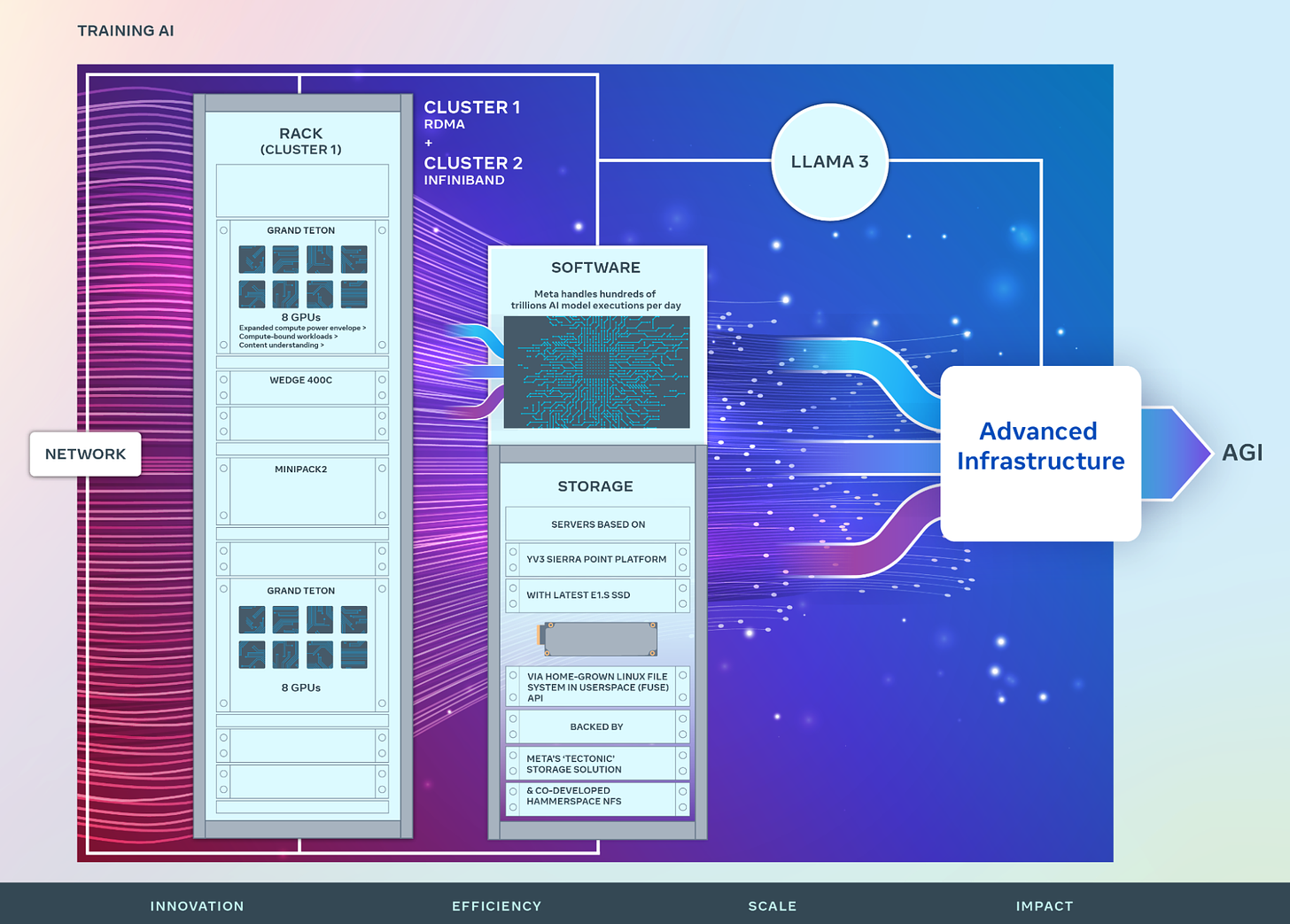

Details on Meta's 24k H100 Cluster Pods in Use for Llama3 Training

@soumithchintala (a PyTorch lead at Meta) shares information about Meta’s Llama3 training. Some details are:

Network: two versions, RoCEv2 or InfiniBand. Llama3 trains on RoCEv2.

Storage: NFS/FUSE based on Tectonic/Hammerspace

Stock PyTorch: no real modifications that aren't upstreamed

NCCL with some patches: the patches, along with switch optimizations, get the cluster to a pretty high network bandwidth realization

Various debug and fleet monitoring tooling, stuff like NCCL desync debug, memory row remapping detection etc.

Full information can be found here.

Analysis of 300+ ML Competitions in 2023

A Reddit user analyzed over 300 machine learning competitions in 2023 and found some interesting metrics. Here are a few:

Almost all winners use Python.

92% of deep learning solutions used PyTorch.

CNN-based models won more computer vision competitions than Transformer-based ones.

This is a super interesting read. Full information can be found here.

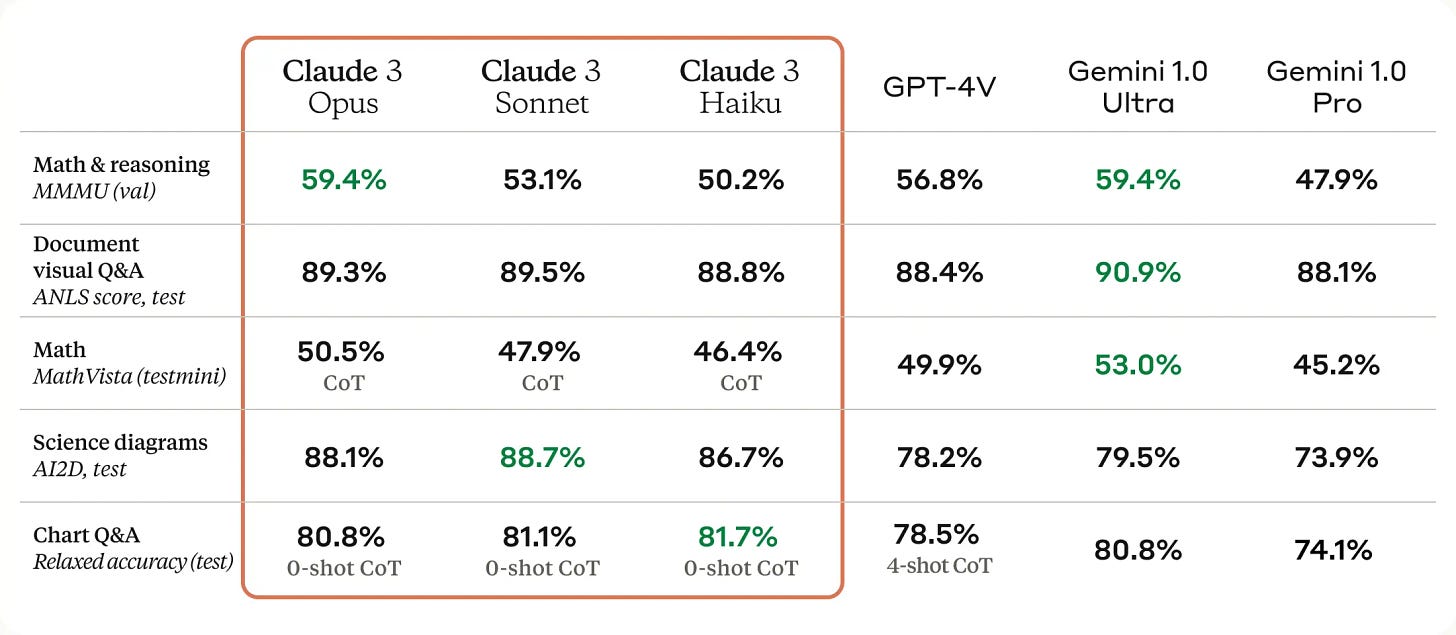

Anthropic Announces Claude 3

Anthropic has just unveiled Claude 3, which includes three models: Claude 3 Haiku, Sonnet, and Opus. Claude 3 Opus, the most powerful model, appears to outperform GPT-4 on benchmarks like MMLU and HumanEval. The capabilities of Claude 3 encompass analysis, forecasting, content creation, code generation, and multilingual conversation. These models support 200K-token context windows, which can be extended to 1M tokens for select customers, and have strong vision capabilities for processing various visual formats.

This is a big deal because there have been reports of Claude 3 generally performing better than GPT-4 and being the new benchmark for LLM performance.

AI Depletes Water Sources

The use of artificial intelligence (AI) and the operation of data centers are having an impact on water resources in Arizona, particularly because of the data centers' cooling needs. Microsoft, which has invested heavily in OpenAI, operates numerous data centers worldwide and is projected to use significant amounts of water in its Arizona facilities. For example, one report indicates that a Microsoft data center complex in Arizona is expected to use about 56 million gallons of drinking water each year, which is equivalent to the water usage of approximately 670 families.

It's important to note that AI is also being used to improve water management and conservation in Arizona. For instance, the city of Phoenix has launched a wastewater treatment pilot program with AI company Kando to monitor wastewater and detect irregularities, which can help prevent damage to wastewater plants and pipes.
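To put the Microsoft figures above in perspective, here is a quick back-of-the-envelope calculation. It uses only the two numbers quoted from the report (56 million gallons and 670 families); the function name `per_family_usage` and the 365-day year are my own assumptions for illustration.

```python
# Figures quoted from the report above.
GALLONS_PER_YEAR = 56_000_000  # projected annual drinking-water use
FAMILIES = 670                 # number of families with equivalent usage


def per_family_usage(total_gallons: float, families: int) -> tuple[float, float]:
    """Return (gallons per family per year, gallons per family per day)."""
    per_year = total_gallons / families
    per_day = per_year / 365  # assumption: a 365-day year
    return per_year, per_day


per_year, per_day = per_family_usage(GALLONS_PER_YEAR, FAMILIES)
print(f"{per_year:,.0f} gallons per family per year")
print(f"{per_day:,.0f} gallons per family per day")
```

In other words, the comparison implies roughly 83,600 gallons per family per year, or about 229 gallons per family per day.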

EMO: Emote Portrait Alive

The EMO framework aims to generate expressive portrait videos driven by audio input, creating vocal avatar videos with dynamic facial expressions and head poses. By utilizing a two-stage process involving Frames Encoding and the Diffusion Process, the method integrates audio and visual elements to produce lifelike character animations with attention mechanisms for identity preservation and motion modulation. This approach supports various languages and diverse portrait styles, and it can animate characters from different eras or mediums, expanding possibilities for multilingual and multicultural character portrayal in videos.

Open-Source Video Generation

Open-Sora is an intriguing open-source library for video generation that is powered by Colossal-AI, offering features like dynamic resolution, various model structures, multiple video compression methods, and parallel training optimization. It allows users to build their own video generation model akin to Sora, providing cost-effective implementation and support for significantly longer sequences. The project aims to make video generation more accessible and affordable through open-source collaboration.

OpenAI and Figure Partner To Make AI Robots

OpenAI and Figure are collaborating to advance robot learning by developing next-generation AI models for humanoid robots. Basically, OpenAI is providing the language model and Figure is providing the neural network for robot movements. A common critique of OpenAI's path to AGI is that a lot of learning comes from interacting with an environment, and LLMs alone can’t recreate that. Robots can. The full announcement can be read here with interesting videos and a link to jobs with Figure AI. There is also a more technical deep dive into this partnership here.

10 Techniques Every Machine Learning Engineer Should Know

@svpino shares 10 techniques every machine learning engineer should know on X. Here’s the list:

Active learning

Distributed training

Error analysis

Invariance tests

Two-phase predictions

Cost-sensitive deployments

Human-in-the-loop workflows

Model compression

Testing in production

Continual learning

More information about this and the course he teaches on machine learning engineering can be found here.
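As one illustration of an item on the list above, an invariance test checks that a model's prediction does not change when the input is altered in a way that should be irrelevant, such as swapping a person's name. The sketch below is not taken from @svpino's post. The keyword-based classifier `predict_sentiment` and the helper `invariance_failures` are hypothetical stand-ins for a real trained model and a real test harness.

```python
import re

# Hypothetical toy model: a keyword-based sentiment classifier that stands in
# for a real trained model.
POSITIVE = {"great", "love", "excellent"}
NEGATIVE = {"terrible", "hate", "awful"}


def predict_sentiment(text: str) -> str:
    """Classify text as positive, negative, or neutral by counting keywords."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def invariance_failures(predict, template: str, substitutions: list[str]) -> list[str]:
    """Return the substitutions whose prediction differs from the first one.

    The template must contain a `{name}` placeholder. An empty result means
    the prediction was invariant to the substitution.
    """
    baseline = predict(template.format(name=substitutions[0]))
    return [
        s for s in substitutions[1:]
        if predict(template.format(name=s)) != baseline
    ]


failures = invariance_failures(
    predict_sentiment,
    "{name} said the service was great.",
    ["Alice", "Bob", "Priya"],
)
print(failures)  # an empty list means the model passed this invariance test
```

With a real model, the same pattern applies: keep a set of templates and substitutions that should not affect the output, and treat any non-empty result as a bug to investigate.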

Adobe Reveals Generative AI Tool for Music

Adobe has unveiled Project Music GenAI Control, an AI tool for music creation and editing. This generative AI tool allows users to generate music from text descriptions or reference melodies, offering extensive editing capabilities within the same workflow. Users can input prompts like 'happy dance' or 'sad jazz,' customize the music by adjusting tempo and structure, and even remix sections or create loops. Developed with researchers from the University of California and Carnegie Mellon University, this tool provides a more integrated and user-friendly experience and emphasizes ethical considerations by training on licensed data to avoid intellectual property issues. Adobe's effort marks a notable advance in AI-powered music creation, catering to both professionals and beginners.

Elon Musk Sues OpenAI

Elon Musk has filed a lawsuit against OpenAI, the artificial intelligence research company he helped found, and its CEO Sam Altman. The lawsuit alleges that OpenAI has breached its founding principles by prioritizing profit over the benefit of humanity. Musk claims that OpenAI's partnership with Microsoft, which resulted in a multibillion-dollar alliance, represents a shift from the company's original mission of developing open-source AI technology for the public good.

OpenAI has responded to Elon Musk’s claims by stating that it intends to move to dismiss all of them. In a detailed blog post, OpenAI outlines its perspective on the relationship with Musk and the progression of the company's mission. The post reveals that Musk had proposed merging OpenAI with Tesla and sought full control, including majority equity, initial board control, and the CEO position. OpenAI declined these terms, feeling it would be against its mission for any individual to have absolute control over the company.

Brave's Leo AI Assistant

Brave's Leo AI assistant is an AI-powered feature integrated into the Brave browser, designed to enhance user interaction with web content through a variety of tasks while prioritizing user privacy. Leo allows users to ask questions, summarize webpages, create content, translate languages, and transcribe audio and video, all within the browser interface.

Leo operates without requiring users to create an account or log in, ensuring privacy and anonymity. The AI assistant does not record or share chats, nor does it use conversations for model training. All interactions with Leo are private, with inputs submitted anonymously through a reverse proxy, and responses are discarded immediately after generation.

February 2024: AI Research Recap

@rasbt on X shares a research recap for February 2024, which was a huge month in AI research. Here’s a quick summary:

Research papers in February 2024 cover topics such as a LoRA successor, comparisons between small finetuned LLMs and generalist LLMs, and transparent LLM research. Notable mentions include the introduction of OLMo, an open-source LLM, and Gemma, a suite of LLMs by Google. The papers delve into the performance of small LLMs, innovative finetuning techniques, and comparisons with existing models. Additionally, research on scaling factors, model architectures, and efficient training methods for LLMs is discussed, providing valuable insights for the research community.

The list of all resources is only available to supporters. You can support Society’s Backend for only $1/mo:
