OpenAI's o1, Model Merging, California Approves AI Regulation, and More

Machine learning resources and updates 2024-09-17

Here are the most important machine learning resources and updates from the past week. Follow me on X and/or LinkedIn for more frequent posts and updates. You can find last week's updates here:

Support the Society's Backend community for just $1/mo to get the full list each week. Society's Backend is reader-supported. Thanks to all paying subscribers! 😊

Reverse engineering OpenAI’s o1

Nathan Lambert's article analyzes OpenAI's new reasoning system, o1, which he presents as the outcome of the company's long-rumored reinforcement learning (RL) efforts, code-named Q* and Strawberry. o1 is trained to produce long chains of reasoning and, by Lambert's reading, relies on a search-based RL algorithm, while OpenAI itself says it is still exploring the model's full capabilities. Performance improves steadily as both training-time (RL) compute and test-time compute increase, which suggests room for further gains.

o1 matters because it points to a second way to scale AI systems: instead of relying only on bigger pretraining runs, a model can spend more compute at inference time reasoning through hard problems.
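OpenAI has not published how o1 spends its test-time compute, and Lambert's analysis is explicitly speculative. As a much simpler illustration of why extra inference-time compute can raise accuracy, the sketch below uses a swapped-in technique, majority voting over independent samples (self-consistency), not o1's method. It assumes a binary setting: each sample is independently correct with probability `p`, every wrong sample gives the same wrong answer, and the number of samples `n` is odd.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a majority of n independent samples is correct.

    Assumes one right answer and one wrong answer, per-sample accuracy p,
    and an odd n so that ties cannot occur.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    need = n // 2 + 1  # smallest number of correct votes that forms a majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


if __name__ == "__main__":
    # More samples means more test-time compute; accuracy rises when p > 0.5.
    for n in (1, 3, 5, 9, 17):
        print(n, round(majority_vote_accuracy(0.6, n), 3))
```

If `p` is below 0.5, the same formula shows voting lowering accuracy, so in this binary setting extra samples only help when the underlying sampler is already better than chance.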

Model Merging: A Survey

Model merging combines the parameters of multiple models into a single model and has gained significant traction in recent years, particularly for large language models (LLMs). It can improve performance without additional training: the simplest methods average or interpolate weights, model soups average several fine-tuned variants of the same base model, and methods such as TIES-Merging and DARE reduce interference between the task-specific updates being combined.

Model merging matters because it can combine the strengths of several fine-tuned models into one without paying for additional training or for serving several models at once, which is especially valuable for LLMs, where each fine-tuning run and each deployed copy is expensive.

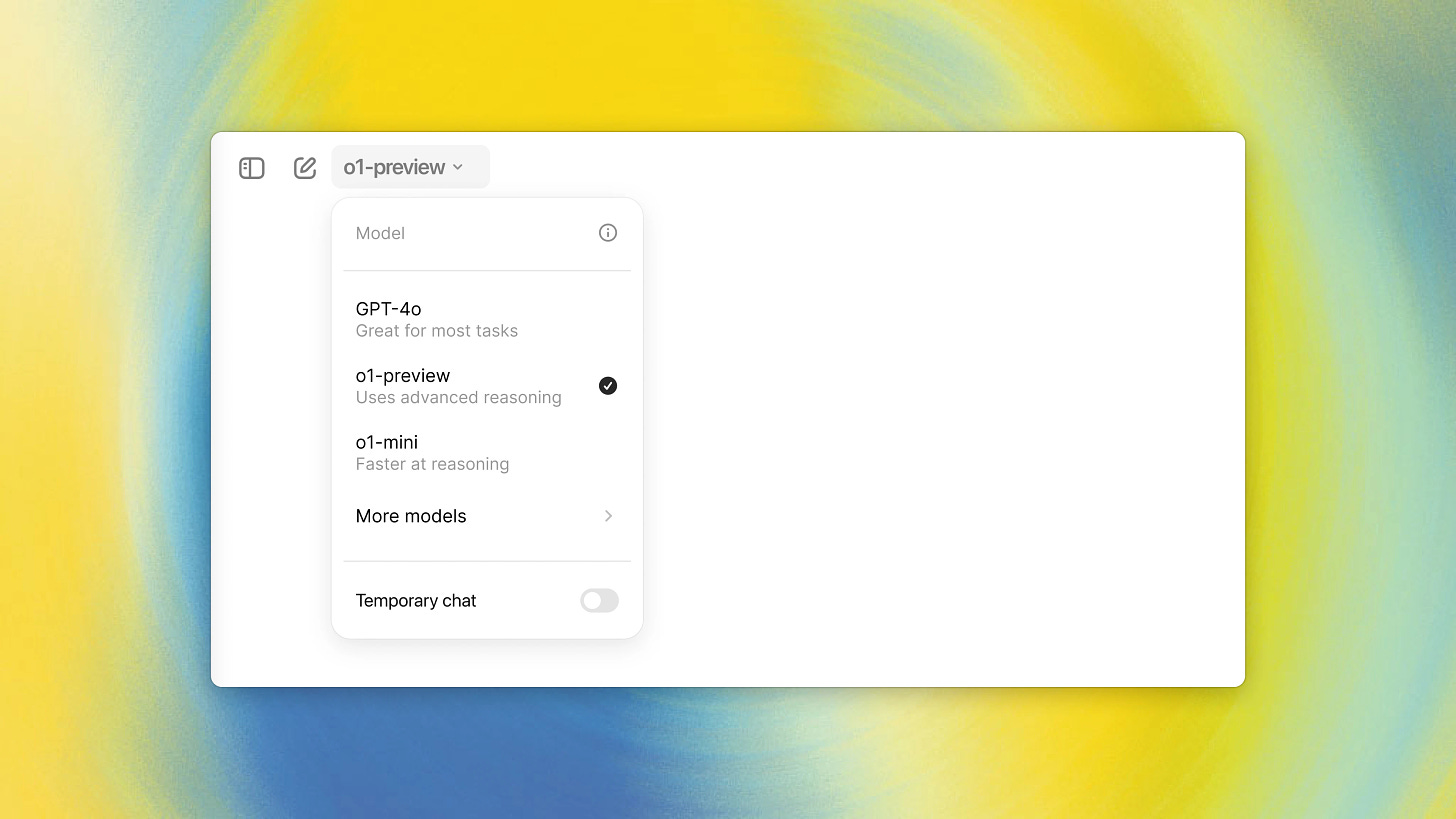

Introducing OpenAI o1-preview

OpenAI has introduced a new series of AI models, OpenAI o1, designed to spend more time reasoning before they respond, with a focus on complex problems in science, coding, and math. The initial release is o1-preview. OpenAI's headline results come from the next model in the series rather than the preview: it scored 83% on a qualifying exam for the International Mathematics Olympiad (versus 13% for GPT-4o) and reached the 89th percentile in Codeforces competitions. OpenAI also reports that the new models follow its safety guidelines more reliably than previous models, including resisting jailbreak attempts better.

If these results hold up in practice, o1 could give researchers and engineers a more capable, and safer, tool for hard problems in science, coding, and math.

DeepSeek-V2.5 wins praise as the new, true open source AI model leader

DeepSeek-V2.5, developed by DeepSeek, an AI offshoot of High-Flyer Capital Management, is an open-source model that combines the general chat and coding capabilities of its predecessors, DeepSeek-V2-Chat and DeepSeek-Coder-V2-Instruct. It has been praised for outperforming those predecessors and other models on a range of benchmarks, and it is available on Hugging Face under a permissive license that allows both research and commercial use.

DeepSeek-V2.5 matters because openly licensed weights let developers and businesses run, inspect, and fine-tune a leading model themselves for a wide range of applications, rather than depending on a closed API.

California Legislature Approves Bill Proposing Sweeping A.I. Restrictions

California lawmakers have passed SB 1047, a bill that would impose strict regulations on artificial intelligence: it would require developers of major AI systems to test them for safety before public release and would give the state attorney general the authority to sue AI developers for serious harms. The bill now awaits Governor Gavin Newsom's decision amid heated debate, with tech industry leaders urging a veto and proponents arguing that safety measures are needed to prevent catastrophic misuse of AI.

If enacted, this legislation could set a precedent for AI regulation in the United States, potentially influencing national and global standards.

🥇Top ML Papers of the Week

The article highlights ten notable machine learning papers of the week. They include a new family of large language models (LLMs) trained with reinforcement learning to reason, a multi-modal model for molecular structure prediction, and a study of how novel LLM-generated research ideas are. It also covers DataGemma, which grounds LLM responses in statistical data; Agent Workflow Memory, which lets agents reuse workflows learned from past tasks; and FlashSigmoid, an efficient implementation of attention that replaces softmax with a sigmoid.

Together, these papers show recent progress in both research and practical applications, with potential impact on drug discovery, scientific research, and the efficiency of AI systems.

Rethinking LLM Memorization

Avi Schwarzschild's blog post examines memorization in large language models (LLMs) and proposes a new definition based on compression, the Adversarial Compression Ratio (ACR). Under this definition, a string is memorized if the model can be made to reproduce it exactly from a prompt that is shorter, in tokens, than the string itself. The result is an intuitive measure for both functional and legal analysis.

Understanding and accurately defining memorization in LLMs is crucial for addressing legal questions about the fair use of training data and ensuring models do not violate copyright laws.
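The compression-based definition can be made concrete with a toy example. This is not the post's procedure for finding prompts, which runs adversarial prompt optimization against a real LLM. Instead, the sketch assumes a hypothetical `toy_generate` function standing in for a model's greedy output, brute-forces the shortest eliciting prompt over a tiny vocabulary, and reports the ratio of target length to prompt length, treating a ratio above 1 as memorized. Counting words as tokens is another simplifying assumption.

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple

Tokens = Tuple[str, ...]

MEMORIZED_LINE: Tokens = ("to", "be", "or", "not", "to", "be")
VOCAB = ["hamlet", "quote", "to", "be", "or", "not"]


def toy_generate(prompt: Tokens) -> Tokens:
    """Hypothetical stand-in for an LLM's greedy output."""
    if prompt == ("hamlet", "quote"):
        return MEMORIZED_LINE  # a short trigger reproduces a longer string
    return prompt  # otherwise the toy model simply echoes its prompt


def shortest_prompt(generate: Callable[[Tokens], Tokens], target: Tokens,
                    vocab: Sequence[str], max_len: int) -> Optional[Tokens]:
    """Exhaustively try prompts in order of increasing length."""
    for length in range(1, max_len + 1):
        for prompt in product(vocab, repeat=length):
            if generate(prompt) == target:
                return prompt
    return None


def compression_ratio(generate: Callable[[Tokens], Tokens], target: Tokens,
                      vocab: Sequence[str], max_len: int) -> float:
    """len(target) / len(shortest eliciting prompt); 0.0 if none is found."""
    prompt = shortest_prompt(generate, target, vocab, max_len)
    return 0.0 if prompt is None else len(target) / len(prompt)


if __name__ == "__main__":
    for target in (MEMORIZED_LINE, ("not", "to")):
        ratio = compression_ratio(toy_generate, target, VOCAB, max_len=3)
        print(target, ratio, "memorized" if ratio > 1 else "not memorized")
```

The echoed string scores exactly 1.0 and so does not count as memorized: reproducing text by pasting it into the prompt is not compression. Exhaustive search is only feasible at this toy scale.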

Our latest advances in robot dexterity

Google DeepMind's recent research introduced two AI systems, ALOHA Unleashed and DemoStart, which enhance robots' ability to perform complex, dexterous tasks. ALOHA Unleashed enables bi-arm robots to execute intricate actions like tying shoelaces and repairing other robots, while DemoStart uses reinforcement learning in simulations to teach multi-fingered robotic hands to perform tasks with high precision.

These advancements in robot dexterity are significant because they pave the way for more useful and versatile robotic assistants in everyday life and various industries.

DataGemma: Using real-world data to address AI hallucinations

Large language models (LLMs) are powerful but often state inaccurate information, a phenomenon known as "hallucination." Google's new DataGemma models aim to reduce this by grounding LLM output in real-world statistics from Google's Data Commons. They use two approaches: RIG (Retrieval-Interleaved Generation), in which the model generates queries to Data Commons alongside the statistics it writes so those numbers can be checked against retrieved values, and RAG (Retrieval-Augmented Generation), in which relevant data is retrieved before generation and added to the model's prompt.

Grounding AI in reliable, real-world data is crucial for improving the trustworthiness and practical utility of generative AI across various applications.
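As a rough sketch of the Retrieval-Interleaved Generation idea, assume the fine-tuned model emits each statistic together with a natural-language query, in a hypothetical marker format `[DC(query) -> guessed value]`. A post-processing step then looks each query up in a data source and swaps in the retrieved value. The marker syntax, the fictional town, and the in-memory `STATS` table with placeholder numbers are illustrative stand-ins for DataGemma's actual output format and the Data Commons API.

```python
import re
from typing import Dict

# Hypothetical stand-in for a statistical data source such as Data Commons.
# The place and numbers are placeholders, not real statistics.
STATS: Dict[str, str] = {
    "population of exampleville": "12,345",
    "median age in exampleville": "41.2",
}

# Hypothetical marker format: [DC(<query>) -> <value guessed by the model>]
MARKER = re.compile(r"\[DC\((?P<query>[^)]*)\)\s*->\s*(?P<guess>[^\]]*)\]")


def ground_statistics(draft: str, stats: Dict[str, str]) -> str:
    """Replace each model-guessed statistic with the retrieved value when one exists."""

    def substitute(match: re.Match) -> str:
        retrieved = stats.get(match.group("query").strip().lower())
        # Fall back to the model's own guess when the lookup fails; a real
        # system might instead flag the number as unverified.
        return retrieved if retrieved is not None else match.group("guess").strip()

    return MARKER.sub(substitute, draft)


draft = "Exampleville has [DC(population of Exampleville) -> 15,000] residents."
print(ground_statistics(draft, STATS))  # prints: Exampleville has 12,345 residents.
```

RAG works the other way around: the relevant tables are retrieved before generation and placed in the prompt, so the model writes its answer with the data already in context.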

Launch HN: Deepsilicon (YC S24) – Software and hardware for ternary transformers

Deepsilicon, founded by Abhi and Alex, is building software and hardware to train and run ternary transformer models, whose weights take only the values -1, 0, and +1. This yields an 8x compression ratio (consistent with storing each weight in 2 bits instead of 16) and reduces matrix multiplication to additions and subtractions. Current hardware is not optimized for these operations, which is why the company is designing custom silicon to realize the full benefits.

The potential payoff is faster, cheaper, and more efficient transformer inference, making advanced AI more accessible and practical for both edge and cloud applications.
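To show where an 8x figure and the simpler arithmetic can come from, here is a minimal sketch. It assumes the absmean ternarization scheme popularized by BitNet b1.58 (the Launch HN post does not say which scheme Deepsilicon uses), packs four 2-bit ternary codes per byte (2 bits versus 16 for an FP16 weight is the 8x), and computes a dot product using only additions and subtractions plus one final scale. All function names are illustrative.

```python
from typing import List, Tuple


def ternarize(weights: List[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Absmean ternarization (BitNet b1.58 style, assumed here).

    Divides weights by their mean absolute value, rounds, and clips to {-1, 0, +1}.
    Returns the codes and the scale that maps codes back to approximate weights.
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    codes = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return codes, scale


def pack_2bit(codes: List[int]) -> bytes:
    """Store four ternary codes per byte, mapping -1/0/+1 to 0/1/2."""
    packed = bytearray()
    for start in range(0, len(codes), 4):
        byte = 0
        for slot, code in enumerate(codes[start:start + 4]):
            byte |= (code + 1) << (2 * slot)
        packed.append(byte)
    return bytes(packed)


def unpack_2bit(packed: bytes, count: int) -> List[int]:
    """Inverse of pack_2bit; count drops padding slots in the last byte."""
    codes = []
    for byte in packed:
        for slot in range(4):
            codes.append(((byte >> (2 * slot)) & 0b11) - 1)
    return codes[:count]


def ternary_dot(codes: List[int], x: List[float], scale: float) -> float:
    """Dot product with no per-weight multiplications: add, subtract, or skip."""
    acc = 0.0
    for code, xi in zip(codes, x):
        if code == 1:
            acc += xi
        elif code == -1:
            acc -= xi
    return acc * scale  # a single multiply restores the original magnitude


if __name__ == "__main__":
    w = [0.9, -1.1, 0.05, 0.4, -0.6, 1.2, 0.0, -0.02]
    codes, scale = ternarize(w)
    packed = pack_2bit(codes)
    print(codes, len(packed), "bytes vs", 2 * len(w), "bytes in FP16")
    print(ternary_dot(codes, [1.0] * len(w), scale))
```

On general-purpose hardware, a branch per weight is slower than a fused multiply-add, which is the gap the post says custom silicon is meant to close.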

It should have been an app

The team behind Open Interpreter has decided to stop manufacturing its 01 Light hardware, citing its limited hardware experience and the realization that modern smartphones already offer more value. It is refocusing on the 01 App, which provides the same functionality on the phones users already own, improving the user experience and accessibility. The company will also support the creation of affordable, open-source hardware through partnerships and community efforts.

The decision marks a strategic pivot toward software, which should let the team concentrate its resources and reach more users.

Can LLMs Generate Novel Research Ideas? A Large-Scale Human Study with 100+ NLP Researchers

The study asks whether large language models (LLMs) can generate novel research ideas by comparing an LLM ideation agent against human experts. More than 100 NLP researchers took part, writing ideas of their own and blindly reviewing both human- and LLM-generated ideas. Reviewers judged the LLM-generated ideas as more novel than the human experts' ideas, though slightly weaker on feasibility. The authors also identify open problems, including the limited diversity of LLM-generated ideas and the unreliability of LLMs as evaluators of ideas.

This research is significant as it highlights the current capabilities and limitations of LLMs in high-stakes tasks like research ideation, pointing to areas for future improvement.
