Resources to Get a Job in ML, Post-Transformer Architectures, What's in Store for 2025, and More

Society's Backend Reading List 12-30-2024

Here's a comprehensive AI reading list from this past week. Thanks to all the incredible authors for creating these helpful articles and learning resources.

This one is particularly important so the full reading list is available to ALL subscribers. There are many great resources for getting a job in ML and a lot of great information about AI in 2024 and what’s in store for 2025.

Society's Backend is reader-supported. You can support my work (these reading lists and standalone articles) for 80% off for the first year (just $1/mo). You'll also get the extended reading list each week.

A huge thanks to all supporters. 🙂

What Happened Last Week

The most important event from this past week was the release of DeepSeek-V3, an open model that competes with OpenAI’s strongest models while being trained on 10x less compute. This shows what’s possible when we don’t just try to scale up resources, but instead try to improve efficiency. I’ve been saying efficiency will be the focus of AI in 2025 and I stand by it. I have another article coming out later this week with more detail about it.

Other important happenings this past week were the release of year-end AI recaps and 2025 AI predictions. Check these out:

Last Week's Reading List

In case you missed it, here are some highlights from last week:

Reading List

321 real-world gen AI use cases from the world's leading organizations

Organizations are increasingly using generative AI to enhance processes in areas like customer service, employee empowerment, and data analysis. Companies such as ADT, Alaska Airlines, and Best Buy are developing AI solutions to improve customer experiences and operational efficiency. Google Cloud technologies, including Vertex AI and Gemini, are central to these innovations across various industries.

Competitive Programming Changed My Life Forever

Competitive programming transformed Alberto Gonzalez's life by enhancing his problem-solving skills and leading him to a top-tier software engineering job in Sweden. He leveraged his expertise to earn income through writing, launching a newsletter, publishing a book, and offering coaching. Alberto encourages others to harness their skills and seize opportunities in the digital world.

Physics Professors Are Using AI Models as Physics Tutors

Many physics professors are now using AI models as personal tutors to enhance their understanding of the subject. This trend highlights a significant shift in how experts are leveraging AI to learn and uncover new insights in physics. As AI continues to advance, it is poised to play a crucial role in fields that were once considered uniquely human domains.

How To Focus On The Right Problems

Focusing on the right problems is crucial for effective problem-solving. Prioritize issues that significantly impact your goals and objectives. By addressing the most important challenges first, you can achieve better outcomes.

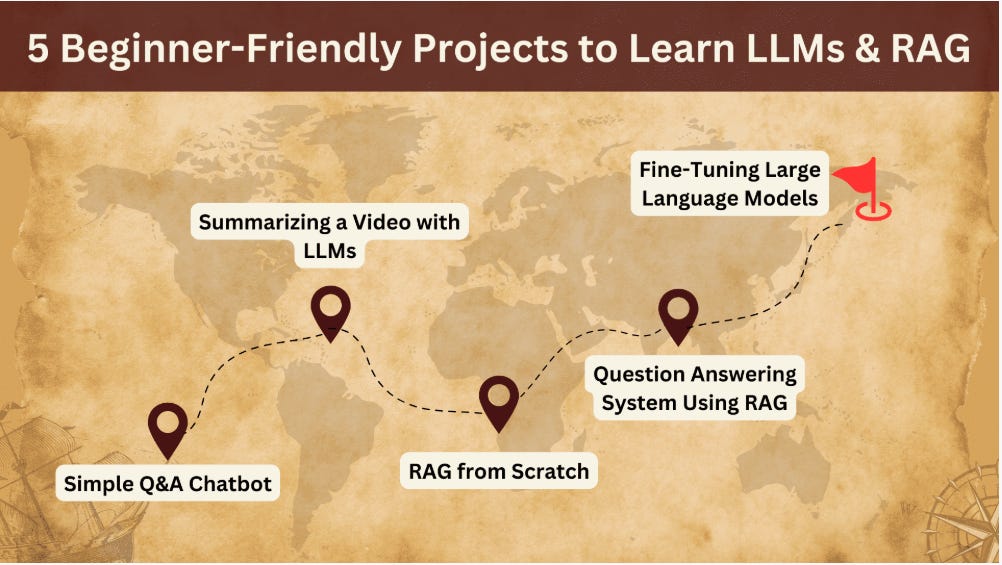

5 Beginner-Friendly Projects to Learn LLMs & RAG

Five beginner-friendly projects are outlined to help learners gain experience with Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG). The projects range from building a simple Q&A chatbot to fine-tuning LLMs for specific tasks, gradually increasing in complexity. Completing these projects will boost confidence and prepare learners for more advanced AI challenges.

The Ultimate Guide to Building a Machine Learning Portfolio That Lands Jobs

A strong portfolio is essential for machine learning job candidates to showcase their practical skills and differentiate themselves in a competitive market. Candidates should focus on a variety of projects, demonstrate their problem-solving process, and document their technical expertise clearly. Regularly updating the portfolio and sharing insights through blogs can enhance credibility and show readiness for real-world challenges.

7 Machine Learning Projects For Beginners

Seven beginner-friendly machine learning projects can help you learn essential skills and gain practical experience. Projects include Titanic survival prediction, stock price forecasting, email spam classification, and face detection, among others. Completing these projects will enhance your portfolio and boost your confidence in machine learning.

What is a Transformer?

Transformers are a powerful neural network architecture that revolutionized AI, particularly in deep learning and natural language processing. They use a self-attention mechanism to effectively capture relationships between tokens in a sequence, enabling tasks like text generation and more. Key components include token embeddings, attention layers, and a feed-forward network that refines token representations for accurate predictions.
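
To make the self-attention idea concrete, here is a minimal sketch in plain Python. It is an illustration under simplifying assumptions, not the article's code: there is a single head, and the query, key, and value projections are left as the identity, whereas a real transformer learns separate weight matrices for each. The function names `softmax` and `self_attention` are hypothetical.

```python
import math


def softmax(xs):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product self-attention.

    Assumption: Q, K, and V are the token vectors themselves
    (identity projections), which keeps the sketch short.
    """
    d = len(tokens[0])
    out = []
    for q in tokens:
        # Score this token against every token in the sequence.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
            for k in tokens
        ]
        weights = softmax(scores)
        # The new representation is a weighted average of all tokens.
        out.append(
            [sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)]
        )
    return out


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = self_attention(tokens)
```

Each row of `mixed` is a blend of every token in the sequence, weighted by how similar that token is to the one being updated. That blending is what lets the model capture relationships between tokens.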

How to Build Agentic AI[Agents]

Agentic AI focuses on creating effective AI systems by breaking down complex tasks into simpler steps and maintaining clear tool documentation. The approach emphasizes simplicity, transparency, and careful design of agent-computer interfaces to enhance user interaction and reduce errors. By balancing workflow-based and agent-based architectures, developers can build more efficient and reliable AI agents.

2024 in Post-Transformers Architectures (State Space Models, RWKV) [LS Live @ NeurIPS]

New models like RWKV and state space models are emerging as efficient alternatives to traditional transformers, offering constant memory usage during inference. These architectures focus on improving computational efficiency and understanding attention mechanisms for better performance on tasks requiring recall. The developments in this field aim to make AI more accessible and effective across various applications and contexts.
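
The "constant memory usage during inference" point is easier to see with a toy example. The sketch below is a scalar linear recurrence in the spirit of a state space model. It is not RWKV or any specific published architecture, and the parameters `a`, `b`, and `c` are made-up constants rather than learned values.

```python
def ssm_stream(inputs, a=0.5, b=1.0, c=1.0):
    """Toy scalar state space recurrence: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t.

    Assumption: real models use vector or matrix states and learned
    parameters; a single float stands in for the state here.
    """
    h = 0.0  # the only thing remembered about the past
    for x in inputs:
        h = a * h + b * x  # fold the new input into the fixed-size state
        yield c * h


outputs = list(ssm_stream([1.0, 0.0, 0.0]))
```

However long the input gets, the model carries only `h` forward. A standard transformer instead keeps keys and values for every previous token, so its inference memory grows with sequence length.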

Linux Context Switching Internals: Part 1 - Process State and Memory

The article explains how the Linux kernel manages processes through two main data structures: task_struct for execution state and mm_struct for memory state. These structures are essential for context switching, which allows the CPU to switch control between different processes safely. Understanding these fundamentals is crucial for grasping how the Linux kernel efficiently handles process management.

Nagasaki Was Bombed Against Direct Orders - Adam Brown

The bombing of Nagasaki may have occurred against direct orders, as the crew initially aimed for a different city but faced visibility issues. After multiple attempts, they decided to drop the bomb despite not clearly seeing their target, raising suspicions about their actions. This incident suggests that a significant portion of nuclear weapons used in combat might have been deployed without proper authorization.

6 AI Trends that will Define 2025

After 18 months of Generative AI advancements, key trends are emerging that will shape the future of AI in 2025. The article outlines six important research directions and engineering approaches that tech professionals, investors, and policymakers should focus on to stay competitive. Insights from previous years have shown the significance of trends like small language models and open-source innovations, particularly Meta's Llama model.

Deep: The UX of Search

The UX of in-product search is crucial for user engagement and retention, as effective search capabilities can enhance user satisfaction. This analysis reviews how over 20 leading tech companies, like LinkedIn and Amazon, are evolving their search functionalities with AI, predictive text, and advanced filtering options. It highlights various UX components that improve search experiences, making it easier for users to find what they need quickly.

AI Agents with Reasoning, Solving Complex Tasks with EASE!

AI agents can now perform complex tasks using human-like reasoning and decision-making. They can analyze errors and suggest solutions, enhancing their problem-solving capabilities. The article provides a guide to creating these agents with simple coding steps and examples.

A Comprehensive Analytical Framework for Mathematical Reasoning in Multimodal Large Language Models

Mathematical reasoning in artificial intelligence is evolving, especially with Large Language Models (LLMs) that now incorporate multimodal inputs like diagrams and graphs. Researchers have identified five major challenges that hinder progress in this field, such as limited visual reasoning and domain generalization issues. A comprehensive analysis reveals significant advancements in specialized Math-LLMs, highlighting the need for more robust models to tackle these complexities.

Top 25 AI Tools for Content Creators in 2025

AI-powered tools are transforming content creation by making it easier for creators to produce high-quality videos, graphics, and written content. The top 25 tools highlighted offer features that enhance creativity, save time, and streamline workflows across various applications. From video editing to music composition, these solutions empower users to focus on their creative strategies.

DeepSeek-AI Just Released DeepSeek-V3: A Strong Mixture-of-Experts (MoE) Language Model with 671B Total Parameters with 37B Activated for Each Token

DeepSeek-AI has launched DeepSeek-V3, a powerful open-source language model with 671 billion parameters and 37 billion activated per token. This model addresses key challenges in NLP, such as computational efficiency and data utilization, while achieving impressive benchmark scores. With a training cost of $5.576 million, DeepSeek-V3 sets a new standard for accessible high-performance language models.
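
A quick back-of-the-envelope calculation shows why the mixture-of-experts design matters for efficiency. It uses only the two parameter counts quoted above; the variable names are just for illustration.

```python
total_params_b = 671   # total parameters, in billions (from the summary above)
active_params_b = 37   # parameters activated per token, in billions

# Fraction of the model that does work for any single token.
active_fraction = active_params_b / total_params_b
print(f"{active_fraction:.1%} of parameters are active per token")
```

Only about 5.5% of the model's parameters are used for any given token, which is a large part of how a model this size keeps per-token compute down.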

AWS Researchers Propose LEDEX: A Machine Learning Training Framework that Significantly Improves the Self-Debugging Capability of LLMs

Researchers from Purdue University, AWS AI Labs, and the University of Virginia introduced LEDEX, a new training framework aimed at enhancing the self-debugging capabilities of Large Language Models (LLMs). LEDEX improves code explanation and refinement through automated data collection and a combination of supervised fine-tuning and reinforcement learning. Initial results show significant performance improvements in LLMs, making them more effective at identifying and correcting code errors.

Google DeepMind Introduces Differentiable Cache Augmentation: A Coprocessor-Enhanced Approach to Boost LLM Reasoning and Efficiency

Google DeepMind has developed a new method called Differentiable Cache Augmentation, which enhances large language models (LLMs) by using a trained coprocessor to improve their reasoning abilities without increasing computational costs. This approach allows the LLM to generate a key-value cache that is enriched with additional latent embeddings, resulting in significant performance improvements on complex reasoning tasks. Evaluations showed accuracy gains, such as a 10.05% increase on the GSM8K dataset, demonstrating the method's effectiveness and scalability.

Meet SemiKong: The World’s First Open-Source Semiconductor-Focused LLM

SemiKong is the first open-source large language model specifically designed for the semiconductor industry, addressing the knowledge gap caused by retiring experts. It has been fine-tuned with semiconductor-specific data to improve chip design efficiency, reducing time-to-market by up to 30%. The integration of SemiKong with domain-expert agents streamlines processes and enhances the onboarding of new engineers, ultimately promoting innovation in semiconductor manufacturing.

Unveiling Privacy Risks in Machine Unlearning: Reconstruction Attacks on Deleted Data

Machine unlearning allows individuals to request the deletion of their data's influence on machine learning models, but it introduces new privacy risks. Researchers found that adversaries can exploit changes in model parameters before and after data deletion to accurately reconstruct deleted data, even from simple models. This highlights the need for safeguards like differential privacy to protect against potential privacy breaches.

Microsoft and Tsinghua University Researchers Introduce Distilled Decoding: A New Method for Accelerating Image Generation in Autoregressive Models without Quality Loss

Researchers from Microsoft and Tsinghua University have developed a method called Distilled Decoding (DD) to speed up image generation in autoregressive models without sacrificing quality. DD dramatically reduces the number of steps needed for image creation, achieving up to 217.8 times faster generation while maintaining acceptable output fidelity. This innovative approach allows for practical applications in real-time scenarios, addressing the long-standing trade-off between speed and quality in image synthesis.

Neural Networks for Scalable Temporal Logic Model Checking in Hardware Verification

A new machine learning approach uses neural networks to improve hardware verification by generating proof certificates for Linear Temporal Logic (LTL) specifications. This method enhances formal correctness and outperforms existing model checkers in speed and scalability across various hardware designs. Despite some limitations, it marks a significant advancement in integrating neural networks with symbolic reasoning for model checking.

Measure Up

Musical instruments have evolved over time, allowing for more complex and expressive music, as seen in Beethoven's work. The improvements in pianos during Beethoven's era enabled him to create pieces that were technically impossible for earlier instruments. Similarly, modern technology, like laptops and AI, enhances our ability to create and explore new ideas, paralleling the advancements in musical instruments.

Optimizing Machine Learning Models for Production: A Step-by-Step Guide

Optimizing machine learning models for production involves careful planning and execution throughout their development lifecycle, starting from understanding the business problem. Key steps include data preparation, model training, and continuous monitoring to ensure efficiency and adaptability. Incorporating security measures and a CI/CD approach further enhances the reliability and performance of deployed models.

10 Podcasts That Every Machine Learning Enthusiast Should Subscribe To

Podcasts are an engaging way to learn about the fast-evolving field of machine learning. The article lists ten recommended podcasts that cater to everyone from beginners to experts, covering diverse topics like AI applications, ethical issues, and practical advice. Listening to these podcasts helps enthusiasts stay informed about the latest trends and developments in AI.

5 Tools for Visualizing Machine Learning Models

Machine learning models can be complex, and visualizing them helps in understanding their structure and performance. Five recommended tools for this purpose include TensorBoard, SHAP, Yellowbrick, Netron, and LIME, each offering unique features for model evaluation and interpretability. These tools enhance insights into model behavior and predictions, making it easier to work with machine learning.

6 Language Model Concepts Explained for Beginners

Large language models (LLMs) predict word sequences by learning patterns from extensive text data. Key concepts include tokenization, word embeddings, attention mechanisms, transformer architecture, and the processes of pretraining and fine-tuning. Understanding these foundational ideas helps unlock the potential of LLMs in various applications.

This open problem taught me what topology is

An open problem in topology asks whether every closed continuous loop has an inscribed square. This question involves mapping pairs of points on the loop to three-dimensional space, leading to the discovery of self-intersection points that correspond to inscribed rectangles. The relationship between these pairs can be represented using a Möbius strip, illustrating how different geometric concepts are interconnected.

The 10 most viewed blog posts of 2024

Amazon is seeking talented Applied Scientists to develop innovative machine learning solutions that enhance customer experiences and optimize product returns. The roles involve collaborating with diverse teams to create AI-powered systems for various applications, including health initiatives and core shopping features. Candidates will have opportunities for career growth while working on high-impact projects in a fast-paced environment.

The 10 most viewed publications of 2024

The most viewed publications by Amazon scientists in 2024 cover diverse topics such as e-commerce knowledge graphs, cloud databases, and recession prediction. Notable works include a new text-to-speech model and advancements in anomaly detection using graph diffusion models. These research papers highlight innovative systems and frameworks aimed at improving performance and user experience across various applications.

Top 25 AI Tools for Increasing Sales in 2025

AI technologies are transforming sales and customer relationships, allowing businesses to improve lead generation and engagement. The article highlights 25 AI tools that help companies automate processes, gain insights, and enhance customer experiences. These tools empower businesses of all sizes to compete effectively in the marketplace.

8 Insights to Make Sense of OpenAI o3

OpenAI o3 has achieved remarkable performance on challenging benchmarks, surpassing human and specialist capabilities. The article explores the implications of this breakthrough and raises important questions about the future of AI, including accessibility and competition. It aims to provide insights to help readers navigate the evolving landscape of artificial intelligence.

Thanks for reading!

Always be (machine) learning,

Logan
