Hi Everyone!

Here are this week’s events and resources every AI Engineer should know about. Subscribe if you want them in your inbox each week. Make sure to support the authors of the resources.

I’ve switched up how I’m handling the full list of resources for paying subscribers. Let me know what you think of the change. The previous method caused all sorts of issues in the Substack editor because the list was too long.

A huge thanks to all my supporters! You can support my writing for just $2/mo.

If you’re interested in learning AI/machine learning, check out the roadmap I put together to learn it entirely for free here. Enjoy this week’s resources!

Always be (machine) learning,

Logan

Reasoning is here to stay

There were many AI model releases across different applications this past week, and one big takeaway is that reasoning models are here to stay. Reasoning models combine the pattern recognition of traditional ML models with an explicit reasoning process, which gives the model more context for working through information and lets it scale at inference time.

Scaling at inference time means the model uses more compute when it is queried (as opposed to when it is trained) to produce better answers. These models typically generate intermediate reasoning, such as step-by-step logic, within a single response before giving a final answer. This lets them adapt better to new situations and reason with more nuance.
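One simple, well-known way to spend more compute at inference time is self-consistency: sample several reasoning paths for the same question and take a majority vote over their final answers. This is not a description of how any model released this week works. It's a minimal sketch under a simplified assumption that each sample is independently correct with probability `p`, and it shows why extra samples can help when a single sample is better than a coin flip.

```python
from collections import Counter
from math import comb


def majority_vote(answers: list[str]) -> str:
    """Return the most common final answer among sampled responses.

    Counter.most_common orders equal counts by first occurrence,
    so ties go to the answer that was sampled first.
    """
    return Counter(answers).most_common(1)[0][0]


def p_majority_correct(p: float, n: int) -> float:
    """Probability that more than half of n samples are correct.

    Simplifying assumption: each sample is independently correct with
    probability p (a binary right/wrong model, not a real LLM).
    """
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


if __name__ == "__main__":
    print(majority_vote(["42", "41", "42", "40", "42"]))  # 42
    for n in (1, 5, 25):
        print(n, round(p_majority_correct(0.6, n), 3))
```

Under this toy model, voting only helps when `p` is above 0.5; if a single sample is usually wrong, more samples amplify the error instead.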

Reasoning matters because it answers a common criticism: some argued that AI performance gains were stalling as scaling at training time showed diminishing returns. Reasoning models have countered this by using inference-time scaling to boost performance, often beyond what training-time scaling alone could achieve, though both approaches remain valuable.

Reasoning lets models be more creative in their problem-solving and opens AI up to many more applications that require strategic problem-solving. It doesn't perfectly emulate human reasoning, but further development of inference-time scaling should bring better and broader capabilities to models over time.

If you want more detail about how reasoning models work, check out “Demystifying Reasoning Models” in this week’s top ten resources.

Events you should know about

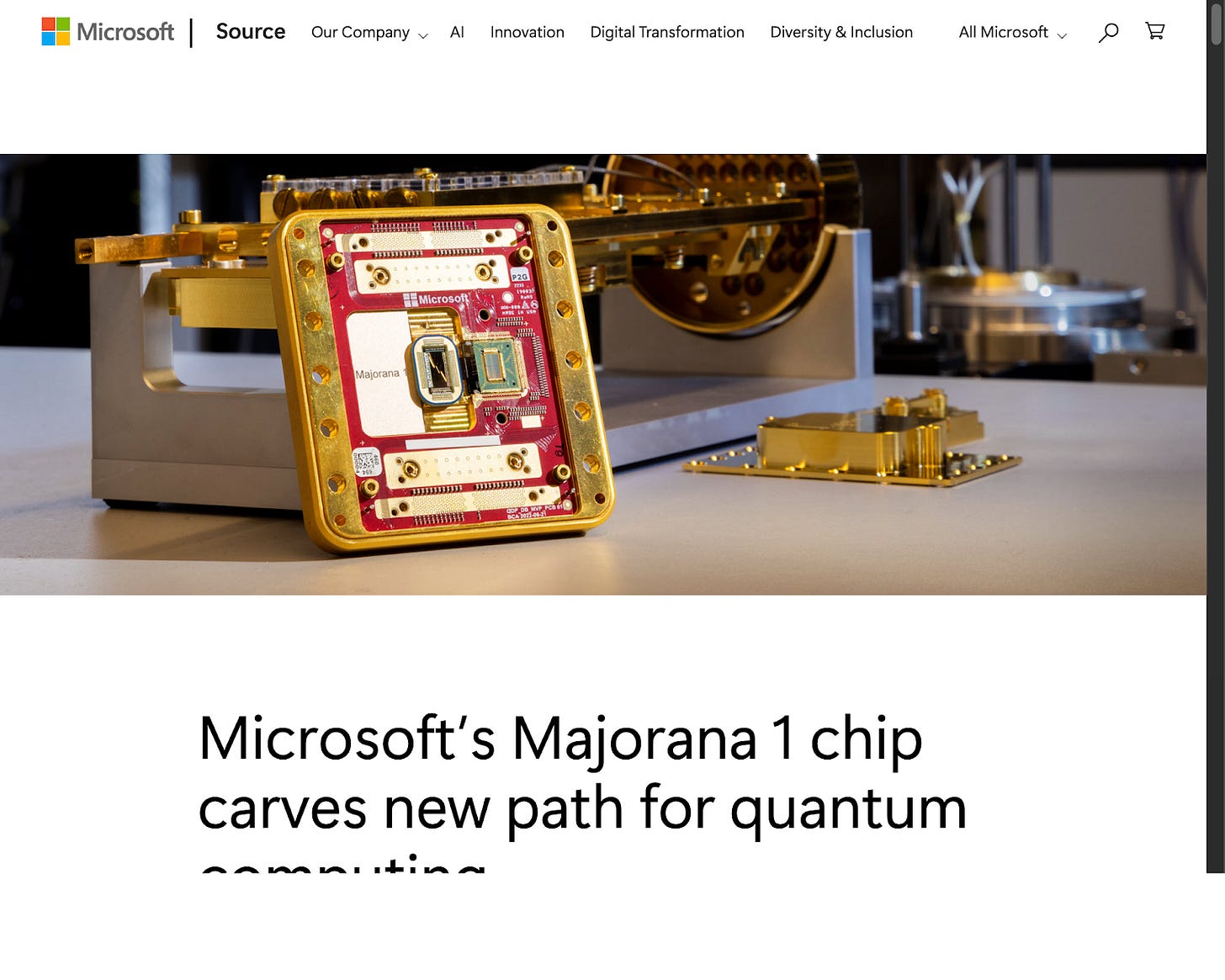

Microsoft released the Majorana 1 chip, which uses topological qubits designed for greater stability and scalability and could eventually let quantum computers solve complex problems faster. Microsoft suggests this puts useful quantum computing years away rather than decades, as previously thought. More competition in this space is good for everyone and will push quantum computing development along.

Google launched an AI co-scientist to assist scientists by generating hypotheses and summarizing literature across many scientific fields. These are the types of use cases many professionals are hopeful for regarding AI.

Google released PaliGemma 2 mix, an open vision-language model that processes images and text for tasks like captioning and object detection. It improves on the original PaliGemma and can handle more tasks.

Evo 2 is a generative model for designing genetic sequences, trained on genomic data from over 128,000 species. It offers insights into DNA, RNA, and proteins and could transform genetic engineering and disease research.

xAI released Grok 3 along with its Think (reasoning) and DeepSearch features, claiming it outperforms competitors on math, science, and coding. xAI has reached the state of the art in a relatively short time, which suggests its investment in massive training clusters is paying off.

What you missed last week

Resources you need

Jeff Dean & Noam Shazeer – 25 years at Google: from PageRank to AGI

AI models are rapidly improving and have the potential to significantly enhance productivity in coding and data synthesis. Researchers envision a future where continual learning allows models to evolve over time, making research faster and more efficient. The growth of AI capabilities may lead to more innovative applications and the ability to tackle complex problems more effectively.

Microsoft’s Majorana 1 chip carves new path for quantum computing

Microsoft has launched Majorana 1, the first quantum chip built on its new Topological Core architecture, aiming to enable quantum computers that can tackle complex problems in a fraction of the time. The chip uses topoconductors to create more stable qubits, and Microsoft says the approach could scale to a million qubits. The advances made with Majorana 1 could transform industries by solving problems that current computers cannot.

Accelerating scientific breakthroughs with an AI co-scientist

The AI co-scientist is a collaborative tool designed to help scientists generate novel research hypotheses and experimental protocols. It uses a multi-agent system to improve outputs iteratively, leading to higher quality and more impactful results. Early evaluations show that the AI co-scientist can accelerate scientific discoveries by uncovering original knowledge and improving research efficiency.
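Google's write-up describes the co-scientist ranking its generated hypotheses through tournaments scored with an Elo-style rating. The sketch below shows only the standard Elo update applied to pairwise hypothesis comparisons. The `matches` list, and the judge that would produce it, are hypothetical stand-ins, not Google's implementation.

```python
def expected_score(r_a: float, r_b: float) -> float:
    """Standard Elo expected score of A against B (between 0 and 1)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_update(r_a: float, r_b: float, a_won: bool, k: float = 32.0) -> tuple[float, float]:
    """Return new ratings for A and B after one pairwise comparison."""
    e_a = expected_score(r_a, r_b)
    s_a = 1.0 if a_won else 0.0
    new_a = r_a + k * (s_a - e_a)
    new_b = r_b + k * ((1.0 - s_a) - (1.0 - e_a))
    return new_a, new_b


def run_tournament(
    ratings: dict[str, float], matches: list[tuple[str, str, str]], k: float = 32.0
) -> dict[str, float]:
    """Apply (hypothesis_a, hypothesis_b, winner) results in order.

    In a real system a judge (hypothetical here) would decide each winner.
    """
    for a, b, winner in matches:
        ratings[a], ratings[b] = elo_update(ratings[a], ratings[b], winner == a, k)
    return ratings


if __name__ == "__main__":
    ratings = {"H1": 1200.0, "H2": 1200.0, "H3": 1200.0}
    matches = [("H1", "H2", "H1"), ("H1", "H3", "H1"), ("H2", "H3", "H3")]
    ranked = sorted(run_tournament(ratings, matches).items(), key=lambda kv: kv[1], reverse=True)
    for name, rating in ranked:
        print(name, round(rating, 1))
```

Elo is convenient for this kind of loop because it turns many cheap pairwise judgments into a single running ranking, so weaker hypotheses can be pruned and stronger ones refined.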

Introducing PaliGemma 2 mix: A vision-language model for multiple tasks

PaliGemma 2 mix is a versatile vision-language model that can handle multiple tasks like captioning, object detection, and optical character recognition. It comes in 3B, 10B, and 28B parameter sizes and integrates with popular tools like Hugging Face Transformers and PyTorch. Users can quickly explore its capabilities and fine-tune it for specific tasks through comprehensive documentation and resources.
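For object detection, PaliGemma models return boxes as text rather than as tensors. The sketch below assumes the output format described in Google's PaliGemma documentation: for a `detect` prompt the model emits four `<locNNNN>` tokens per object (in the order y_min, x_min, y_max, x_max, each a bin from 0 to 1023 on a 1024-bin grid), followed by the label, with objects separated by ` ; `. It only parses a string and does not load the model; check the model card before relying on this format.

```python
import re
from dataclasses import dataclass

# Four <locNNNN> tokens followed by a label, e.g. "<loc0256><loc0128><loc0768><loc0896> cat"
_DETECTION = re.compile(r"((?:<loc\d{4}>){4})\s*([^;<]+)")
_LOC = re.compile(r"<loc(\d{4})>")


@dataclass
class Box:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def parse_detections(text: str, width: int, height: int) -> list[Box]:
    """Convert assumed PaliGemma-style detection text into pixel-space boxes."""
    boxes = []
    for locs, label in _DETECTION.findall(text):
        # Assumed token order: y_min, x_min, y_max, x_max, normalized by 1024
        y0, x0, y1, x1 = (int(v) / 1024 for v in _LOC.findall(locs))
        boxes.append(Box(label.strip(), x0 * width, y0 * height, x1 * width, y1 * height))
    return boxes


if __name__ == "__main__":
    sample = "<loc0256><loc0128><loc0768><loc0896> cat ; <loc0000><loc0000><loc0512><loc0512> dog"
    for box in parse_detections(sample, width=1024, height=512):
        print(box)
```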

Genome modeling and design across all domains of life with Evo 2

Evo 2 is a groundbreaking biological foundation model trained on 9.3 trillion DNA base pairs spanning all domains of life. It predicts the effects of genetic mutations and generates new genomic sequences without task-specific training. Evo 2's open-access release aims to speed up research and innovation in genomic and epigenomic biology.
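Zero-shot mutation-effect prediction with a genomic language model is commonly done by comparing the model's likelihood of a mutated sequence against the reference: a variant the model finds much less likely is flagged as more likely to be disruptive. The sketch below illustrates only that likelihood-ratio idea. The tiny `KmerModel` is a hypothetical stand-in for Evo 2 (a far larger neural model), and nothing here uses Evo 2's actual API.

```python
import math
from collections import defaultdict

ALPHABET = "ACGT"


class KmerModel:
    """Toy order-k Markov model over DNA; a hypothetical stand-in for a genomic LM."""

    def __init__(self, k: int = 3):
        self.k = k
        self.counts = defaultdict(lambda: defaultdict(int))

    def fit(self, sequences: list[str]) -> "KmerModel":
        # Count which base follows each k-length context
        for seq in sequences:
            for i in range(self.k, len(seq)):
                self.counts[seq[i - self.k:i]][seq[i]] += 1
        return self

    def log_likelihood(self, seq: str) -> float:
        total = 0.0
        for i in range(self.k, len(seq)):
            ctx = self.counts.get(seq[i - self.k:i], {})
            # Add-one smoothing so unseen bases and contexts get nonzero probability
            p = (ctx.get(seq[i], 0) + 1) / (sum(ctx.values()) + len(ALPHABET))
            total += math.log(p)
        return total


def variant_effect(model: KmerModel, ref: str, pos: int, alt: str) -> float:
    """Delta log-likelihood of the mutated sequence versus the reference.

    Negative scores mean the model finds the variant less likely.
    """
    mut = ref[:pos] + alt + ref[pos + 1:]
    return model.log_likelihood(mut) - model.log_likelihood(ref)


if __name__ == "__main__":
    model = KmerModel(k=3).fit(["ATGATGATGATGATGATGATG"])
    ref = "ATGATGATGATGATG"
    print(variant_effect(model, ref, 7, "C"))
```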

Grok 3 Beta — The Age of Reasoning Agents

Grok 3 is the latest AI model from xAI, featuring advanced reasoning and extensive pretraining knowledge. It can solve complex problems by thinking for seconds to minutes and correcting its own errors. Alongside Grok 3, the cost-efficient Grok 3 mini is also being introduced for simpler STEM tasks.

Satya Nadella – Microsoft’s AGI Plan & Quantum Breakthrough

Microsoft has made significant breakthroughs with the Majorana 1 chip and Muse, its World and Human Action Model (WHAM), and expects advances like these to drive demand for advanced computing infrastructure. The company envisions a future where AI and quantum computing transform workflows and productivity. Satya Nadella believes these technologies will ultimately solve fundamental human challenges and lead to substantial advancements in various fields.

AI Job Pulse: Companies Make Finding AI Jobs Really Difficult

Job hunting for AI roles is challenging despite an increase in job listings, because many postings lack clarity and specific requirements. Most companies demand excessive experience, which makes it hard for qualified candidates to tell whether they fit a role. Still, there are many high-paying opportunities for software engineers interested in AI, so gaining familiarity with the field is worthwhile.

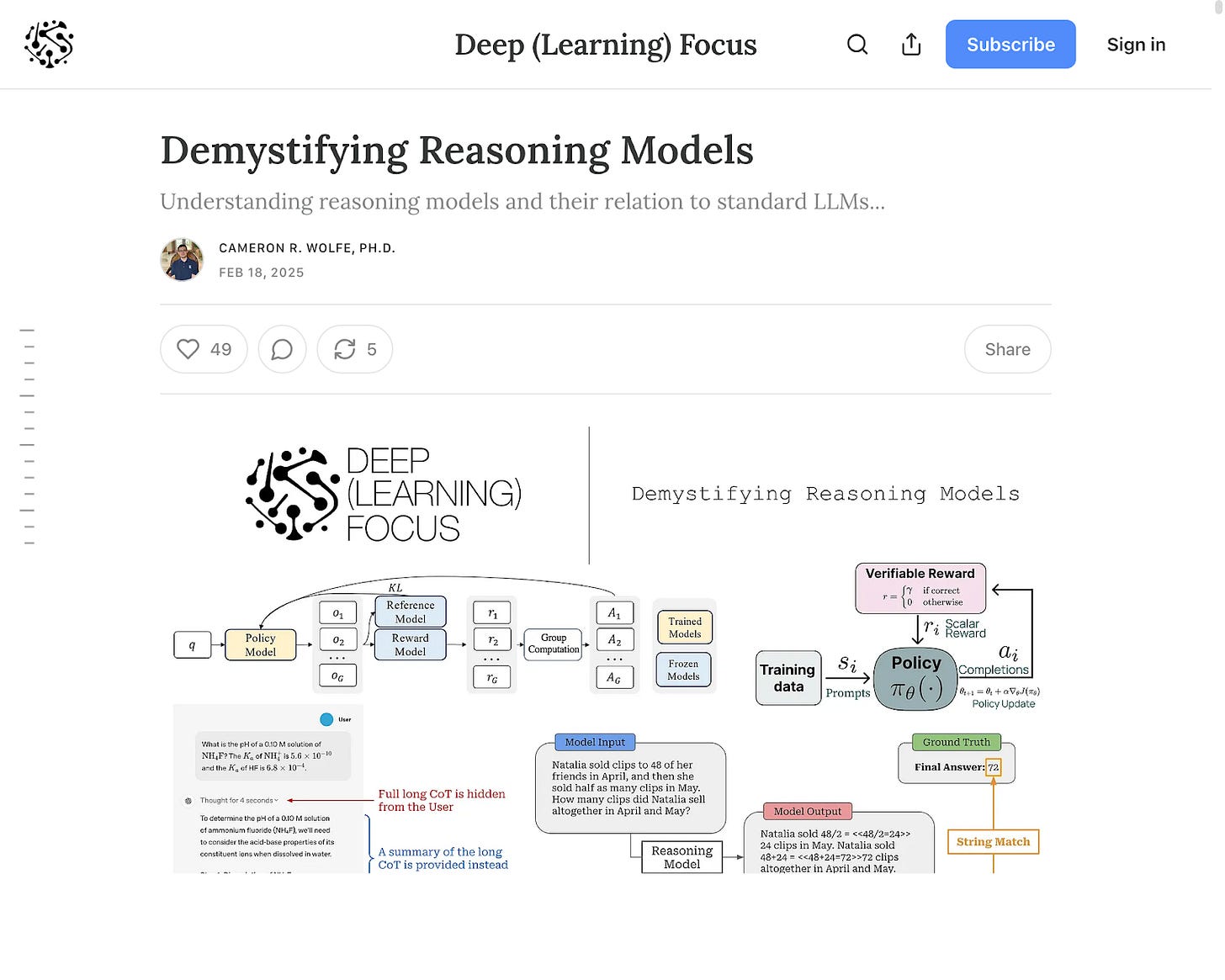

Demystifying Reasoning Models

Recent advances in reasoning models, particularly from OpenAI, have significantly improved AI's ability to solve complex tasks like math and coding. Newer models such as DeepSeek-R1-Zero show that reasoning skills can emerge through pure reinforcement learning, without supervised fine-tuning. This ongoing research is leading to more efficient reasoning models that can autonomously improve their capabilities.
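DeepSeek reports training R1-Zero with GRPO (Group Relative Policy Optimization) and rule-based rewards instead of a learned reward model. A core step is sampling a group of answers to the same prompt, scoring each, and normalizing every reward against the group's mean and standard deviation. The sketch below shows only that advantage computation. The `Answer:` format and `rule_based_reward` are hypothetical, it uses the population standard deviation (implementations differ), and the full policy-gradient update (clipped ratio, KL penalty) is omitted.

```python
from statistics import mean, pstdev


def rule_based_reward(completion: str, reference_answer: str) -> float:
    """Hypothetical accuracy reward: 1.0 if the text after the last 'Answer:' matches."""
    _, sep, answer = completion.rpartition("Answer:")
    return 1.0 if sep and answer.strip() == reference_answer else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """GRPO-style advantages: each reward relative to its own sampling group.

    eps avoids division by zero when every sample gets the same reward
    (in which case all advantages are 0 and the group provides no signal).
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    completions = [
        "3 * 4 = 12. Answer: 12",
        "3 + 4 = 7, so... Answer: 15",
        "Multiply: Answer: 12",
        "I am not sure",
    ]
    rewards = [rule_based_reward(c, "12") for c in completions]
    print(rewards)
    print(group_relative_advantages(rewards))
```

Because advantages are relative to the group, correct answers are pushed up and incorrect ones pushed down without training a separate value model, which is part of what makes this setup cheap enough for pure-RL training.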

How Will Foundation Models Make Money in the Era of Open Source AI? [Markets]

Foundation model providers can monetize their offerings through subscription models and API-based billing, each with distinct advantages and challenges. Subscriptions offer stable revenue but can limit growth, while APIs allow for uncapped earnings but face intense price competition. Companies may also explore strategies inspired by luxury brands, such as creating gated access to high-tier models, to enhance profitability and brand desirability.

All Resources

Keep reading with a 7-day free trial

Subscribe to Society's Backend to keep reading this post and get 7 days of free access to the full post archives.